Year after year, satellites soar into space, each following its own orbit and observing our planet from above. As of March 7, 2024, there are 9,494 active satellites in various Earth orbits, 1,052 of which are dedicated to Earth observation (EO) missions.

Of the satellites currently active, 72% are considered “small satellites,” a category that includes super-microsatellites, microsatellites, and nanosatellites. As more of them launch, Earth observation data is becoming ever more available: our eyes in the sky are continuously collecting, storing, and sending data back to Earth.

The sheer volume of EO data being generated, estimated at 20 TB per day from the Sentinel satellites alone, presents significant opportunities and challenges. The combined fleet of Sentinel-1, Sentinel-2, and Sentinel-3 has also been widely adopted across industries, particularly for AI uses. Using AI in EO has evolved from an opportunity into a necessity. Data volumes have grown so large that rapid, precise processing now depends on AI technologies, and without AI it would be virtually impossible to analyze the data efficiently and extract meaningful insights.

This leads us to ask questions such as:

How do we know what kind of data is useful? What exactly is EO data quality? Why have scientific datasets like Sentinel been so widely adopted?

What data quality actually means, and why scientific data is so often chosen over commercial data, is a question the industry frequently leaves unaddressed. That is why we’re focusing on it in this article.

Before we begin, though, it’s important to mention upfront that yes, scientific datasets are free, and this is a huge reason for their widespread use. But they are also ideal for efficient processing by automated algorithms, as we’ll see further down in the article.

What is data quality?

When we think about data quality, it’s usually assumed that it refers to the often-mentioned spatial resolution. It is, though, a lot more nuanced than that, with many factors playing a role.

Let’s take a closer look at what we can call the three pillars of data quality:

1. Accuracy

Accuracy means that errors in the data are minimal or negligible. One example is radiometric accuracy, which can be influenced by atmospheric conditions, shadows, and topographic effects.

Spatial accuracy is another type to consider. It can be degraded by lens distortion, time synchronization issues, and the data processing policies of different providers. It can be improved by referencing imagery to ground control points.
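The sketch below shows what ground control point referencing can look like at its simplest. It assumes a handful of GCPs whose positions are known both in the image (column, row) and on the map (x, y). From these it fits a six-parameter affine transform by least squares. The function names and the sample coordinates are hypothetical. Operational pipelines usually rely on rigorous sensor models or higher-order corrections rather than a single affine fit.

```python
def solve_3x3(m, v):
    """Solve the 3x3 linear system m @ x = v using Cramer's rule."""
    def det(a):
        return (a[0][0] * (a[1][1] * a[2][2] - a[1][2] * a[2][1])
                - a[0][1] * (a[1][0] * a[2][2] - a[1][2] * a[2][0])
                + a[0][2] * (a[1][0] * a[2][1] - a[1][1] * a[2][0]))
    d = det(m)
    if abs(d) < 1e-12:
        raise ValueError("GCPs are degenerate (e.g. collinear)")
    solution = []
    for col in range(3):
        # Replace one column of m with v to get the numerator determinant.
        mc = [[v[r] if c == col else m[r][c] for c in range(3)] for r in range(3)]
        solution.append(det(mc) / d)
    return solution


def fit_affine(gcps):
    """Least-squares affine fit from (col, row) pixels to (x, y) map coordinates.

    gcps: list of ((col, row), (x, y)) pairs, at least three non-collinear.
    Returns (a, b, c, d, e, f) with x = a*col + b*row + c, y = d*col + e*row + f.
    """
    # Normal equations (A^T A) p = A^T t, where each row of A is [col, row, 1].
    ata = [[0.0] * 3 for _ in range(3)]
    atx = [0.0] * 3
    aty = [0.0] * 3
    for (col, row), (x, y) in gcps:
        basis = (col, row, 1.0)
        for i in range(3):
            for j in range(3):
                ata[i][j] += basis[i] * basis[j]
            atx[i] += basis[i] * x
            aty[i] += basis[i] * y
    a, b, c = solve_3x3(ata, atx)
    d, e, f = solve_3x3(ata, aty)
    return a, b, c, d, e, f


def residuals(gcps, params):
    """Euclidean error of the fitted transform at each GCP (map units)."""
    a, b, c, d, e, f = params
    errors = []
    for (col, row), (x, y) in gcps:
        px, py = a * col + b * row + c, d * col + e * row + f
        errors.append(((px - x) ** 2 + (py - y) ** 2) ** 0.5)
    return errors


# Hypothetical GCPs for a north-up image with 10 m pixels.
gcps = [((0, 0), (500000.0, 4200000.0)),
        ((100, 0), (501000.0, 4200000.0)),
        ((0, 100), (500000.0, 4199000.0)),
        ((100, 100), (501000.0, 4199000.0))]
params = fit_affine(gcps)
```

The residuals at the GCPs give a quick check of spatial accuracy. In practice, they are usually complemented by independent checkpoints that were not used in the fit.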

2. Completeness

Completeness, too, depends on which kind of completeness you are considering. Spectral completeness is the extent to which a satellite sensor can capture and represent the full range of electromagnetic radiation across different wavelengths or spectral bands. Can we see beyond the range of the human eye?

Coverage completeness refers to whether a satellite sensor has observed the entire area of interest with minimal missing information or spatial gaps. In other words, it measures how fully the Earth’s surface, atmosphere, and other targets have been captured. Cloud cover is a major factor affecting the completeness and usability of optical satellite data.
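Coverage completeness can be expressed as a simple ratio: the share of pixels inside the area of interest (AOI) that were actually observed and are cloud-free. The sketch below assumes a per-pixel flag grid with hypothetical `CLEAR`, `CLOUD`, and `NO_DATA` codes. Real products encode this information in their own quality layers, with their own conventions.

```python
# Hypothetical per-pixel flags; real products use their own quality-layer codes.
CLEAR, CLOUD, NO_DATA = 0, 1, 2


def coverage_completeness(mask, aoi):
    """Fraction of AOI pixels that were observed and cloud-free.

    mask: 2D list of CLEAR / CLOUD / NO_DATA flags for one acquisition.
    aoi:  2D list of booleans, True where the pixel lies inside the AOI.
    """
    total = 0
    usable = 0
    for mask_row, aoi_row in zip(mask, aoi):
        for flag, inside in zip(mask_row, aoi_row):
            if inside:
                total += 1
                if flag == CLEAR:
                    usable += 1
    if total == 0:
        raise ValueError("AOI contains no pixels")
    return usable / total


mask = [
    [CLEAR, CLEAR, CLOUD, CLOUD],
    [CLEAR, CLEAR, CLOUD, NO_DATA],
    [CLEAR, CLEAR, CLEAR, NO_DATA],
]
aoi = [
    [True, True, True, False],
    [True, True, True, False],
    [True, True, True, False],
]
completeness = coverage_completeness(mask, aoi)  # 7 of 9 AOI pixels are usable
```

Pixels outside the AOI are ignored, so clouds or no-data over areas you don't care about do not lower the score.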

Lastly, day/night observation completeness measures the ability of satellite sensors to capture data effectively regardless of whether it is daytime or nighttime. Satellites achieve this with a combination of technologies. Sensors operating in the visible and near-infrared spectral bands capture detailed daytime information about coastal features, land cover, and other surface characteristics. Nighttime observation is more challenging because there is no sunlight. However, sensors using thermal infrared or active sensing techniques, such as synthetic aperture radar (SAR), can capture data during nighttime hours.

3. Consistency

Viewed through the lens of consistency, data quality depends on characteristics such as the revisit rate, viewing angles, and sun angles. The revisit rate is how often a satellite passes over a particular location on the Earth’s surface. A high revisit rate means that the satellite passes over the same area frequently, providing more opportunities to capture data and monitor changes over time.

Consistent revisits mean that data is collected at regular intervals, which makes it possible to detect short-term changes such as natural disasters, land cover dynamics, and vegetation growth.
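An average revisit rate can hide how regular the acquisitions actually are. The minimal sketch below computes both the mean interval and the longest gap from a list of acquisition dates. The dates are hypothetical. Both series have the same mean revisit, but only one of them is consistent.

```python
from datetime import date


def revisit_stats(acquisitions):
    """Return (mean interval, longest gap) in days between successive acquisitions."""
    days = sorted(set(acquisitions))
    if len(days) < 2:
        raise ValueError("need at least two acquisition dates")
    gaps = [(later - earlier).days for earlier, later in zip(days, days[1:])]
    return sum(gaps) / len(gaps), max(gaps)


# Hypothetical acquisition dates over the same location.
regular = [date(2024, 3, 1), date(2024, 3, 6), date(2024, 3, 11), date(2024, 3, 16)]
irregular = [date(2024, 3, 1), date(2024, 3, 2), date(2024, 3, 3), date(2024, 3, 16)]

mean_regular, max_regular = revisit_stats(regular)        # 5.0 days, 5-day max gap
mean_irregular, max_irregular = revisit_stats(irregular)  # 5.0 days, 13-day max gap
```

A short-lived event falling inside the 13-day gap of the irregular series would go unobserved, even though the headline revisit figure is identical.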

Viewing angles, which are closely related to incidence angles, describe how far from nadir (straight down) a satellite points when imaging a scene. Off-nadir imaging depends on the satellite’s “agility”: its ability to modify its attitude to observe scenes outside its ground track. Some satellites, such as systematic monitoring missions, are consistently nadir-looking. Agile satellites instead image at different angles, and some offer oblique image tasking (point-and-shoot imagers).
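On a curved Earth, the off-nadir viewing angle (measured at the satellite) and the incidence angle (measured at the ground) are related but not equal. The minimal sketch below assumes a spherical Earth with a mean radius of 6,371 km. From the law of sines in the triangle formed by Earth’s center, the satellite, and the target, it uses sin(incidence) = ((R + h) / R) · sin(off-nadir). The 500 km altitude in the example is hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; spherical-Earth assumption


def incidence_angle_deg(off_nadir_deg, altitude_km):
    """Incidence angle at the ground (degrees) for a given off-nadir look angle."""
    ratio = (EARTH_RADIUS_KM + altitude_km) / EARTH_RADIUS_KM
    s = ratio * math.sin(math.radians(off_nadir_deg))
    if s > 1.0:
        raise ValueError("look angle points past the Earth's limb")
    return math.degrees(math.asin(s))


nadir = incidence_angle_deg(0.0, 500.0)      # 0 degrees when looking straight down
oblique = incidence_angle_deg(30.0, 500.0)   # roughly 32.6 degrees at the ground
```

The gap between the two angles grows as the satellite looks further off-nadir. This is one reason why images from agile, point-and-shoot satellites are harder to compare with one another than consistently nadir-looking acquisitions.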

Sun angles refer to the angle at which sunlight strikes the planet’s surface relative to the observer’s position. Sun angles vary throughout the day and the year as seasons change. This influences illumination conditions and whether shadows are present in satellite imagery.

Consistency in sun angles means that satellite data is collected under similar lighting conditions, so that comparisons and analyses across different periods are accurate.
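The sketch below shows how sun angles shift through the year. It approximates the solar elevation angle from latitude, day of year, and local solar time, using Cooper’s declination formula. It ignores the equation of time, atmospheric refraction, and terrain, so treat it as a rough guide only. The 10:30 overpass time and the 45° N site are hypothetical examples. Even when a satellite always images at the same local solar time, the sun is much lower in winter than in summer.

```python
import math


def solar_elevation_deg(latitude_deg, day_of_year, local_solar_hour):
    """Approximate solar elevation angle in degrees.

    Uses Cooper's approximation for solar declination and ignores the
    equation of time, refraction, and terrain.
    """
    declination = math.radians(23.45) * math.sin(
        math.radians(360.0 / 365.0 * (284 + day_of_year)))
    hour_angle = math.radians(15.0 * (local_solar_hour - 12.0))  # 15 degrees per hour
    lat = math.radians(latitude_deg)
    sin_elev = (math.sin(lat) * math.sin(declination)
                + math.cos(lat) * math.cos(declination) * math.cos(hour_angle))
    sin_elev = max(-1.0, min(1.0, sin_elev))  # guard against rounding outside [-1, 1]
    return math.degrees(math.asin(sin_elev))


# Same hypothetical 10:30 local solar time, same site at 45 degrees N.
summer = solar_elevation_deg(45.0, 172, 10.5)  # around the June solstice
winter = solar_elevation_deg(45.0, 355, 10.5)  # around the December solstice
```

Here the summer sun is roughly 60° above the horizon and the winter sun under 20°. Shadows and illumination therefore differ strongly between the two dates even at a fixed overpass time, which is why seasonal comparisons often need illumination corrections.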

Any discussion of consistency also has to consider the acquisition mode: systematic pushbroom acquisition versus tasked point-and-shoot imaging. Together with the orbit and the hardware, the acquisition mode forms the foundation for high-quality data.

Data quality depends on your specific needs

After considering the three pillars of data quality, it’s clear that whether you judge data by its accuracy, completeness, or consistency, the definition of quality ultimately depends on your needs.

What counts as high-quality data for human eyes, as in visual mapping use cases, differs from what counts as high quality for computer and AI “eyes,” which can detect things that cannot be discerned by humans.

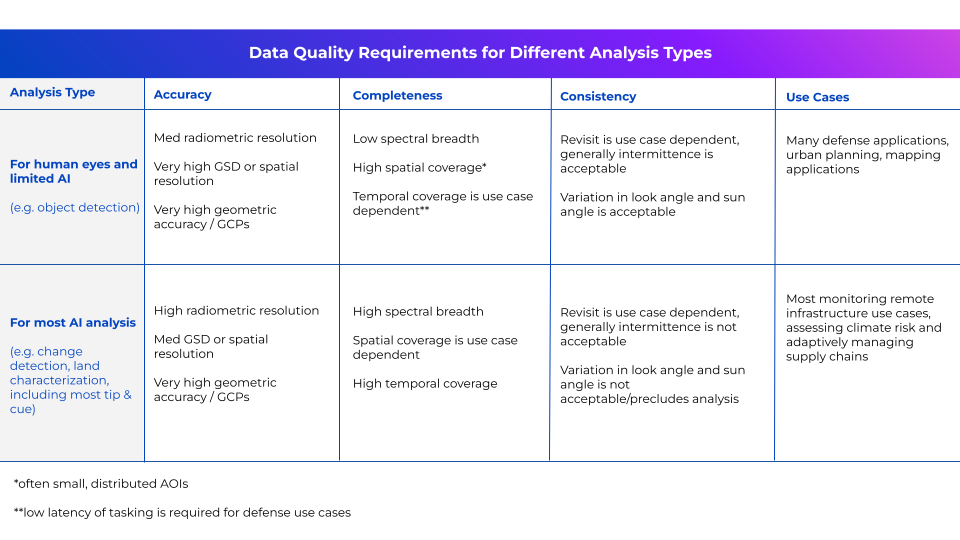

These data quality requirements for different types of analysis are crucial to consider when asking yourself what high-quality data is.

For most commercial data companies, high-quality data usually means point-and-shoot tasking: imaging places at different times of day and from different elevation angles. This variability makes it difficult for computers to run analytics and extract information.

Many scientific satellites, such as the Sentinel fleet, are not tasked at all. Instead, they follow a standardized acquisition plan. This makes their data easier to analyze automatically, and it makes the results of that analysis more reliable and interpretable.

This is because the more variables are controlled during measurement and observation, the better the measurement.

Why have scientific datasets been so widely adopted for AI?

The Sentinel fleet

As mentioned above, scientific satellites have standardized acquisitions, which makes data access and analysis easier. The Sentinel satellites were developed by the European Space Agency (ESA) as part of the Copernicus program. They form a fleet equipped with different sensors and capabilities to monitor various aspects of the Earth’s environment. For example, Sentinel-1 was designed as a constellation of two C-band SAR satellites (Sentinel-1A and Sentinel-1B) that provide all-weather, day-and-night radar imaging for applications such as climate studies and emergency response. Sentinel-2 comprises a pair of optical imaging satellites (Sentinel-2A and Sentinel-2B) carrying multispectral sensors that capture high-resolution imagery across 13 spectral bands, spanning the visible, near-infrared, and shortwave infrared. Sentinel-2 data is used for urban planning, water quality assessment, and forestry management.

The Sentinel satellites, together with their esteemed American counterpart, the Landsat mission, support a wide range of applications. They are an invaluable tool for policymakers, scientists, and the public to make informed decisions and address global environmental challenges.

The importance of scientific datasets in AI applications

Satellite data is currently undergoing an AI revolution. Thanks to artificial intelligence and machine learning techniques, we can extract insights more easily, quickly, and reliably than ever before. This also brings challenges. Let’s consider some of them and how the characteristics of scientific EO datasets address them.

Deep Learning or Computer Vision:

Deep learning algorithms require large amounts of high-quality data for effective training. The main challenge in applying them is the lack of large, labeled datasets to train on.

This is where scientific datasets shine. Scientific EO data’s consistency and completeness provide a robust training base for these algorithms, leading to more accurate and generalizable models in tasks like object detection, semantic segmentation, and image classification.

Deep learning models are particularly data-hungry and often require thousands of labeled images per class to perform well. Scientific EO missions offer vast archives of historical data, a significant advantage over commercial EO data. Commercial data may have limited temporal coverage or focus on specific areas of interest, which inherently biases a neural network toward those areas.

Anomaly or Change Detection:

Consistent, accurate data allows AI models, particularly change detection models, to compare feature representations across time and to generalize well to unseen data.

Variations in commercial EO data, such as those arising from different sensors, viewing geometries, or illumination conditions, can lead to models that overfit to specific acquisition conditions and perform poorly in multi-temporal analysis. As a result, anomaly or change detection on commercial datasets often produces inaccuracies and can be a lengthy process.

In contrast, the standardized nature of scientific EO data helps ensure that the features learned by AI models, and any changes they detect, are meaningful and transferable across different locations and time periods.
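The toy example below shows why acquisition differences matter for change detection. It uses simple image differencing with a threshold. The second "image" is uniformly darker, as if imaged under a lower sun, and only one pixel has genuinely changed. Naive differencing flags every pixel. A crude relative radiometric normalization rescales the second image by the median after/before ratio, which assumes most pixels did not change and that the illumination difference acts as a single gain factor. After that step, only the real change remains. The data and threshold are hypothetical, and this is not any particular provider’s method.

```python
from statistics import median


def detect_changes(before, after, threshold, normalize=True):
    """Return indices of pixels whose absolute difference exceeds `threshold`.

    If normalize is True, `after` is first divided by the median after/before
    ratio: a crude relative radiometric normalization assuming most pixels
    are unchanged and illumination differs by a single gain factor.
    """
    if normalize:
        ratios = [a / b for a, b in zip(after, before) if b != 0]
        gain = median(ratios)
        after = [a / gain for a in after]
    return [i for i, (b, a) in enumerate(zip(before, after)) if abs(a - b) > threshold]


# Hypothetical 1D "images": the second acquisition is 20% darker everywhere
# (e.g. a lower sun angle), and pixel 4 genuinely changed.
before = [100, 110, 120, 130, 140, 150, 160, 170, 180, 190]
after = [0.8 * v for v in before]
after[4] = 40.0

naive = detect_changes(before, after, threshold=15, normalize=False)     # every pixel
normalized = detect_changes(before, after, threshold=15, normalize=True)  # only pixel 4
```

Standardized acquisitions shrink the illumination and geometry differences this kind of correction has to undo, which is why results on such data tend to transfer better.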

Clustering:

Unsupervised learning techniques like clustering rely on identifying inherent groupings within the data. Clustering is widely used for analyzing and extracting insights from data so that patterns, structures, and relationships can be identified. A key challenge in applying clustering is dealing with data with high dimensionality, noise, and missing values.

The standardization of scientific EO data makes it easier to identify meaningful clusters representing natural phenomena than with commercial datasets. In commercial data, variations in acquisition parameters can produce clusters that are artifacts of those variations rather than true underlying patterns.

For example, a clustering algorithm applied to commercial EO data with varying sun angles might identify clusters that correspond to these sun angle variations instead of actual land cover types.
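The toy sketch below makes this concrete with two-cluster k-means on scalar brightness values. Forest and cropland pixels come from two hypothetical scenes: one imaged under a high sun, and one under a low sun that darkens everything. On the raw values, the clusters split by scene (illumination). After dividing each scene by a relative illumination factor, for example a ratio of cos(solar zenith) on flat terrain (assumed here, not measured), the clusters split by land cover instead. All values are invented for illustration.

```python
def kmeans_1d(values, iterations=100):
    """Two-cluster k-means on scalar values; returns a 0/1 label per value."""
    centers = [min(values), max(values)]  # deterministic initialisation
    labels = [0] * len(values)
    for _ in range(iterations):
        # Assignment step: each value joins its nearest center.
        new_labels = [0 if abs(v - centers[0]) <= abs(v - centers[1]) else 1
                      for v in values]
        # Update step: each center moves to the mean of its members.
        for k in (0, 1):
            members = [v for v, lab in zip(values, new_labels) if lab == k]
            if members:
                centers[k] = sum(members) / len(members)
        if new_labels == labels:
            break  # assignments stopped changing: converged
        labels = new_labels
    return labels


# Hypothetical brightness values: forest x3, then cropland x3, in each scene.
scene_high_sun = [78, 80, 82, 98, 100, 102]
scene_low_sun = [31, 32, 33, 39, 40, 41]
illumination = {"high": 1.0, "low": 0.4}  # assumed relative illumination factors

raw_labels = kmeans_1d(scene_high_sun + scene_low_sun)
corrected = ([v / illumination["high"] for v in scene_high_sun]
             + [v / illumination["low"] for v in scene_low_sun])
corrected_labels = kmeans_1d(corrected)
```

Here `raw_labels` groups pixels by scene, while `corrected_labels` groups forest with forest and cropland with cropland across both scenes. With consistently acquired data, much of this correction step becomes unnecessary.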

Commercial adoption of scientific datasets

Scientific datasets like Sentinel have been widely adopted by companies in sectors such as agriculture, insurance, land management, and water resource management. Why is that?

1. Availability and accessibility

Scientific missions such as the Sentinel satellites provide “free and openly accessible EO data”. This open-access model encourages a wider user community to access and derive insights from satellite data. That community includes commercial companies looking to innovate in their fields of interest by tapping into large volumes of free, high-quality EO data.

This availability and accessibility means that new opportunities are opening up in the commercial world for satellite data to be used by people who are new to the industry, beyond just experts.

2. Data characteristics and quality

The Sentinel fleet carries a technologically diverse set of sensors. Advanced instruments such as the Sea and Land Surface Temperature Radiometer (SLSTR) on Sentinel-3 provide high-quality data that can be costly and tricky to find elsewhere.

Another important data quality characteristic to consider is the ability of active sensors like SAR to provide reliable data regardless of cloud cover or day/night conditions—a key advantage for many commercial applications.

3. Diverse applications

Scientific datasets usually come with an incredibly wide range of potential commercial applications, including forestry, fishing, energy, insurance, urban development, agriculture, and many more.

Combining EO data with other, non-space types of data can lead to innovative commercial applications and insights. Essentially, companies can treat scientific datasets as a reliable, resourceful tool in their product-building toolbox.

4. Cost-effectiveness

Whether a company is starting from scratch or scaling an established business, cost-effectiveness is an important factor driving its adoption of scientific EO datasets.

Having free, open-access EO data available at the click of a button is a game-changer for many businesses today. Scientific datasets such as Sentinel can provide better value for money than commercial data procurement, especially when large quantities of data are needed. Satellite data has traditionally been notoriously difficult to access, with many hoops to jump through and costs to consider. Scientific datasets, on the other hand, offer a low-risk way to create EO products and services quickly.

Some companies using scientific datasets to make an impact include:

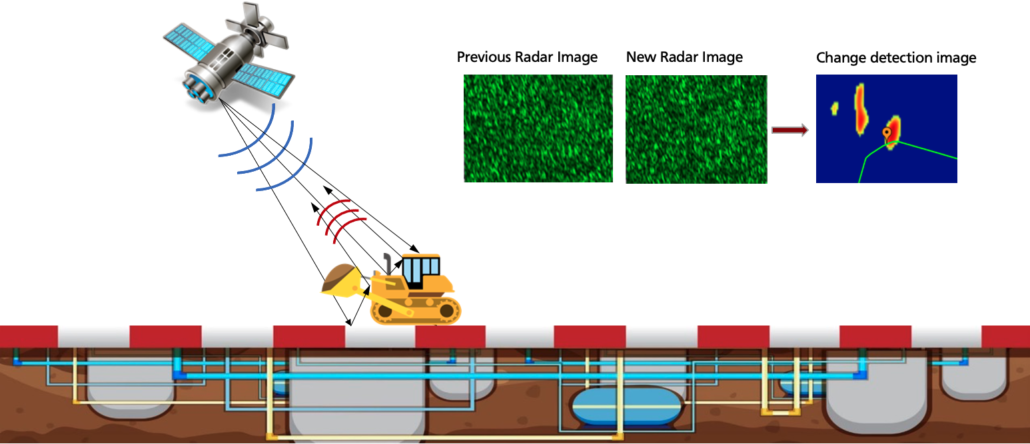

1. Orbital Eye uses Sentinel-1 for their CoSMiC-EYE solution, which automatically processes all collected satellite data. Its algorithms detect activities that could potentially damage pipelines. The technology uses change detection algorithms to detect third-party interference in the pipeline corridor and automatically produces a change map.

Classical- and AI-based filtering of irrelevant changes (Orbital Eye)

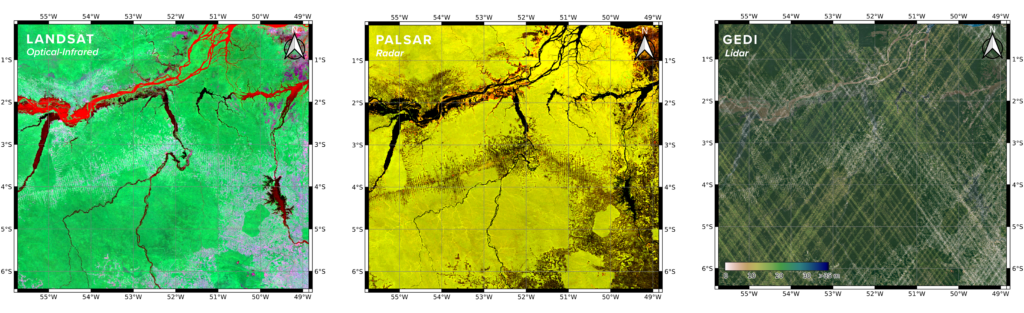

2. Pachama uses ESA’s Sentinel-2 and NASA’s GEDI (Global Ecosystem Dynamics Investigation) lidar for their forest height and carbon mapping. Sentinel-2 optical-infrared data lets them observe the “greenness” of the forest canopy, painting a picture of the amount of leafy material and chlorophyll present. GEDI, a lidar instrument mounted on the International Space Station, completes the picture. It produces a vertical profile of the forest, adding 3D structural information that enables Pachama to improve the accuracy of their forest carbon mapping.

Pachama uses three types of space-borne observations to map canopy height and carbon over large regions (optical-infrared, radar, and lidar). (Pachama)

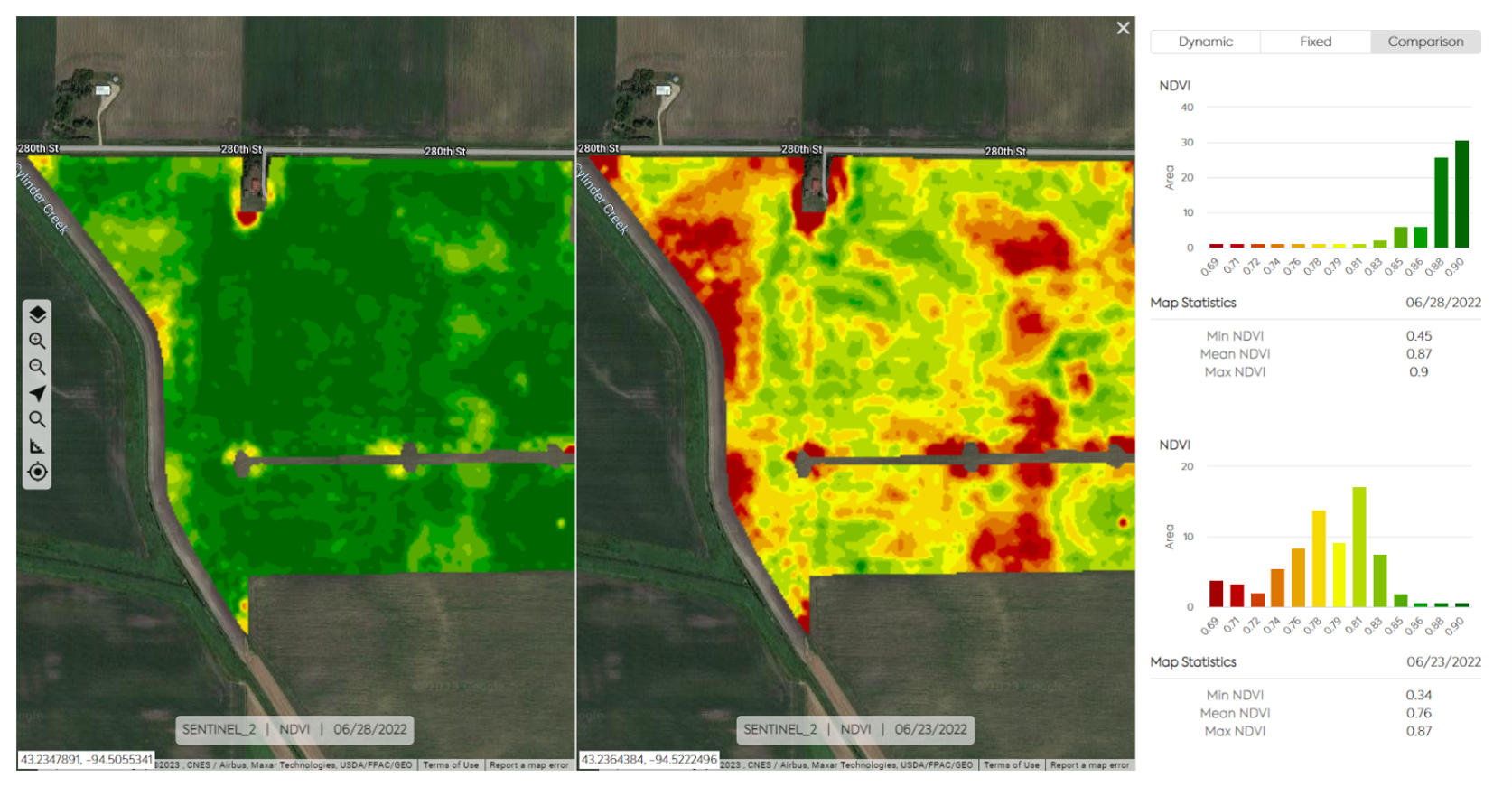

3. EarthDaily Agro uses ESA’s Sentinel-2 and other data sources to feed their precision agriculture, crop insurance, and commodity trading analytics. By integrating the Sentinel-2 data with other satellite sources like Landsat, EarthDaily Agro gains a comprehensive, high-frequency view of agricultural conditions globally. As a result, they provide timely insights to customers across industries. For crop insurance, EarthDaily Agro leverages Sentinel-2 data to verify planting dates, track crop progress, and forecast yields.

Their cloud-based platform simplifies the process of accessing and integrating Sentinel-2 data for customers creating their own applications and models.

How can we bridge the market gap between scientific datasets and commercial datasets to unlock more AI applications?

1. Recognize where needs are being met and where they aren’t

Earlier, we discussed several use cases where scientific datasets are successfully leveraged to solve pressing global challenges. Commercial datasets have well-proven use cases too, and urban planning is one of them. Urban planning projects benefit from high spatial resolution data for detailed monitoring of urban development and infrastructure changes. Even one or two comprehensive snapshots of an area per year can suffice.

For example, a city might use commercial datasets to monitor the construction progress of new buildings or to document informal settlements. This use case for existing commercial datasets is viable for several reasons: 1) urban areas are more frequently imaged by commercial providers, and 2) the relatively small area under observation means pre-processing demands can be minimal, making human-in-the-loop analysis feasible.

In contrast, scientific datasets, while beneficial, fall short in certain applications such as orchard monitoring. Orchard management needs consistent imaging at the same time of day so that temperature and spectral signatures are comparable between acquisitions. This is vital for accurately monitoring tree health and growth.

The high-quality sensors on scientific satellites provide the spectral information needed to assess vegetation health, detect diseases, and manage irrigation. However, their infrequent acquisitions are a significant limitation. Growers, crop insurers, and commodity traders need to monitor critical changes such as flower emergence, pest infestations, and moisture levels, which can occur rapidly and require timely intervention.

The relatively infrequent revisits of scientific datasets may miss these crucial moments, leading to delayed responses and potential yield loss. Additionally, the spatial resolution might not be sufficient to detect small-scale changes in individual trees or rows, which are often essential for precise orchard management.
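A back-of-the-envelope calculation shows how much revisit frequency matters for short-lived events such as flowering. Assume acquisitions every `revisit_days`. Then an event lasting `event_days` is guaranteed at least `event_days // revisit_days` looks. If we further assume that cloud cover is independent between acquisitions (a simplification), the worst-case chance of at least one clear look is 1 − p^n. The 7-day window and 50% cloud probability are hypothetical, not mission or crop figures.

```python
def prob_clear_view(event_days, revisit_days, cloud_prob):
    """Worst-case probability of at least one cloud-free look during an event.

    Assumes regular acquisitions every `revisit_days`, which guarantees
    event_days // revisit_days looks within the event window, and assumes
    cloud cover is independent between acquisitions.
    """
    looks = event_days // revisit_days
    return 1.0 - cloud_prob ** looks


# Hypothetical 7-day event with a 50% chance of cloud on any given pass.
five_day = prob_clear_view(7, 5, 0.5)   # one guaranteed look: 0.5
daily = prob_clear_view(7, 1, 0.5)      # seven guaranteed looks: 0.9921875
ten_day = prob_clear_view(7, 10, 0.5)   # no guaranteed look: 0.0
```

Even this crude model shows that a revisit interval longer than the event itself can miss it entirely. More frequent looks also compensate for cloud cover.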

2. Improve data interoperability between commercial and scientific datasets

One key way to bridge the market gap between scientific and commercial Earth observation datasets is to focus on data interoperability.

Scientific datasets like Sentinel offer high-quality, consistent, and openly accessible data, while commercial datasets often provide more specialized or customized data products tailored to specific industry needs.

By developing standards and protocols that enable seamless integration and exchange of data between these two data ecosystems, businesses can leverage the strengths of both to create more comprehensive and powerful AI applications.

This could involve adopting accessibility standards such as the SpatioTemporal Asset Catalog (STAC) and data quality standards such as Analysis Ready Data (ARD) across commercial data providers, as well as improving API access to scientific datasets.
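To see why a shared accessibility standard helps, consider a STAC API Item Search request. Its body uses the same fields (`collections`, `bbox`, `datetime`, `limit`) whether the catalog behind it serves scientific or commercial imagery. The minimal sketch below builds such a POST request with the Python standard library but does not send it. The endpoint URL and the `sentinel-2-l2a` collection ID are assumptions for illustration; check a real provider’s catalog for its actual values.

```python
import json
import urllib.request


def build_item_search(endpoint, collections, bbox, start, end, limit=10):
    """Build (but do not send) a STAC API Item Search POST request.

    bbox is [min_lon, min_lat, max_lon, max_lat]; start and end are
    RFC 3339 timestamps combined into a closed datetime interval.
    """
    body = {
        "collections": collections,
        "bbox": bbox,
        "datetime": f"{start}/{end}",
        "limit": limit,
    }
    return urllib.request.Request(
        endpoint,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical endpoint and collection ID.
req = build_item_search(
    "https://stac.example.com/search",
    ["sentinel-2-l2a"],
    [5.9, 45.8, 10.5, 47.8],
    "2024-03-01T00:00:00Z",
    "2024-03-31T23:59:59Z",
)
payload = json.loads(req.data)
```

Passing `req` to `urllib.request.urlopen` against a real STAC API would return matching items as a GeoJSON FeatureCollection. Switching providers would then mostly mean changing the endpoint and collection IDs, not the query logic.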

3. Create new commercial satellites with standardized acquisitions

How can combining consistent acquisition times with high-frequency data collection improve the accuracy and reliability of AI models in Earth observation, and what new applications could this enable?

One company in particular, EarthDaily, posits that the advancements in imaging technology with enhanced spectral breadth and signal-to-noise ratios, combined with scientifically accurate satellite calibration and processing, can revolutionize change detection and AI integration in the EO market, particularly in sectors underserved by existing datasets.

For example, such a dataset could address the orchard flower and disease emergence detection problem described above. Another application is disaster response. Companies already leverage existing commercial datasets, with their high revisit rates and high spectral resolution, for disaster response by focusing on rapid, detailed monitoring of affected areas.

When the location of a disaster is known, and a large company has a significant contract with various providers, timely acquisition of a tasked dataset becomes feasible. For instance, in the aftermath of a natural disaster such as a hurricane or earthquake, existing commercial datasets can quickly assess the extent of damage to infrastructure, monitor the progression of floodwaters, and identify areas that require immediate humanitarian assistance.

The high revisit rate ensures that changes are captured frequently, allowing responders to track the situation as it evolves and deploy resources more effectively. This frequent and detailed data collection supports better decision-making in real-time, enabling a swift response to mitigate further damage and coordinate relief efforts.

The high spectral resolution also aids in distinguishing between different materials and assessing the impact on vegetation, buildings, and roads, providing comprehensive situational awareness that is crucial for effective disaster response.

However, significant limitations arise when the disaster’s location is unknown, or the issue requires broad-area analysis, ruling out reliance on human-in-the-loop analysis.

This is the case with drought monitoring, pest and disease monitoring, many maritime risk use cases, and more.

Imagine a new dataset that combines the consistent acquisition model and data quality of scientific datasets with the high-frequency revisits and improved spatial resolution of commercial datasets currently on the market. With it, Earth observation users could use AI to monitor broad areas reliably and efficiently.

Once an area of concern is identified, they could cue the acquisition of one of the many responsive commercial datasets with higher spatial resolution to zoom in on the issue and provide comprehensive situational awareness.

Conclusion

The Sentinel and Landsat missions underscore the pivotal role of scientific datasets in driving innovation and addressing global challenges through AI-enabled analysis.

However, while these datasets offer unparalleled accessibility, global coverage, and consistency, there remains a critical gap in the market concerning the resolution and revisit frequency required for early change detection and dynamic monitoring. Bridging this gap will unlock new opportunities for leveraging EO data in commercial applications, enabling more effective and timely insights into our dynamic planet.

What’s clear, though, is that the industry continues to scour terabytes of imagery captured by scientific fleets to meet its own and its customers’ needs. Where will the next 5–10 years take us? We look forward to finding out!

This article was produced in collaboration with EarthDaily Analytics and co-authored by Nikita Marwaha Kraetzig and C. Elizabeth Duffy.
