NeRF Explained: Making High-Detail 3D Maps from Simple Photos

In the world of 3D mapping, we face a big problem. How do you turn hundreds of scattered, flat photographs into a seamless, realistic, and navigable digital world? We take thousands of drone photos and ground shots of a new development or a historic site, but stitching them together into a smooth, life-like 3D model is often slow and computationally expensive. The results rarely look quite right.

Enter Neural Radiance Fields, or NeRF. This AI-powered technique is rapidly changing expectations for what a digital twin should look like. NeRF uses deep learning to reconstruct complex 3D scenes from a simple collection of 2D images. The approach is less about measured geometry and more about learned light, giving us a digital reality layer that is strikingly photorealistic. Let’s answer some key questions about NeRF in a geospatial context, starting with how it turns ordinary photos into 3D worlds.

As the camera pose changes, NeRF generates the corresponding view. Source: dtransposed

1. How Does NeRF Start From Photos?

Traditionally, we build 3D worlds using explicit geometry, with huge files packed with meshes and textures. NeRF takes a completely different approach. It starts with photos of a location, works out where each camera was positioned (typically with a structure-from-motion step), and trains a small neural network to predict how the scene looks from any viewpoint.

Instead of storing massive geometric files, NeRF encodes the entire scene in the weights of the neural network. This is a huge advantage for mapping. While traditional 3D models grow huge as you capture city-scale detail, NeRF maintains high visual resolution without a proportional increase in file size. This makes it well suited to cloud-based digital twins where highly detailed scenes need to stream efficiently to users.
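To make the storage claim concrete, here is a rough back-of-the-envelope sketch. The layer sizes are assumptions chosen for illustration (63 encoded inputs, eight fully connected layers of 256 units, and 4 outputs for RGB plus density); they are not taken from any specific implementation, and the helper name `mlp_parameter_count` is hypothetical.

```python
def mlp_parameter_count(layer_sizes):
    """Count the weights and biases of a fully connected network."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


# Assumed architecture: 63 inputs, eight hidden layers of 256 units,
# 4 outputs (RGB color plus volume density).
layer_sizes = [63] + [256] * 8 + [4]

params = mlp_parameter_count(layer_sizes)
size_mb = params * 4 / 1e6  # 4 bytes per 32-bit float

print(f"{params:,} parameters, about {size_mb:.1f} MB")
```

Under these assumptions the whole scene fits in a couple of megabytes, and that figure does not change with the number of input photos. Larger scenes usually need bigger or multiple networks, so treat this as an order-of-magnitude illustration only.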

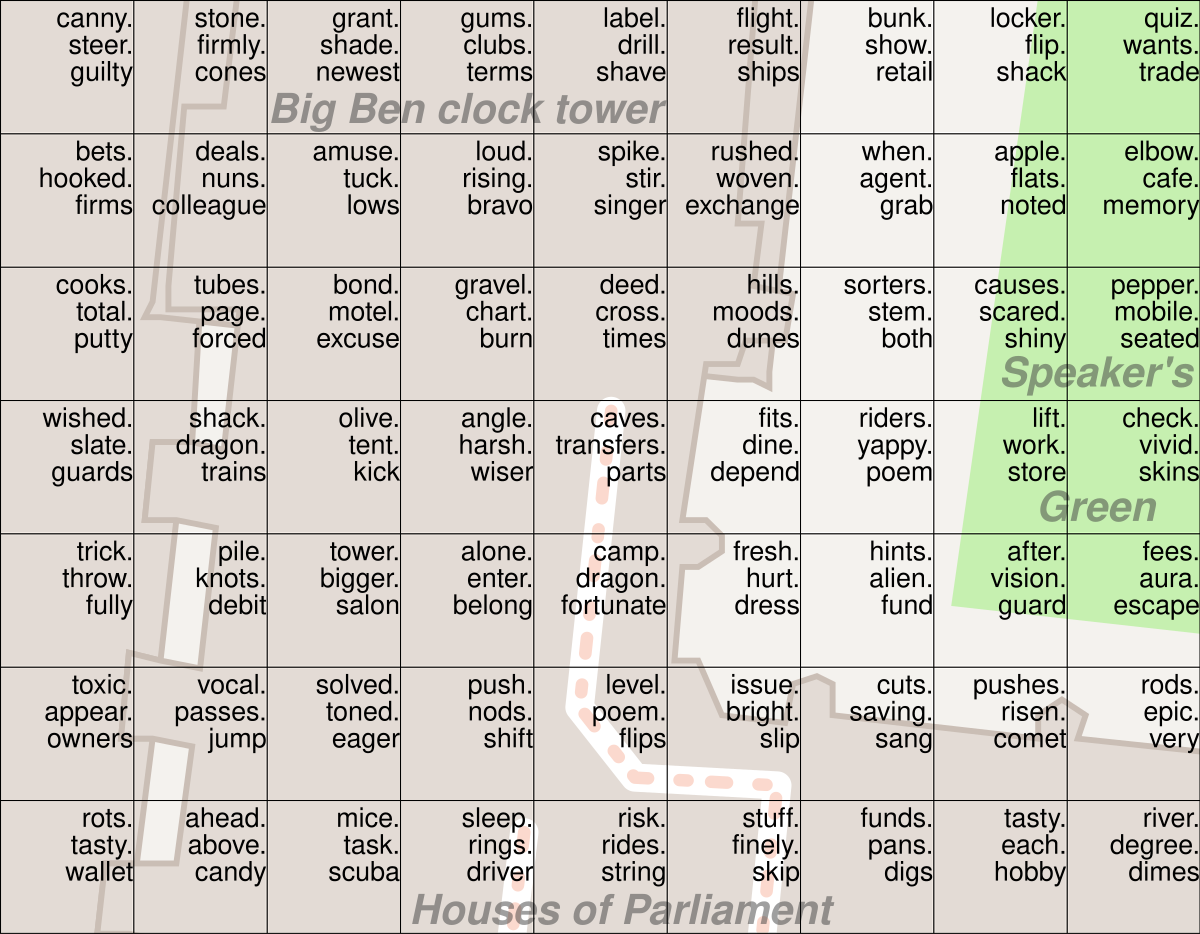

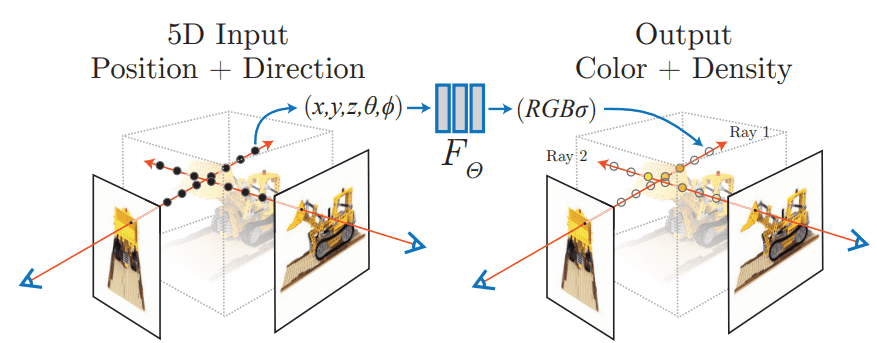

2. What Does a NeRF Really Do? Learning Light and Density in Volumetric Space

To create a smooth and realistic 3D scene, NeRF learns how light behaves in the environment. The network is trained to predict the color of light radiating in any direction from any point in 3D space.

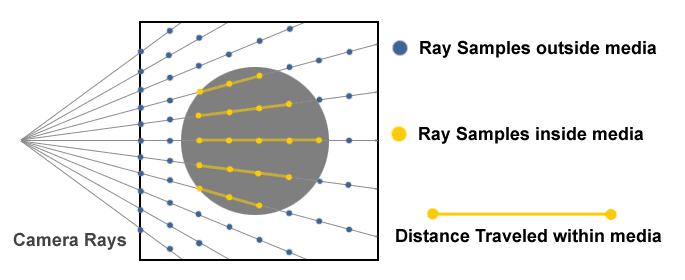

The process is conceptually simple. The network is queried with a 3D location and the direction from which that location is being viewed. For each query, it predicts two key values: the RGB color and the volume density, which indicates how solid or opaque that spot is. To render an image, NeRF uses differentiable volume rendering: virtual rays are cast through the scene, and color and density are sampled and accumulated along each ray to produce the final pixel color.
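The accumulation along a ray can be sketched in a few lines of plain Python. This is a minimal illustration, not a NeRF implementation: `toy_field` is a hypothetical stand-in for the trained network (a solid red sphere of radius 1 at the origin), and the sample count and ray bounds are arbitrary assumptions.

```python
import math


def toy_field(point, view_dir):
    """Hypothetical stand-in for the trained network.

    Returns (rgb, density) for a 3D point and a viewing direction.
    Here the "scene" is a solid red sphere of radius 1 at the origin.
    """
    x, y, z = point
    inside = x * x + y * y + z * z <= 1.0
    density = 10.0 if inside else 0.0
    return (1.0, 0.0, 0.0), density


def render_ray(origin, direction, near, far, n_samples, field):
    """Accumulate color along one ray with evenly spaced samples."""
    step = (far - near) / n_samples
    transmittance = 1.0  # fraction of light not yet absorbed
    pixel = [0.0, 0.0, 0.0]
    for i in range(n_samples):
        t = near + (i + 0.5) * step  # midpoint of the i-th segment
        point = tuple(o + t * d for o, d in zip(origin, direction))
        rgb, sigma = field(point, direction)
        alpha = 1.0 - math.exp(-sigma * step)  # opacity of this segment
        weight = transmittance * alpha
        for c in range(3):
            pixel[c] += weight * rgb[c]
        transmittance *= 1.0 - alpha
    return tuple(pixel), transmittance


# A ray through the sphere's center, and one that misses it entirely.
hit_color, hit_remaining = render_ray(
    (0.0, 0.0, -3.0), (0.0, 0.0, 1.0), 0.0, 6.0, 64, toy_field)
miss_color, miss_remaining = render_ray(
    (5.0, 0.0, -3.0), (0.0, 0.0, 1.0), 0.0, 6.0, 64, toy_field)

print("hit: ", hit_color, hit_remaining)
print("miss:", miss_color, miss_remaining)
```

The ray that passes through the sphere comes back almost fully red with very little light left over, while the ray that misses stays black with its transmittance untouched. Because every step is a smooth arithmetic operation, the same calculation can be differentiated during training, which is what "differentiable" refers to.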

Neural Radiance Fields. Source: Mildenhall et al.

A big part of why NeRF looks so realistic is that it explicitly includes the viewing direction. This allows it to capture complex, view-dependent optical effects, such as reflections and highlights, that change naturally as the camera moves. The result is a lifelike and dynamic scene that feels truly three-dimensional.
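A toy example helps show what view dependence means in practice. The function below is a hypothetical, hand-written stand-in for what a trained network might learn at a single glossy surface point: density is fixed, while color gains a highlight only when the camera lines up with the reflected light. The highlight model, colors, and constants are all assumptions for illustration.

```python
import math


def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def toy_surface_point(view_dir):
    """Hypothetical radiance at one glossy surface point.

    Density ignores the viewing direction; color does not.
    """
    normal = (0.0, 0.0, 1.0)    # surface faces straight up
    to_light = (0.0, 0.0, 1.0)  # light directly overhead
    base_rgb = (0.2, 0.3, 0.6)  # diffuse color, same from every angle
    density = 50.0              # opaque, independent of view_dir

    # Mirror the light direction about the normal, then compare it with
    # the direction from the surface back to the camera.
    n_dot_l = sum(n * l for n, l in zip(normal, to_light))
    reflected = tuple(2.0 * n_dot_l * n - l
                      for n, l in zip(normal, to_light))
    to_camera = tuple(-c for c in normalize(view_dir))
    alignment = max(0.0, sum(r * v for r, v in zip(reflected, to_camera)))
    highlight = alignment ** 32  # tight specular lobe

    rgb = tuple(min(1.0, c + 0.4 * highlight) for c in base_rgb)
    return rgb, density


overhead_rgb, overhead_density = toy_surface_point((0.0, 0.0, -1.0))
oblique_rgb, oblique_density = toy_surface_point((1.0, 0.0, -1.0))

print("looking straight down:", overhead_rgb)
print("looking at 45 degrees:", oblique_rgb)
```

The same point is noticeably brighter when viewed from directly above than from 45 degrees, yet its density is identical in both cases. A representation that stored only one color per point could not reproduce that change.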

Ray Marching. Source: Creating a Volumetric Ray Marcher by Ryan Brucks

3. Why Does NeRF Feel Magical for Mapping?

NeRF’s ability to create a navigable, continuous, and photorealistic 3D environment represents a breakthrough for geospatial visualization. It generates highly realistic images with a level of detail that is difficult to match with traditional 3D methods. The network models complex lighting as part of the scene, handling uneven or dramatic lighting conditions better than older techniques. Because the representation is continuous rather than built from polygons, NeRF avoids the blockiness or jagged edges that can appear in conventional polygon models.

Another advantage is how the network fills gaps in coverage. Traditional photogrammetry requires high overlap between photos to avoid holes, but NeRF can synthesize plausible, visually convincing views in areas with sparse coverage. This ability to fill in missing information is valuable for rapid content production. Additionally, NeRF’s view synthesis allows users to move smoothly through the reconstructed scene, generating new camera angles that were never captured in the original photos. This capability is crucial for immersive applications and modern digital twin experiences.

4. How Is NeRF Different From Photogrammetry?

For geospatial professionals, this distinction is critical. NeRF and photogrammetry are not competitors, but complementary tools. Traditional photogrammetry triangulates points to create explicit models, producing dense point clouds and exportable meshes. This approach prioritizes verifiable accuracy, which is essential for engineering and metric-grade analysis.

NeRF, by contrast, uses deep learning to represent the scene as a continuous function of volume density and color. Its strength is in rendering realism, not measurement. Meshes extracted from a NeRF are often noisy and unstable, and are not suitable for reliable geometric analysis. In practice, photogrammetry provides the accurate geometric backbone, while NeRF adds a high-fidelity visual layer on top. The future of mapping will focus on combining these two technologies to achieve both accuracy and photorealism.

5. Where Does NeRF Help in Real Geospatial Work?

NeRF is moving into mission-critical applications where high visual fidelity and immersive experiences are essential. One of its main uses is in building the “Digital City” layer of sophisticated urban digital twins. By capturing the detailed material and texture of urban scenes, NeRF ensures that virtual models are perceptually accurate, which is crucial for intelligence, analytics, and simulation tasks.

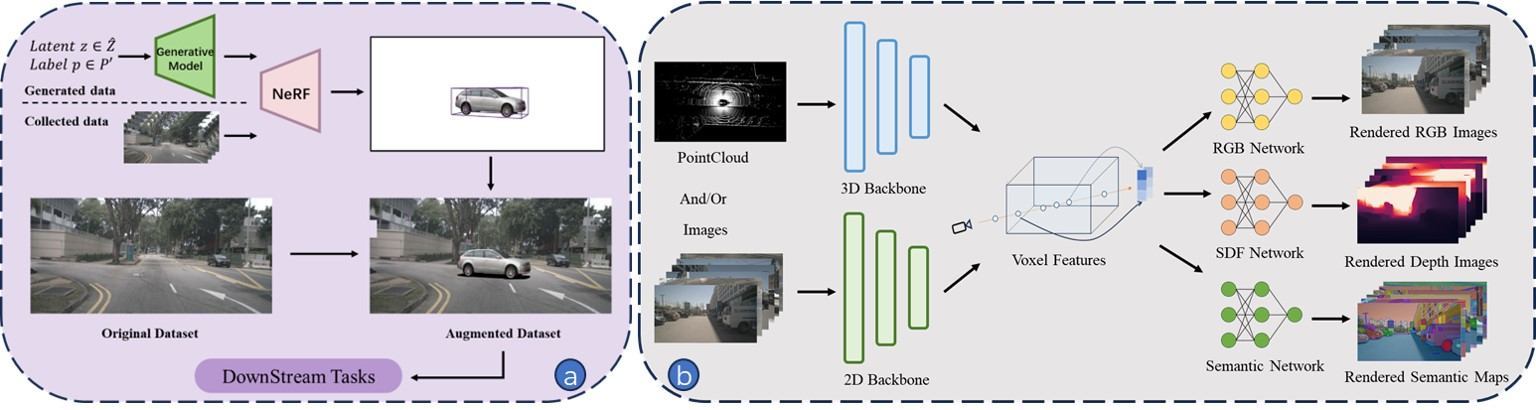

A particularly valuable use of NeRF is in creating synthetic but photorealistic training environments for advanced AI systems, including autonomous vehicles. Some companies, such as Wayve Technologies, are exploring large-scale neural rendering techniques to simulate real driving environments with far greater visual realism than traditional simulators. By learning how light behaves across real-world surfaces, NeRF can produce scenes that closely match the look and feel of actual streets, giving engineers richer and more realistic data for testing and training their models.

Overview of NeRF’s application in autonomous driving perception: (a) NeRF can be used for data augmentation by reconstructing scenes from either generated data or collected real data. (b) NeRF’s implicit representation and neural rendering can be integrated into model training to enhance performance. Source: Lei He et al.

NeRF methodologies are also being explored to improve diagnostic processes. For example, in building facade inspections, NeRF can integrate high-resolution RGB data with thermal infrared imaging. This combination enhances the visualization of anomalies such as thermal leaks or cracks by improving the alignment and localization of these defects in three-dimensional space. This ability to combine multiple data types into a seamless 3D representation demonstrates NeRF’s potential for both urban planning and engineering applications.

6. What Still Holds NeRF Back?

Despite its many advantages, NeRF still faces significant technical challenges, especially around computational requirements, scalability, and integration with existing tools. Historically, one of the biggest hurdles was the intense computational cost and extremely long training times. Training a single scene could take tens of hours or even days on powerful hardware. Although recent advancements have improved training speed, the volumetric rendering process itself still demands substantial computational resources.
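A quick calculation shows why rendering stays expensive even after training gets faster. The numbers below are assumptions picked for illustration (an 800 x 800 image and 192 samples per ray), and `network_queries` is a hypothetical helper, not part of any library.

```python
def network_queries(width, height, samples_per_ray):
    """Number of network evaluations needed to render one image."""
    return width * height * samples_per_ray


# Assumed values for illustration only.
per_image = network_queries(800, 800, 192)
per_second_at_30fps = per_image * 30

print(f"{per_image:,} queries per image")
print(f"{per_second_at_30fps:,} queries per second at 30 frames per second")
```

With these assumed settings, a single modest image already needs over a hundred million network evaluations, and interactive frame rates multiply that by the frame rate. This is the cost that faster sampling strategies and alternative representations try to reduce.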

NeRF was originally designed for small, bounded scenes, so applying it to massive outdoor or city-scale scenes introduces major scalability challenges. Researchers have developed specialized architectures, such as Block-NeRF and PatchNeRF, which divide the scene into multiple networks to manage complexity. Additionally, the way NeRF represents scenes creates a structural mismatch with existing GIS and engineering tools, which expect explicit geometry such as point clouds and meshes. Until standardized integration methods are developed, NeRF cannot be fully trusted for metric-grade analysis.
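The idea of dividing a large scene among several networks can be sketched as follows. This is a simplified illustration under stated assumptions, not the published method of any particular architecture: each block is represented only by a 2D center point, blocks within a chosen radius of the camera are selected, and their outputs would be blended with inverse-distance weights. The function name, radius, and exponent are hypothetical.

```python
import math


def block_weights(camera_xy, block_centers, radius, power=2.0):
    """Return {block_index: blend_weight} for blocks near the camera.

    Only blocks whose center lies within `radius` contribute, and
    closer blocks receive larger weights. Weights sum to 1.
    """
    nearby = {}
    for index, center in enumerate(block_centers):
        distance = math.dist(camera_xy, center)
        if distance <= radius:
            nearby[index] = distance
    if not nearby:
        return {}

    # A camera sitting exactly on a block center uses that block alone,
    # which also avoids dividing by zero below.
    for index, distance in nearby.items():
        if distance == 0.0:
            return {index: 1.0}

    raw = {index: distance ** -power for index, distance in nearby.items()}
    total = sum(raw.values())
    return {index: weight / total for index, weight in raw.items()}


# Three hypothetical blocks, 100 m apart, and a camera near the first one.
centers = [(0.0, 0.0), (100.0, 0.0), (0.0, 100.0)]
weights = block_weights((25.0, 0.0), centers, radius=80.0)
print(weights)
```

In a full system, each selected block would render its own image of the view and the images would be combined using these weights. Only the networks near the camera need to be loaded at any moment, which is what keeps city-scale scenes manageable.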

7. What Might the Future Look Like?

The future of Neural Radiance Fields is focused on faster performance and real-time applications. Computational barriers are falling rapidly. For example, NVIDIA’s Instant-NGP has dramatically accelerated the training process. With faster training, attention is now shifting toward rendering speed, which has led to the development of techniques like 3D Gaussian Splatting (3DGS). This method represents scenes using explicit Gaussian primitives, allowing high frame-rate rendering that is essential for real-time virtual reality and interactive applications.
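To give a feel for how explicit Gaussian primitives are turned into a pixel, here is a heavily simplified sketch. Real 3D Gaussian Splatting projects anisotropic 3D Gaussians to the screen and rasterizes them on the GPU; the version below assumes the Gaussians are already projected to 2D, are circular, and are described by hypothetical dictionary fields (`center`, `depth`, `sigma`, `opacity`, `rgb`).

```python
import math


def splat_pixel(pixel_xy, gaussians):
    """Blend already-projected 2D Gaussians at one pixel, front to back."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # how much of the background is still visible
    for g in sorted(gaussians, key=lambda item: item["depth"]):
        dx = pixel_xy[0] - g["center"][0]
        dy = pixel_xy[1] - g["center"][1]
        # Contribution falls off smoothly with distance from the center.
        falloff = math.exp(-(dx * dx + dy * dy) / (2.0 * g["sigma"] ** 2))
        alpha = g["opacity"] * falloff
        for c in range(3):
            color[c] += transmittance * alpha * g["rgb"][c]
        transmittance *= 1.0 - alpha
    return tuple(color)


# Two hypothetical splats covering the same pixel, listed far-first to
# show that depth sorting, not list order, decides what is in front.
splats = [
    {"center": (10.0, 10.0), "depth": 2.0, "sigma": 3.0,
     "opacity": 1.0, "rgb": (0.0, 0.0, 1.0)},
    {"center": (10.0, 10.0), "depth": 1.0, "sigma": 3.0,
     "opacity": 0.5, "rgb": (1.0, 0.0, 0.0)},
]

print(splat_pixel((10.0, 10.0), splats))
```

The half-transparent red splat in front lets half of the opaque blue splat behind it show through. The blending rule mirrors the per-ray accumulation used in volume rendering, but here each pixel only visits the handful of primitives that overlap it instead of querying a network many times along a ray, which is one reason this style of rendering can reach high frame rates.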

Looking ahead, professional 3D mapping will likely combine NeRF and photogrammetry in a hybrid workflow. Photogrammetry will continue to provide an accurate geometric framework, while NeRF or 3DGS will supply the hyper-realistic visual layer. Companies like Pix4D, a leader in photogrammetry software, are exploring this integration. This approach will enable cloud-based tools where users can generate and stream NeRF scenes like high-definition video on standard machines, opening the door to instant, immersive 3D experiences.

Try It Yourself: Resources for Understanding Radiance Fields

The best way to understand NeRF is to experiment with it. For the easiest start, cloud-hosted platforms like Luma AI allow quick generation of photorealistic 3D scenes from uploaded smartphone images or video. If you have a strong GPU and prefer to run things locally, the open-source Nerfstudio framework and NVIDIA’s Instant-NGP are widely used options for fast NeRF training. Video tutorials, such as this guide, make getting started easier.

For those who want to dive deeper, minimalist approaches and tutorials explain the core algorithm in detail. Finally, exploring 3D Gaussian Splatting is highly recommended for understanding the current state of real-time, high-performance rendering.

NeRF marks an important shift in how we create and experience 3D content. It pushes visual realism to a level that traditional methods rarely reach, bringing digital environments much closer to how we see the real world. For the geospatial field, the key point is that NeRF expands what is possible rather than replacing trusted, metric-grade mapping techniques. When NeRF is used for cinematic visualization and immersive digital twin experiences, and photogrammetry provides the accurate geometric backbone, the result is a new class of hybrid 3D products. These models are both precise and visually convincing, offering a richer and more lifelike way to understand complex spaces. The momentum behind this technology is only growing, which raises an exciting question: how far can these hybrid workflows go as we push toward truly real-time, city-scale digital worlds?

