Maxar-owned commercial space imagery company DigitalGlobe produces over 1 billion square kilometers of satellite imagery every year. The Landsat Earth observation program, operated jointly by NASA and USGS, has more than 8 million scenes in its archive. Even smallsat manufacturer Planet’s imagery archive has crossed the 7-petabyte mark and is growing by the day.

Clearly, the geospatial industry now produces data at a scale far beyond what a handful of analysts, sitting at their computers and trying to make sense of the pictures on their screens, can handle. It’s a task best left to machines.

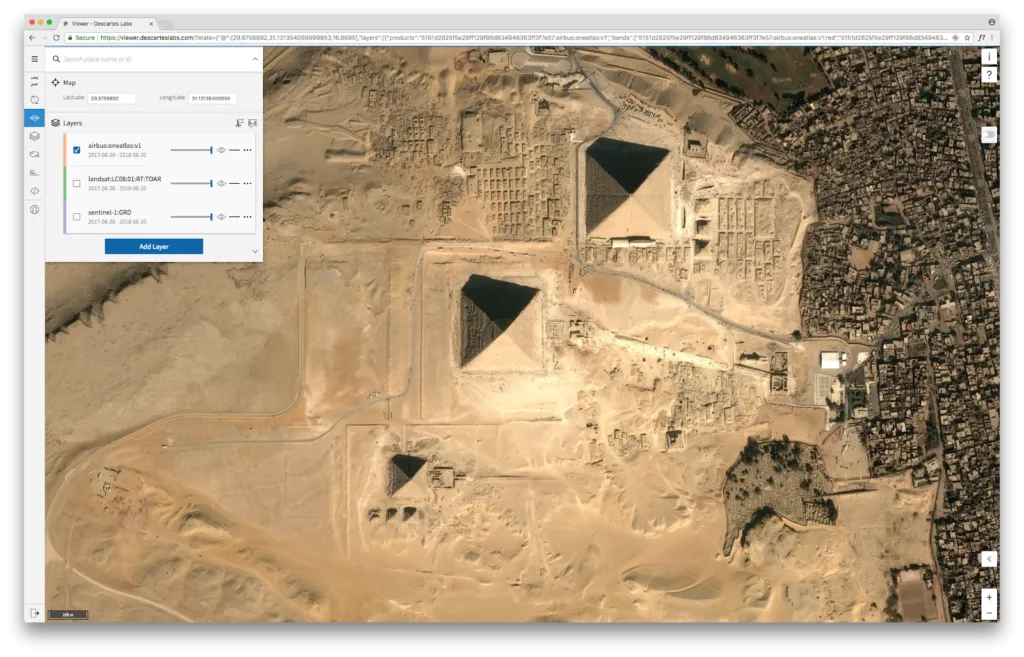

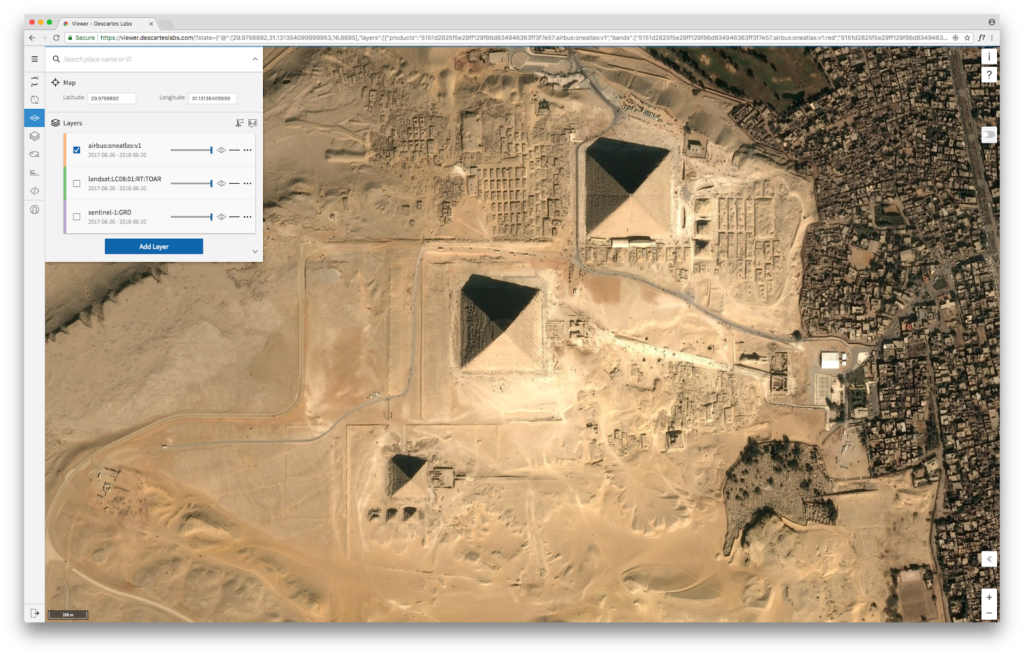

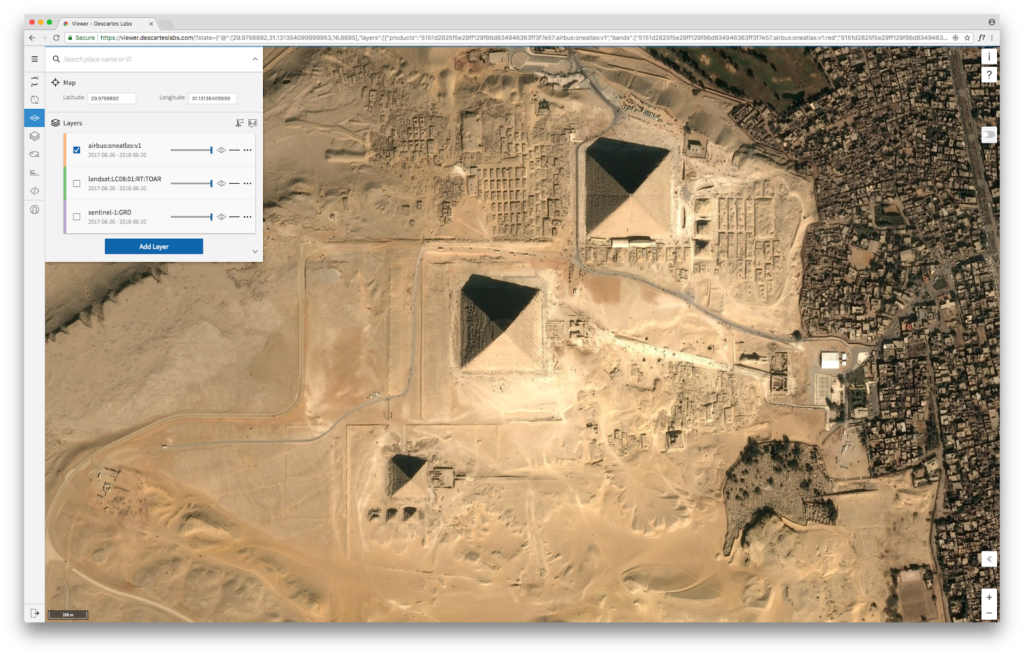

And this is where Santa Fe, New Mexico-based startup Descartes Labs comes into the picture. The geospatial analytics firm uses machine learning to build algorithms that can detect objects and patterns hidden inside hundreds of thousands of satellite images.

To take an oversimplified example, if you wanted to map all the buildings in a given geographic region, the Descartes Labs platform could do it for you in a heartbeat. And we need machines to do this for us because even the best open-source datasets available today are simply not complete. The largest open-source mapping dataset, OpenStreetMap (OSM), is populated by human volunteers, which means that if the community is not active in a particular area, you may be left without data for that neighborhood. This is especially true in developing nations, where the OSM community is far less active than in Europe or the United States.

But machine learning alone does not make Descartes Labs unique: other players in the industry offer pretty impressive analysis of geospatial datasets using computer vision and other artificial intelligence techniques.


Where Descartes Labs really shines is in its approach to building a platform that provides quick and uniform access to mammoth satellite imagery datasets.

Even with free, openly available government sources like Landsat or ESA’s Sentinel, you can still run into trouble stitching images together, because they may be tiled differently or registered differently. The Descartes Labs platform automates this process completely, leaving you with one less mind-numbing step to deal with.
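To make the stitching step a little more concrete, here is a minimal Python sketch of only the simplest case, assuming the tiles have already been reprojected to the same grid and resolution so that just their pixel offsets differ. The tile layout, the `mosaic` function, and the "first valid pixel wins" rule are hypothetical illustrations, not Descartes Labs’ actual pipeline, which would also have to handle reprojection and resampling.

```python
from typing import List, Optional, Tuple

# None marks a no-data pixel (e.g. outside a scene's footprint).
Pixel = Optional[float]
# Hypothetical tile format: (row_offset, col_offset, pixels) on a shared grid.
Tile = Tuple[int, int, List[List[Pixel]]]


def mosaic(tiles: List[Tile], height: int, width: int) -> List[List[Pixel]]:
    """Paste co-registered tiles onto one canvas; the first valid pixel wins."""
    canvas: List[List[Pixel]] = [[None] * width for _ in range(height)]
    for row_off, col_off, pixels in tiles:
        for r, line in enumerate(pixels):
            for c, value in enumerate(line):
                R, C = row_off + r, col_off + c
                # Skip no-data pixels and anything falling outside the canvas.
                if value is None or not (0 <= R < height and 0 <= C < width):
                    continue
                if canvas[R][C] is None:  # keep whatever was placed first
                    canvas[R][C] = value
    return canvas


if __name__ == "__main__":
    tiles = [(0, 0, [[1.0, 2.0], [3.0, None]]), (1, 1, [[9.0, 5.0], [6.0, 7.0]])]
    for row in mosaic(tiles, 3, 3):
        print(row)
```

The hard part in practice is getting tiles onto that shared grid in the first place; once they are there, merging reduces to an overlap rule like the one above.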

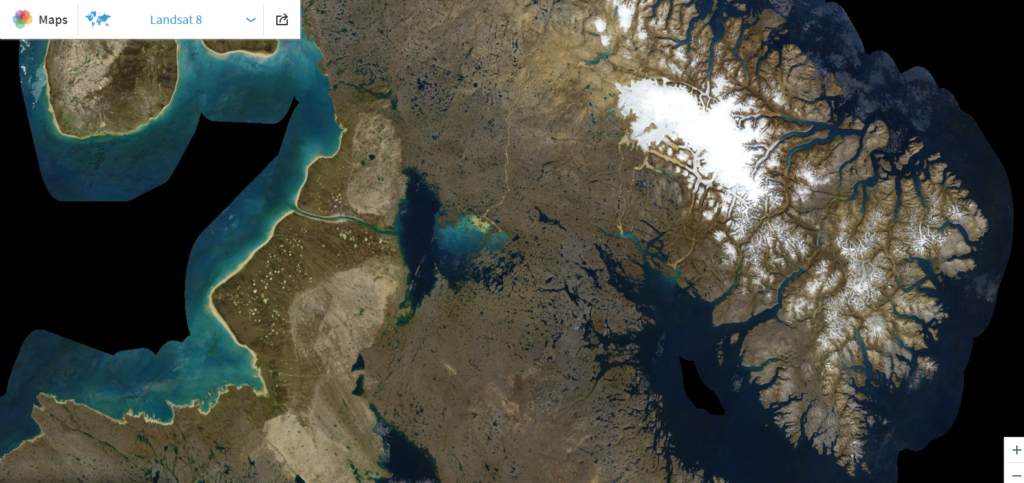

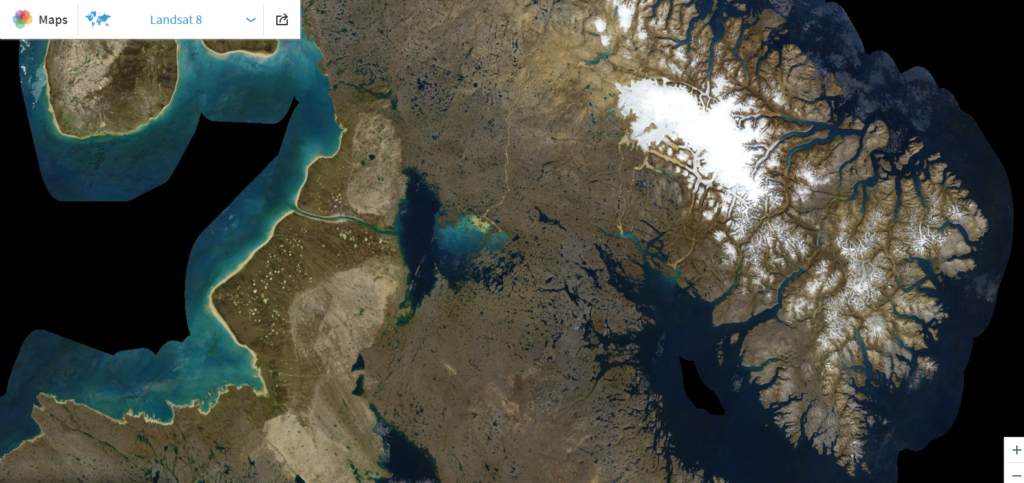

Descartes Labs has created three distinct global composites, from Landsat 8, Sentinel-1, and Sentinel-2, each built around that satellite’s unique image resolution and frequency bands.

The startup also enriches every image uploaded to its platform (which runs on Google Cloud, by the way) with extensive metadata. This means you don’t have to waste time combing through giant databases looking for just the right images.
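As a rough illustration of why rich metadata saves time, the sketch below filters a catalog of scene records by product, date range, cloud cover, and area of interest, then ranks the matches from clearest to cloudiest. The `Scene` fields, the product labels, and the `search` function are hypothetical; this is not the Descartes Labs API.

```python
from dataclasses import dataclass
from datetime import date
from typing import Iterable, List, Tuple

# Bounding box as (min_lon, min_lat, max_lon, max_lat) in degrees.
BBox = Tuple[float, float, float, float]


@dataclass(frozen=True)
class Scene:
    """Hypothetical metadata record attached to one ingested image."""
    scene_id: str
    product: str           # hypothetical labels, e.g. "landsat-8", "sentinel-2"
    acquired: date
    cloud_fraction: float  # 0.0 = clear, 1.0 = fully cloudy
    bbox: BBox


def intersects(a: BBox, b: BBox) -> bool:
    """True if two lon/lat boxes overlap (ignores antimeridian wrap-around)."""
    return a[0] <= b[2] and b[0] <= a[2] and a[1] <= b[3] and b[1] <= a[3]


def search(scenes: Iterable[Scene], product: str, aoi: BBox,
           start: date, end: date, max_cloud: float) -> List[Scene]:
    """Return scenes matching every metadata filter, clearest first."""
    hits = [
        s for s in scenes
        if s.product == product
        and start <= s.acquired <= end
        and s.cloud_fraction <= max_cloud
        and intersects(s.bbox, aoi)
    ]
    return sorted(hits, key=lambda s: s.cloud_fraction)
```

Because every filter runs against small metadata fields rather than the pixels themselves, a query like this stays cheap even when the underlying imagery runs to petabytes.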

Descartes Labs has already processed over 11 petabytes of compressed data, and almost 9 terabytes of new data are added to the platform daily. Specifically, these guys offer the complete library of satellite data from the Landsat and Sentinel missions, as well as the entire Airbus OneAtlas catalog. Beyond satellite data, the startup has interpolated NOAA’s Global Surface Summary of the Day (GSOD) dataset, turning individual weather data points into usable rasters on its platform.
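The article does not say which interpolation method Descartes Labs applies to the NOAA data. As one common option, here is a minimal inverse distance weighting (IDW) sketch that turns scattered point readings into a grid of cell values. The station tuple format, the simple grid layout, and the default power of 2 are assumptions made for illustration.

```python
import math
from typing import List, Tuple

# Hypothetical station reading: (x, y, value), in the same units as the grid.
Station = Tuple[float, float, float]


def idw_raster(stations: List[Station], width: int, height: int,
               cell_size: float = 1.0, power: float = 2.0) -> List[List[float]]:
    """Interpolate point readings onto a height x width grid with IDW.

    Each cell gets a weighted average of all station values, with weights
    1 / distance**power measured from the cell centre. For simplicity, y
    grows with the row index (no geographic projection is applied).
    """
    if not stations:
        raise ValueError("need at least one station")
    grid: List[List[float]] = []
    for row in range(height):
        y = (row + 0.5) * cell_size  # centre of this row's cells
        cells: List[float] = []
        for col in range(width):
            x = (col + 0.5) * cell_size
            num = den = 0.0
            exact = None
            for sx, sy, value in stations:
                d = math.hypot(x - sx, y - sy)
                if d == 0.0:
                    exact = value  # cell centre sits exactly on a station
                    break
                w = 1.0 / d ** power
                num += w * value
                den += w
            cells.append(exact if exact is not None else num / den)
        grid.append(cells)
    return grid


if __name__ == "__main__":
    # Two stations; the middle cell is equidistant, so it gets their average.
    print(idw_raster([(0.5, 0.5, 10.0), (2.5, 0.5, 20.0)], width=3, height=1))
```

Nearer stations dominate each cell’s value, and raising `power` sharpens that effect. A real pipeline would more likely work in projected coordinates, limit each cell to nearby stations, or use a different method such as kriging.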

If you’re not impressed yet, wait till you read about how researchers from Los Alamos National Laboratory used the Descartes Labs platform to track the spread of dengue fever in Brazil, or how DARPA could forecast food security issues in the Middle East and North Africa by analyzing and monitoring wheat crops with the company’s tools.

The company is now rolling out a feature that lets users upload their own data to the platform to create unique products. This data will be protected in its own “sandbox”, we are told, and integrated with the existing catalog to make fusing datasets smooth.

The company sums it up this way: “We now have the ability to understand and learn from commodity supply chains as living, organic systems. Businesses can see the relationships and impacts of changes across the globe, looking for signals and evidence of real-world trends. We can unlock answers to the world’s most intractable problems connected directly to immediate business and industry challenges. Things that affect us all, like deforestation and sustainable agriculture, can be confronted by delivering a clear view, a way to generate solutions and get ahead of problems with predictive analytics.”