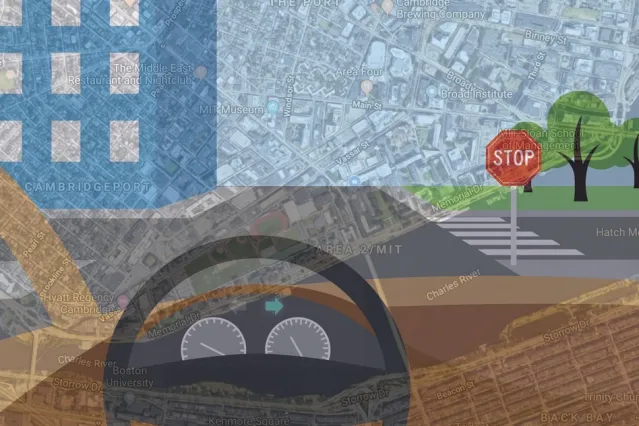

Courtesy: Chelsea Turner

More than 60 companies in the United States alone are developing technologies for self-driving cars. While some are rethinking maps at an unprecedented centimeter-level resolution, others are finding ways to make the sensor rig affordable for consumers. Meanwhile, a team of researchers at the Massachusetts Institute of Technology (MIT) is trying to bypass all the fuss by getting autonomous vehicles to mimic human driving patterns, using only simple GPS maps and video camera feeds.

Human drivers can easily navigate new, unfamiliar places armed with nothing more than a basic map. The driverless cars currently being tested, by contrast, rely on computationally intensive, meticulously labeled maps. These maps, built from LiDAR scans, are so massive that storing just the city of San Francisco takes 4,000 gigabytes of data.

MIT says it can get the job done with far less. The maps used by its system, called Variational End-to-End Navigation and Localization, cover the entire world in just 40 gigabytes of data.

So, how does this thing work?

MIT's system uses a type of machine learning model commonly used for image recognition. The model learns by observing a human driver steer in real-world conditions. To explain how it works, Daniela Rus, director of the Computer Science and Artificial Intelligence Laboratory (CSAIL) and co-author of the research paper, describes how the system would handle a T-shaped intersection.

“Initially, at a T-shaped intersection, there are many different directions the car could turn,” Rus says. “The model starts by thinking about all those directions, but as it sees more and more data about what people do, it will see that some people turn left and some turn right, but nobody goes straight. Straight ahead is ruled out as a possible direction, and the model learns that, at T-shaped intersections, it can only move left or right.”
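The intuition in Rus's example can be illustrated with a toy sketch. MIT's actual system learns from camera images with a neural network; the code below is not its method. It only mimics the counting idea: every direction starts out equally likely, and directions that human drivers never take fade away as observations pile up. The three discrete directions, the uniform pseudo-count prior, the 5% cutoff, and the function names `direction_probabilities` and `plausible_directions` are all hypothetical choices made for illustration.

```python
from collections import Counter

# Hypothetical set of candidate directions at a T-shaped intersection.
DIRECTIONS = ("left", "straight", "right")


def direction_probabilities(observed_turns, prior_count=1.0):
    """Estimate P(direction) from observed human turns.

    Every direction starts with the same pseudo-count (`prior_count`), so with
    no data the model treats all directions as equally likely.
    """
    counts = Counter(observed_turns)
    totals = {d: counts[d] + prior_count for d in DIRECTIONS}
    norm = sum(totals.values())
    return {d: totals[d] / norm for d in DIRECTIONS}


def plausible_directions(probs, threshold=0.05):
    """Keep only directions whose estimated probability exceeds `threshold`."""
    return [d for d in DIRECTIONS if probs[d] > threshold]


if __name__ == "__main__":
    # Before seeing any drivers, all three directions look possible.
    print(plausible_directions(direction_probabilities([])))
    # -> ['left', 'straight', 'right']

    # Hypothetical observations: drivers turn left or right, never straight.
    turns = ["left"] * 30 + ["right"] * 25
    probs = direction_probabilities(turns)
    print({d: round(p, 3) for d, p in probs.items()})
    print(plausible_directions(probs))
    # -> ['left', 'right']
```

After 55 made-up observations, "straight" keeps only its single pseudo-count (1/58, under 2%), so it drops below the cutoff and is ruled out, much as Rus describes.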

The researchers tested the system on randomly chosen routes in Massachusetts, where it successfully recognized distant stop signs and breaks in the lines along the side of the road as signs of an upcoming intersection. Each time it approaches an intersection, the system draws on the steering commands it has learned to make a decision, much as a human driver would.

Learn more about the technology in the video below, and tell us how successful you think it will be in making self-driving cars a reality in the near future.