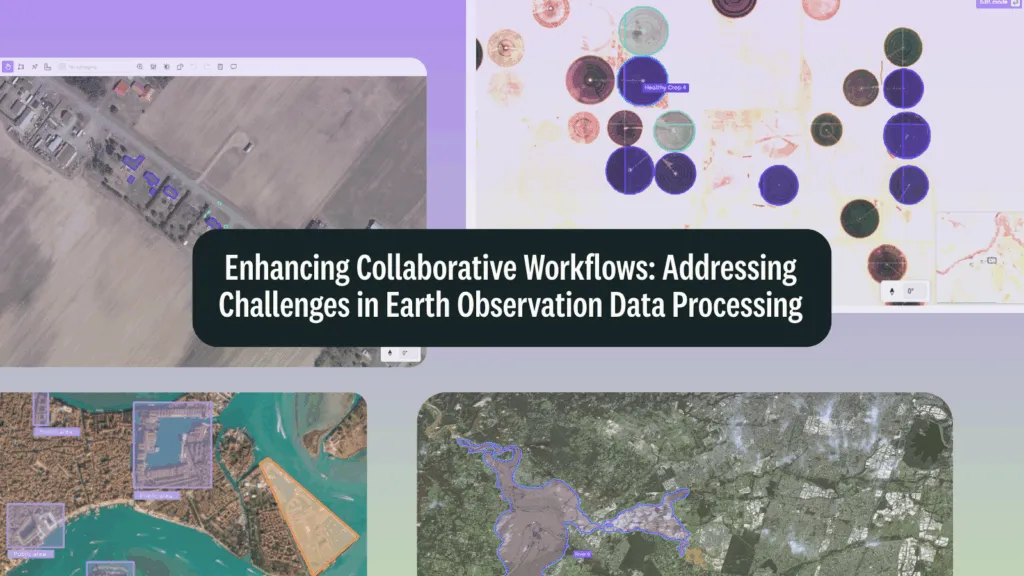

The field of Earth Observation (EO) stands at a critical inflection point. Following recent discussions at the Geospatial World Forum, our comprehensive new report titled “Addressing Earth Observation Data Annotation Challenges” dives deep into the fundamental hurdles preventing us from unlocking the full value of our orbital perspective.

Our planet is now observed by an ever-expanding constellation of satellites generating an unprecedented volume of data. NASA’s EOSDIS repository alone has accumulated over 100 petabytes and is projected to expand nearly sixfold to reach 600 petabytes within five years. With satellites generating over 2 petabytes of data daily from sources like Sentinel and Landsat, processing challenges have become increasingly complex, as highlighted in Geospatial World’s analysis. This wealth of information offers transformative potential across numerous applications – from climate monitoring to urban planning.

However, the path from raw satellite imagery to actionable insight is fraught with significant challenges, particularly in creating high-quality annotated datasets essential for training sophisticated machine learning models. As noted in our report, traditional approaches to data processing have become impractical as the sheer scale of information continues to grow exponentially.

Understanding the “Four Vs” of Big EO Data

Our report frames the challenges through the lens of the “Four Vs” of Big EO Data, providing a comprehensive framework for understanding the multifaceted nature of these obstacles:

Volume: The Scale Challenge

High-resolution satellite scenes commonly exceed 30 MB per image, and projects covering substantial geographic regions quickly grow into multi-gigabyte or even terabyte-scale datasets. These large files create significant performance bottlenecks in traditional annotation systems, resulting in:

- Sluggish loading times

- Navigation difficulties

- Frequent interface lags

- Inability to effectively examine details by zooming or panning

When annotators need to examine details by zooming into specific regions, conventional tools often struggle to maintain responsiveness. The resulting delays directly impact both the efficiency of the annotation process and the quality of the resulting training data.
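One common way to keep zooming and panning responsive is to split a large scene into fixed-size tiles and load only the tiles that intersect the current viewport. The sketch below illustrates that idea in plain Python. The function names `iter_tiles` and `tiles_in_view` and the 512-pixel tile size are assumptions made for illustration; they are not taken from the report or from any particular annotation tool.

```python
from typing import Iterator, List, Tuple

# A window is (col_off, row_off, width, height) in pixel units.
Window = Tuple[int, int, int, int]


def iter_tiles(width: int, height: int, tile: int = 512) -> Iterator[Window]:
    """Yield non-overlapping windows that together cover the whole raster."""
    if tile <= 0:
        raise ValueError("tile must be positive")
    for row_off in range(0, height, tile):
        for col_off in range(0, width, tile):
            # Edge tiles are clipped so they never extend past the raster.
            yield (
                col_off,
                row_off,
                min(tile, width - col_off),
                min(tile, height - row_off),
            )


def tiles_in_view(width: int, height: int, view: Window, tile: int = 512) -> List[Window]:
    """Return only the tiles that intersect the viewport (x, y, w, h)."""
    vx, vy, vw, vh = view
    return [
        t
        for t in iter_tiles(width, height, tile)
        if t[0] < vx + vw and t[0] + t[2] > vx
        and t[1] < vy + vh and t[1] + t[3] > vy
    ]
```

With these assumptions, a 10,000 by 10,000 pixel scene splits into 400 tiles, while a 1024 by 768 viewport in the top-left corner touches only 4 of them, which is why tiled access scales far better than loading the full file.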

Velocity: The Data Stream Challenge

Beyond just the volume, the rate at which new Earth Observation data arrives creates processing challenges. As satellite technology advances, sensors capture imagery with increasingly frequent revisit times, generating continuous streams requiring near-real-time processing.

The conventional approach of downloading complete datasets to local systems before beginning analysis has become impractical due to transmission bottlenecks, introducing delays that undermine the value of time-sensitive applications. Recent research points to “a need for datasets specifically tailored for spatio-temporal ML methodologies, aiming to train ML methods to forecast and explain the impacts of extreme events,” according to Nature Scientific Data.

Variety: The Heterogeneity Challenge

Earth Observation data encompasses an extremely diverse ecosystem of information sources across multiple sensor types – optical, radar, thermal, and hyperspectral – with widely varying:

- Spatial resolutions (from sub-meter to kilometers)

- Temporal frequencies

- Spectral bands

- File formats (GeoTIFF, netCDF, HDF5, and proprietary formats)

This variety creates substantial obstacles when integrating or comparing information across observation systems, and it presents significant preprocessing challenges for machine learning engineers. Satellites provide imagery at resolutions ranging from 500 m (MODIS) to sub-meter (WorldView), and each source comes with file formats that require specialized processing pipelines, as detailed in this Geospatial World analysis.
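To compare sources with different spatial resolutions, a typical preprocessing step is to bring them onto a common grid. The following is a minimal sketch of one such step: aggregating a fine grid to a coarser one by averaging square blocks. It assumes the two grids are already aligned and that the resolution ratio is a whole number; the name `block_mean` is hypothetical. Real pipelines usually also need reprojection between coordinate reference systems, which is out of scope here.

```python
from typing import List

Grid = List[List[float]]


def block_mean(fine: Grid, factor: int) -> Grid:
    """Downsample a 2D grid by averaging factor x factor blocks of cells."""
    if factor <= 0:
        raise ValueError("factor must be positive")
    rows, cols = len(fine), len(fine[0])
    if rows % factor or cols % factor:
        raise ValueError("grid dimensions must be multiples of factor")

    coarse: Grid = []
    for r in range(0, rows, factor):
        out_row = []
        for c in range(0, cols, factor):
            # Collect every fine cell that falls inside this coarse cell.
            block = [
                fine[r + dr][c + dc]
                for dr in range(factor)
                for dc in range(factor)
            ]
            out_row.append(sum(block) / len(block))
        coarse.append(out_row)
    return coarse
```

The scale gap can be large: at an assumed 0.5 m resolution, a single 500 m pixel covers 1,000 by 1,000 fine pixels, so the choice of aggregation method has a real effect on what the coarse value represents.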

According to researchers in Earth Science Informatics, “Without a well-defined, organized structure they face problems in finding and reusing existing data, and as consequence this may cause data inconsistency and storage problems.” This technical complexity includes dealing with specialized reference systems and scaling up processing to large amounts of data.

Veracity: The Quality Challenge

The trustworthiness and reliability of Earth Observation data present another layer of challenge. Raw satellite imagery is susceptible to numerous quality issues:

- Sensor imperfections

- Atmospheric conditions

- Geometric distortions during acquisition

- Artifacts introduced during processing

When creating training datasets for machine learning applications, additional veracity challenges emerge, including class imbalance problems and difficulties in obtaining sufficient high-quality annotated examples. These factors can significantly impact the reliability of analytical results.
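Class imbalance is often handled by weighting rare classes more heavily during training. The sketch below computes inverse-frequency weights from a list of labels. It is one widely used heuristic rather than a method prescribed by the report, and the function name and the example class names are assumptions for illustration.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence


def inverse_frequency_weights(labels: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Weight each class by total / (n_classes * class_count).

    A perfectly balanced dataset gives every class a weight of 1.0;
    rarer classes receive proportionally larger weights.
    """
    counts = Counter(labels)
    total = len(labels)
    n_classes = len(counts)
    return {cls: total / (n_classes * n) for cls, n in counts.items()}
```

For example, with 90 "background" labels and 10 "building" labels, the building class receives a weight of 5.0 and the background class a weight of about 0.56, so errors on the rare class count roughly nine times as much.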

The International Organization for Standardization (ISO) defines five quantitative spatial data quality elements: completeness, logical consistency, positional accuracy, temporal accuracy, and thematic accuracy, as discussed in PLOS Neglected Tropical Diseases. However, the same research found that “extensive use is made of EO products in the study of NTD epidemiology; however, the quality of these products is usually given little explicit attention.”

Recent research published in Nature Communications highlights that “ignoring the spatial distribution of the data led to the deceptively high predictive power of the model due to spatial autocorrelation (SAC), while appropriate spatial model validation methods revealed poor relationships.” This means that unaddressed spatial dependencies between training and test datasets significantly affect model generalization capabilities.
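One way to reduce this kind of leakage is to split data by spatial block rather than by individual sample, so that nearby points never end up on both sides of the split. The sketch below is a simplified illustration of that idea, not the validation procedure used in the cited study. The names `block_id` and `spatial_block_split`, the block size, and the `test_every` parameter are all assumptions.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) in any projected coordinate system


def block_id(x: float, y: float, block_size: float) -> Tuple[int, int]:
    """Return the index of the square block that contains the point."""
    return (math.floor(x / block_size), math.floor(y / block_size))


def spatial_block_split(
    points: Sequence[Point], block_size: float, test_every: int = 5
) -> Tuple[List[int], List[int]]:
    """Assign whole blocks to train or test and return sample indices.

    Every test_every-th occupied block (in sorted order) goes to the test
    set, so no block contributes samples to both sets.
    """
    blocks = sorted({block_id(x, y, block_size) for x, y in points})
    test_blocks = {b for i, b in enumerate(blocks) if i % test_every == 0}

    train: List[int] = []
    test: List[int] = []
    for idx, (x, y) in enumerate(points):
        target = test if block_id(x, y, block_size) in test_blocks else train
        target.append(idx)
    return train, test
```

Samples on either side of a block boundary can still be correlated, so in practice the block size is usually chosen to exceed the autocorrelation range, or a buffer zone is left between training and test blocks.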

Beyond the Four Vs: Additional Critical Challenges

The Precision Challenge

Annotating satellite imagery requires exceptional precision to accurately capture the diverse and often complex features present in Earth Observation data. Unlike everyday photographs, satellite imagery contains specialized geographical information and often requires domain expertise to interpret correctly.

Features like urban structures, agricultural fields, forest boundaries, or disaster impacts must be delineated with high accuracy to create training data that enables effective machine learning models. Without access to geographic coordinate systems, distance measurements, or tools designed specifically for geospatial contexts, annotators may struggle to accurately identify and annotate features of interest.
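To make the role of geographic context concrete, the sketch below converts a pixel position to map coordinates using a six-term affine geotransform (in the order used by GDAL) and measures the great-circle distance between two longitude/latitude points with the haversine formula. The function names and the example transform values are assumptions for illustration; the haversine result treats the Earth as a sphere, so it is an approximation.

```python
import math
from typing import Sequence, Tuple


def pixel_to_geo(col: float, row: float, gt: Sequence[float]) -> Tuple[float, float]:
    """Map a pixel position to map coordinates with an affine geotransform.

    gt follows the GDAL order: (x_origin, pixel_width, row_rotation,
    y_origin, column_rotation, pixel_height). Integer (col, row) values
    give the top-left corner of a pixel; add 0.5 to each for its center.
    """
    x = gt[0] + col * gt[1] + row * gt[2]
    y = gt[3] + col * gt[4] + row * gt[5]
    return x, y


def haversine_m(
    lon1: float, lat1: float, lon2: float, lat2: float, radius_m: float = 6371008.8
) -> float:
    """Great-circle distance in meters between two lon/lat points (degrees)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * radius_m * math.asin(math.sqrt(a))


# Hypothetical north-up image: origin at (10.0 E, 50.0 N), 0.001 degree pixels.
example_gt = (10.0, 0.001, 0.0, 50.0, 0.0, -0.001)
corner_a = pixel_to_geo(0, 0, example_gt)
corner_b = pixel_to_geo(100, 200, example_gt)
distance_m = haversine_m(corner_a[0], corner_a[1], corner_b[0], corner_b[1])
```

With this kind of mapping available, an annotation tool can report the real-world size of a drawn feature instead of only its size in pixels.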

Domain expertise is crucial, with some studies finding that misclassification occurs “because the labelers had misclassified what to identify as rubble in the images, which may have happened because the labeling process was undertaken by non-experts who lacked the necessary understanding,” as noted in our research.

The Multi-Spectral Challenge

One of the most powerful but challenging aspects of Earth Observation data is its multi-spectral nature. Unlike standard RGB photography, satellite sensors can capture information across numerous wavelength bands, including those outside the visible spectrum.

This multi-spectral data enables the analysis of invisible characteristics and conditions. For instance, in agriculture, different bands can indicate plant health, moisture content, or pest stress before such conditions are visible to the human eye, enabling early intervention. However, working with multi-spectral imagery presents significant challenges for annotation workflows, as annotators must be able to view and switch between different spectral bands to identify features that may be apparent in one wavelength but not in others.
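A well-known example of combining bands is the Normalized Difference Vegetation Index (NDVI), defined as (NIR - Red) / (NIR + Red), which is widely used as an indicator of vegetation vigor. The sketch below computes it per pixel on small nested lists. The function names are hypothetical, and returning `None` for pixels where both bands are zero is an assumed no-data convention.

```python
from typing import List, Optional

Band = List[List[float]]


def ndvi(nir: float, red: float) -> Optional[float]:
    """Return (NIR - Red) / (NIR + Red), or None when the sum is zero."""
    denom = nir + red
    if denom == 0:
        return None  # assumed convention for no-data pixels
    return (nir - red) / denom


def ndvi_grid(nir_band: Band, red_band: Band) -> List[List[Optional[float]]]:
    """Compute NDVI for every pixel of two equally sized band grids."""
    if len(nir_band) != len(red_band):
        raise ValueError("bands must have the same number of rows")
    return [
        [ndvi(n, r) for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir_band, red_band)
    ]
```

Values close to 1 generally indicate dense, healthy vegetation, while values near zero or below point to bare soil, built surfaces, or water. Displaying such an index alongside the raw bands is one way an annotation interface can surface features that are hard to see in RGB alone.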

Recent approaches recognize the importance of creating “harmonised datasets tailored for spatio-temporal ML methodologies,” according to Nature Scientific Data, and developing “cost-efficient resource allocation solutions… which are robust against common uncertainties that occur in annotating and extracting features on satellite images,” as highlighted by the IET Digital Library.

The integration of multiple data sources presents challenges in bias correction, with research from Springer noting that “if the biases are not corrected before data fusion we introduce further problems, such as spurious trends, leading to the possibility of unsuitable policy decisions.”

The Collaboration Challenge

Earth Observation projects often involve diverse stakeholders including government agencies, research institutions, non-governmental organizations, and private sector entities. In our report, we identify several barriers to seamless collaboration:

- Technical incompatibilities (diverse data formats, inconsistent metadata)

- Restrictive data policies limited by national laws or institutional regulations

- Intellectual property concerns regarding ownership of annotations

- Lack of governance frameworks establishing clear rules for data access

- Trust and awareness issues between potential collaborators

- Capacity and resource gaps, particularly for organizations in developing nations

These collaboration barriers directly impact annotation quality and efficiency, as teams struggle to coordinate efforts, share knowledge, and maintain consistent standards across different annotators and geographic regions.

Spatial-Temporal Complexity: A Fundamental Challenge

A critical aspect highlighted in recent research is the unique nature of spatiotemporal data mining. Earth observation data points are not independent and identically distributed, violating fundamental assumptions of classical machine learning approaches, according to research from NIH. Environmental processes exhibit dynamic variability across space and time domains, creating what researchers call “spatial heterogeneity and temporal non-stationarity.”

This intrinsic spatial-temporal complexity requires specialized methodologies. The workflow often requires extensive preprocessing stages, with some researchers proposing structured pipelines for “enhancing model accuracy, addressing issues like imbalanced data, spatial autocorrelation, prediction errors, and the nuances of model generalization and uncertainty estimation,” as highlighted in Nature Communications.

Key Requirements for Effective EO Data Processing

Our report highlights several fundamental requirements for addressing Earth Observation data challenges:

Technical Requirements

The increasing complexity of EO data demands specialized approaches to processing and annotation. According to our report, effective systems must address scalability concerns through optimized handling of massive imagery files, preserve geographic context during annotation, support multi-spectral data visualization, and enable quality control mechanisms that ensure data reliability.

Traditional image annotation software may not support direct use of geospatial metadata embedded within images, making it challenging for users to work with GeoTIFF files effectively, as noted in our analysis. A significant challenge is making Earth observation data “analysis-ready” – meaning properly preprocessed, structured, and formatted to enable direct analysis without extensive technical preparation.

Process Requirements

Beyond technical capabilities, our report emphasizes the importance of collaborative workflows that facilitate knowledge sharing between domain experts and annotators, standardized methods for quality assessment, and security frameworks that protect sensitive geospatial information.

These requirements align with established ISO standards for spatial data quality, which define quantitative elements including completeness, logical consistency, positional accuracy, temporal accuracy, and thematic accuracy.

Emerging Solutions and Hybrid Approaches

Researchers increasingly recognize the need for hybrid approaches that combine physical models with data-driven machine learning. Research published in Nature suggests that “the next step will be a hybrid modelling approach, coupling physical process models with the versatility of data-driven machine learning.”

This approach pairs the theoretical understanding from physics-based models with the pattern-recognition capabilities of machine learning, particularly for complex Earth system problems where deep learning methods can automatically extract spatial-temporal features.

Recent developments to address these challenges include:

- Data cube architectures that provide standardized access to analysis-ready data

- Specialized geospatial annotation platforms with coordinate reference system support

- Tools that enable visualization and annotation across multiple spectral bands

- Foundation models like SAM2 to accelerate annotation processes

- Quality control systems with consensus mechanisms and programmatic validation

- Spatiotemporal ML methodologies designed specifically for Earth observation data

- Reproducible data processing pipelines that combine multi-modal data
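As an illustration of the consensus-based quality control mentioned in the list above, the sketch below compares bounding boxes drawn by different annotators for the same object using intersection over union (IoU) and flags the object for review when any pair disagrees too much. The function names and the 0.5 threshold are assumptions; this is not a description of how any specific platform implements consensus.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def needs_review(boxes: Sequence[Box], threshold: float = 0.5) -> bool:
    """Flag an object when any two annotators' boxes overlap less than threshold."""
    return any(
        box_iou(boxes[i], boxes[j]) < threshold
        for i in range(len(boxes))
        for j in range(i + 1, len(boxes))
    )
```

Objects that pass the check can be accepted automatically, while flagged ones are routed to a domain expert, which concentrates scarce expert time on the ambiguous cases.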

Applications and Future Directions

Real-World Applications Highlighted in Our Report

Our report presents several case studies demonstrating the real-world impact of advanced geospatial data processing:

- Disaster Response: The xBD dataset project for building damage assessment from post-disaster satellite imagery, showcasing the importance of standardized annotation frameworks across different disaster types

- Environmental Mapping: The Global Ecosystems Atlas Project, creating comprehensive maps of marine, terrestrial, and freshwater ecosystems in the Maldives

- Agriculture: Crop monitoring applications utilizing multi-spectral data to detect plant health issues and pest infestations before they become visible to the human eye

These applications illustrate how overcoming EO annotation challenges translates to tangible benefits across critical domains.

Future Trends

Looking forward, our report identifies several emerging directions in geospatial data processing, including AI integration through initiatives like ESA’s Φ-lab, efforts to bridge the digital divide in EO capabilities, multi-modal data integration, edge computing approaches, and advanced privacy protections as imagery resolution continues to increase.

The Path Forward

The insights presented in our report underscore a fundamental truth: the future of Earth Observation depends on our ability to effectively manage and utilize the vast amounts of data we collect. By implementing the capabilities outlined in this research, organizations can overcome the critical challenges in geospatial data processing and annotation, creating higher-quality training datasets that enable more robust and effective machine learning models.

These advancements are not merely technical achievements but essential steps toward harnessing the power of Earth Observation to address some of humanity’s most pressing global challenges – from climate change monitoring and disaster response to sustainable agriculture and urban planning.

For a deeper dive into these challenges and solutions, our full “Addressing Earth Observation Data Annotation Challenges” report serves as a valuable guide for understanding the landscape of geospatial data processing and the essential capabilities required to transform the potential of Earth Observation into tangible impact.

About Kili Technology

Kili Technology began as an idea in 2018. Edouard d’Archimbaud, co-founder and CTO, was working at BNP Paribas, where he built one of the most advanced AI Labs in Europe from scratch. François-Xavier Leduc, our co-founder and CEO, knew how to take a powerful insight and build a company around it. While all the AI hype was on the models, they focused on helping people understand what was truly important: the data. Together, they founded Kili Technology to ensure data was no longer a barrier to good AI. By July 2020, the Kili Technology platform was live, and by the end of the year the first customers had renewed their contracts and the pipeline was full. In 2021, Kili Technology raised over $30M from Serena, Headline and Balderton.

Today Kili Technology continues its journey to enable businesses around the world to build trustworthy AI with high-quality data.