While Google Maps’ popular times and live busyness insights have been saving us from unnecessary stress and helping us make better plans since 2016, they have become an essential tool for many during the ongoing COVID-19 pandemic.

Google says that between March and May this year, engagement with these features increased by 50 percent as more people compared data to find the best ‘social distancing-friendly’ days and times to visit a store. This is why Google is now taking steps to do away with the extra tapping and scrolling typically required to check how busy a place is right now.

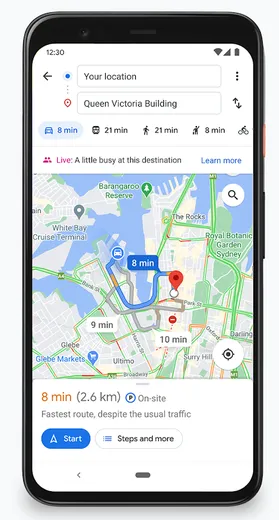

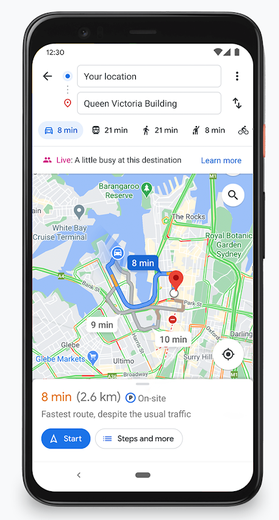

As you can see in the image here, live busyness information for your destination will be displayed directly on the screen when you’re getting directions on Google Maps.

Google is also on track to increase global coverage of live busyness data by five times compared to June 2020. This expansion includes more outdoor areas, such as beaches and parks, and essential places, like grocery stores, gas stations, laundromats, and pharmacies.

Understanding that the pandemic has changed what many businesses offer, Google has also been using AI to ensure that the information you see on Google Maps is up to date. Google Duplex, a system that enables people to have a natural conversation with computers, has been calling businesses to verify their updated hours of operation, delivery and pickup options, and store inventory information for in-demand products such as face masks, hand sanitizer, and disinfectant.

Google says this technology has enabled more than 3 million updates to Maps since April 2020. And soon, Google Maps users will also be able to contribute useful information, such as whether a business is taking safety precautions and whether customers are required to wear masks or make reservations.

Interestingly, Google Maps’ AR-powered Live View feature is also going to help users get around safely. How? Let’s say you’re walking around a new neighborhood, and one boutique in particular captures your attention. You’ll now be able to use Live View to learn quickly if the place is open, how busy it is, its star rating, and health and safety information, if available. Something like this: