Making Geospatial More Approachable Through Clear Documentation: Meet UP42’s Documentation Lead, Daria Lutchenko

Editor’s note: This article was written as part of EO Hub – a journalistic collaboration between UP42 and Geoawesome. Created for policymakers, decision-makers, geospatial experts and enthusiasts alike, EO Hub is a key resource for anyone trying to understand how Earth observation is transforming our world. Read more about EO Hub here.

The geospatial industry can seem overwhelming, filled with complex terms and concepts that often intimidate beginners. Clear and concise documentation is essential for making geospatial technology more approachable: it simplifies complex concepts so that users can quickly understand and use geospatial tools and data.

Daria Lutchenko works on this every day. As UP42’s Documentation Lead, her main goal is to explain things clearly, so that “even a beginner feels empowered to explore this beautiful world of geospatial data and the opportunities it presents.” Her words, not ours!

We spoke to Daria to find out more about democratizing access to geospatial data and analytics at UP42, and the role that documentation plays:

Q: Thanks for joining us, Daria! Could you tell us more about your journey to becoming Documentation Lead at UP42? What inspired you to go down the technical writing path?

A: No one ever knows what technical writing is when I tell them I’m a technical writer. For me, it was something I stumbled into by chance. But when I learned more about technical writing, I realized it was something I’d been doing in my head my whole life—like thinking of better ways to structure a board game rulebook, or wondering why they crammed ten sentences into one paragraph instead of splitting or simplifying it. I’ve never had much patience for wading through overly complicated text, so I feel at home making ideas more accessible.

Q: We loved reading your article on the art of documentation in the geospatial industry. How do you believe clear, concise, and consistent documentation makes a difference in the lives of those who use geospatial data? What are the main barriers beginners face when getting started with geospatial technology?

A: Similar to the point I just raised, people have far more important things to do than wander through a jungle of words. Getting to the point as fast as possible can make all the difference in whether people choose your product. The geospatial industry is especially prone to dense, wordy documentation because of the sheer complexity of the source material. There’s only so much you can simplify when it comes to satellite specifications, for example. But I still believe it’s the responsibility of those of us who want to attract new people to the industry to break down that complexity, so that people can use geospatial data in their own fields.

Q: Why do you think it’s important that geospatial documentation is approachable? What are the potential benefits and real-world impacts?

A: When documentation is easy to navigate and understand, people can focus on solving real-world problems instead of grappling with complex concepts. Clear documentation empowers people to turn to geospatial data for the insights they need in their industries. If we don’t explain how it works first, the adoption itself might never happen. So making it understood is the first step in this process.

Q: How does UP42’s approach to documentation align with its mission of democratizing access to geospatial data and tools?

A: I think of our documentation principles as a continuation of the “democratizing access” approach. You can’t achieve democratization without inclusivity, and that, in part, starts with the documentation: with the terms you use and your approach. Whether you gatekeep, even unintentionally, or proactively try to be approachable and comprehensive in your communication style, it makes a difference. Ultimately, it’s about ensuring that anyone who wants to learn more about geospatial can do so, regardless of how technical or experienced in the industry they are.

Q: UP42’s mission aligns perfectly with the industry’s need for democratizing access to geospatial data and tools, especially as the field becomes increasingly important in addressing global challenges such as climate change and disaster management. Can you share an example of how UP42’s documentation has simplified a particularly challenging geospatial concept?

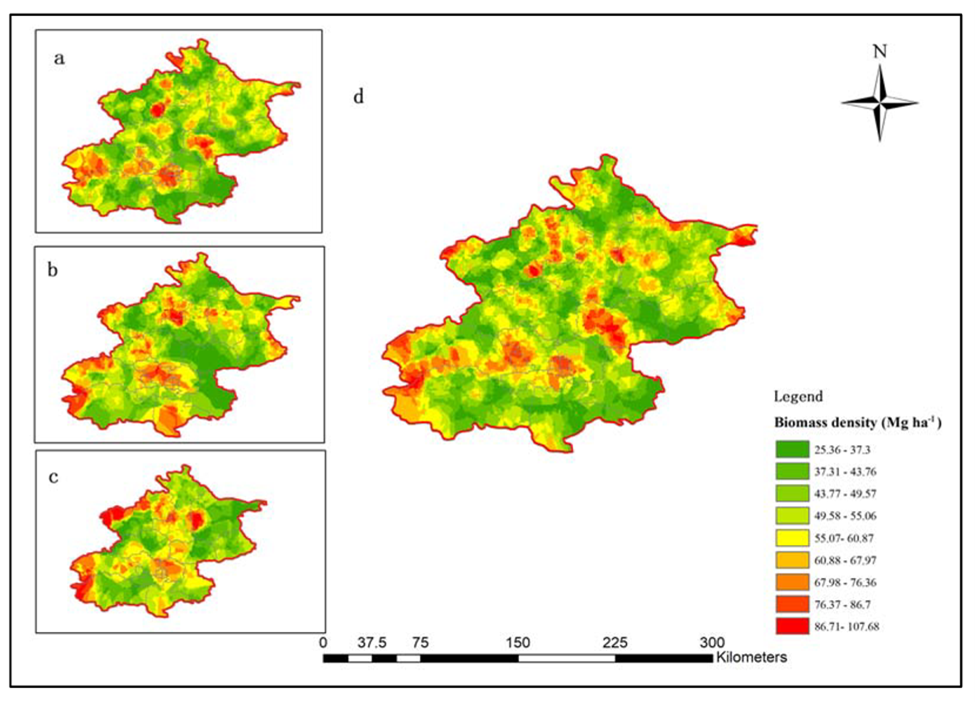

A: It was a challenge to explain the differences in processing levels because providers use their own terminology and naming conventions. And at UP42, being positioned between providers and customers, we needed to find a way to create a unified leveling system. We spent some time going through provider manuals and research papers, and discussing our ideas with experienced colleagues. In the end, we came up with a leveling system and introduced matrix tables on our collections’ pages to provide users with an understanding of the processing levels associated with each data product.

Q: How do you address the varying needs of different user groups (such as developers, analysts, and decision-makers) in your documentation? For example, how do you balance providing technical details with maintaining clarity for non-expert users?

A: It’s easy to do with UP42 products because we offer different access options tailored to different audiences. The API and SDK are designed for developers, while our console (the graphical interface) is intended for decision-makers. The console provides an overview of our products and offerings, and users who don’t need to build ordering pipelines with developer tools can also place orders there directly. By following this approach in our documentation, the audience flows separate naturally: we have API and SDK references and tutorials for developers, and console instructions for those using the graphical interface.

Q: We know how important it is to adhere to global English principles so that documentation is accessible and comprehensible to a diverse audience—including non-native English speakers or those unfamiliar with the industry. Are there any other best practices you follow in your documentation to ensure approachability?

A: We’re user advocates above all, so I think the first step would be to adopt this mindset, and everything else will follow. We have a few formalized principles. For example, we don’t just describe what our products can do; we explain how they can benefit the reader. I’ve already mentioned the principle of being mindful of people’s time, which we achieve by getting to the important thing fast and starting with the key takeaway. Another principle, a favorite of mine, is “function over form.” Basically, this means that when you’re presented with a choice between something that looks better and something that’s more accurate text-wise, you should always go with the more accurate option. Meaning is always more important than design.

Q: How does UP42 gather feedback on its documentation and incorporate user suggestions for improvement?

A: We regularly meet with the Support team to go over their tickets and find areas for improvement. The idea is to provide answers in the documentation upfront, to reduce support requests. We all suffer from the “curse of knowledge”—when you know the product, it’s hard to imagine what will be unclear to readers—so exercises like this help with getting out of your comfort zone.
