Drones that can see through walls using only Wi-Fi

A Wi-Fi transmitter and two drones. That’s all scientists need to create a 3D map of the interior of your house. Researchers at the University of California, Santa Barbara have demonstrated how two drones working in tandem can ‘see through’ solid walls to create a 3D model of the interior of a building using only, and we kid you not, Wi-Fi signals.

As astounding as it sounds, researchers Yasamin Mostofi and Chitra R. Karanam have devised this almost superhero-level X-ray vision technology. “This approach utilizes only Wi-Fi RSSI measurements, does not require any prior measurements in the area of interest and does not need objects to move to be imaged,” explains Mostofi, who teaches electrical and computer engineering at the University.

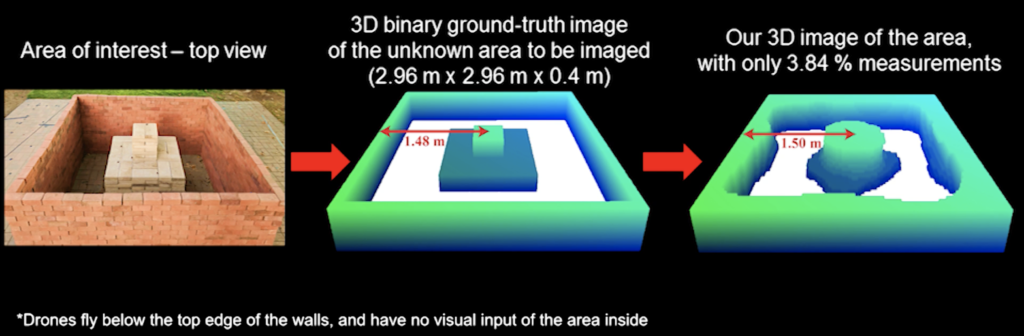

Here’s what the researchers did: they flew two UAVs along synchronized paths outside a closed, four-sided building for which the drones had no prior measurements. While one drone continuously transmitted a Wi-Fi signal, the other recorded the received signal strength. Because objects inside the building weaken the signal by different amounts, the variations in received power along the flight paths reveal where those objects are and how large they are, which is enough to build detailed 3D images of the interior.
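
To give a rough feel for how signal strength alone can be turned into a picture, here is a minimal Python sketch. It is not the researchers’ method, which is considerably more sophisticated. It assumes a simplified line-of-sight model in which the drop in received power (in dB) along each transmitter-to-receiver line equals the sum of per-cell attenuations the line passes through, and it swaps in plain regularized least squares (ridge regression) for the reconstruction. It images a single 2D slice; a 3D image would repeat this at several heights or use voxels. The grid size, the free-space power `P0`, the flight geometry, and the hidden object are all hypothetical.

```python
import numpy as np

GRID = 10    # hypothetical: the area is split into GRID x GRID unit cells
STEP = 0.05  # sampling step along each TX->RX line, in cell units
P0 = -40.0   # hypothetical received power (dBm) with nothing in the way

def ray_weights(theta, offset, grid=GRID, step=STEP):
    """Approximate length of one TX->RX line inside each cell.

    The transmitting and receiving drones sit on opposite sides of the area,
    at distance `grid` from its center along direction `theta`; `offset`
    shifts the line sideways, as the synchronized drones move along their paths.
    """
    center = np.array([grid / 2.0, grid / 2.0])
    d = np.array([np.cos(theta), np.sin(theta)])   # direction TX -> RX
    n = np.array([-np.sin(theta), np.cos(theta)])  # sideways offset direction
    u = np.arange(-grid, grid, step) + step / 2    # sample points along the line
    pts = center + offset * n + u[:, None] * d
    inside = ((pts[:, 0] >= 0) & (pts[:, 0] < grid) &
              (pts[:, 1] >= 0) & (pts[:, 1] < grid))
    w = np.zeros((grid, grid))
    # Each sample inside a cell adds `step` to that cell's path length.
    np.add.at(w, (pts[inside, 0].astype(int), pts[inside, 1].astype(int)), step)
    return w.ravel()

def build_weights(angles_deg, offsets):
    """One row per TX/RX position pair: path length through every cell."""
    return np.array([ray_weights(np.deg2rad(a), s)
                     for a in angles_deg for s in offsets])

def reconstruct(W, drop, lam=1e-3):
    """Ridge regression: solve (W^T W + lam*I) x = W^T drop for attenuations x."""
    A = W.T @ W + lam * np.eye(W.shape[1])
    return np.linalg.solve(A, W.T @ drop)

# Hypothetical hidden object: a block of cells that attenuates the signal.
truth = np.zeros((GRID, GRID))
truth[3:6, 4:8] = 1.0

angles = np.arange(0, 180, 10)          # 18 flight-path orientations
offsets = np.arange(-7, 7.5, 0.5)       # 29 positions per orientation
W = build_weights(angles, offsets)

rssi = P0 - W @ truth.ravel()           # simulated noise-free RSSI readings
drop = P0 - rssi                        # power lost to objects, in dB
est = reconstruct(W, drop).reshape(GRID, GRID)
```

In this noise-free toy setup the estimated attenuation map should closely match `truth`. Deleting rows of `W` is a simple way to see how image quality degrades as measurements get sparser; real measurements add noise, multipath, and wall effects that this model ignores.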


Mostofi concedes that imaging real-world areas could be much more challenging because the number of unknowns grows considerably. Still, the researchers achieved near-perfect results in their experiments using only a sparse set of Wi-Fi measurements (less than 4%). Collecting more measurements would presumably improve the results further.

This technology could have a major impact on emergency search-and-rescue operations, where first responders need to know what is inside a building without risking their lives. Other possible applications include archaeological discovery and structural monitoring.

Check out the video below to see the exact approaches the researchers followed to achieve this 3D through-wall imaging: