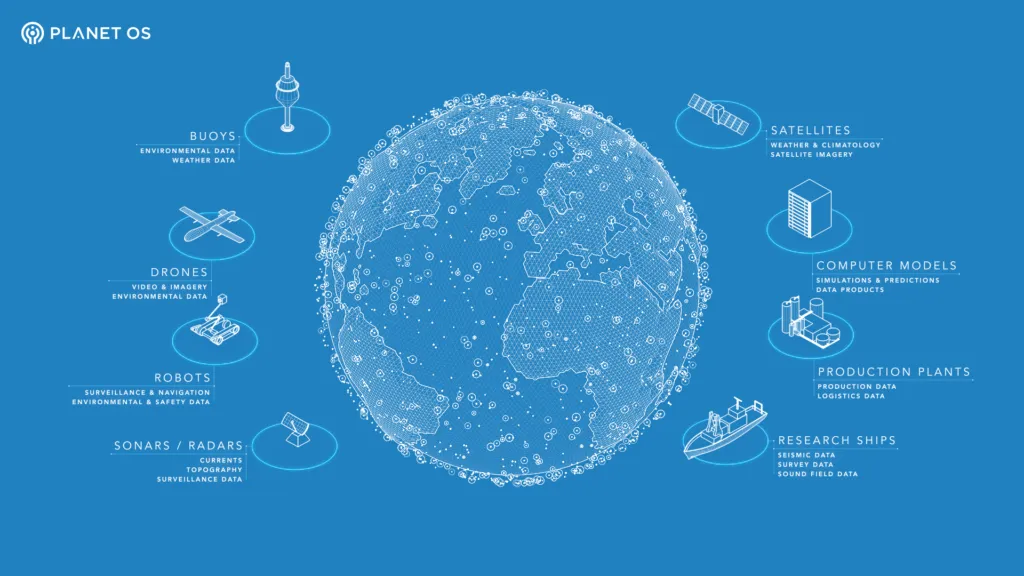

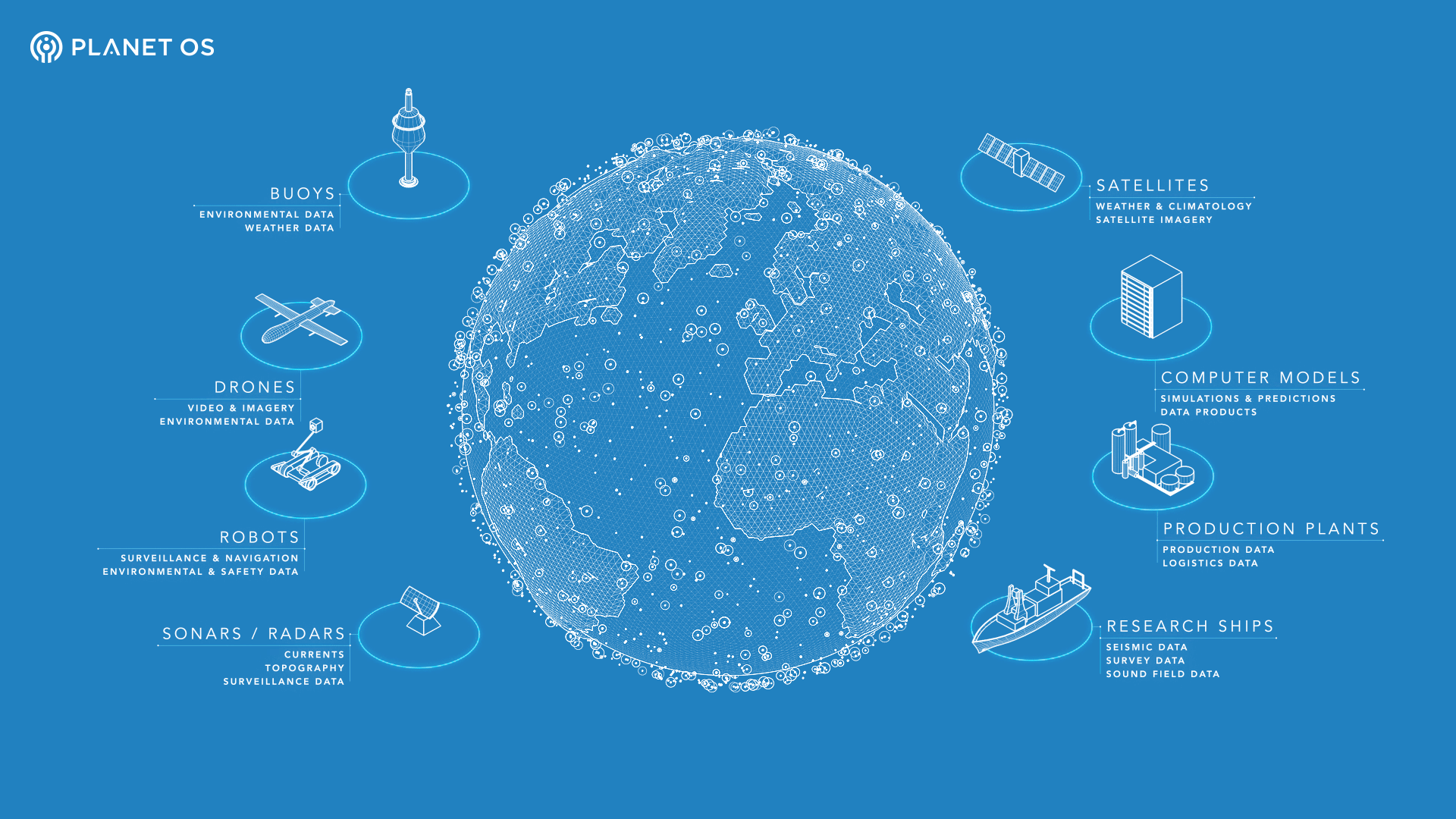

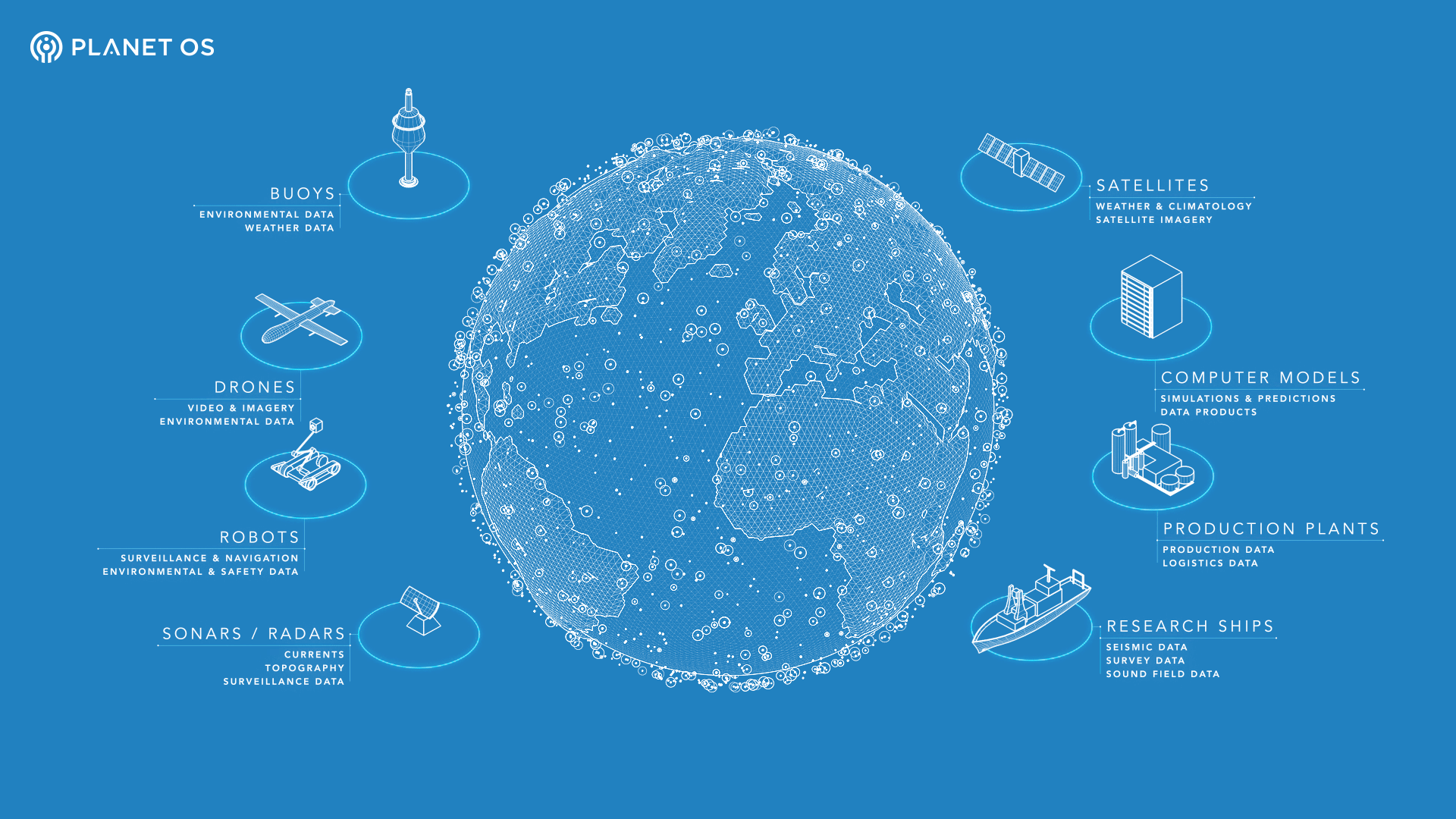

The California-based startup PlanetOS is on a mission to connect all the geospatial sensors in the world (in the oceans, in the atmosphere, on land and in space) into a big data platform for analysing and discovering data about our planet. In other words, they want to build an operating system for the world!

Planet OS’s goal is to index the public web for geospatial sensor datasets and become the first place every data professional or executive will use to look for sensor data intelligence. – PlanetOS website

We were really excited to talk to their CEO Rainer Sternfeld in a free-ranging chat about PlanetOS and his vision for the company. Here’s the summary.

Planet OS – an operating system for the entire world! How do you create an operating system for the planet? What exactly are you guys working on?

We live in a world where geospatial sensor networks are proliferating at an unprecedented rate, especially in situ sensors deployed from weather stations to drones and imaging technology from drones to nanosatellites. At the same time, a growing number of industries are becoming data-driven, from weather/climate forecasting to energy to agriculture and insurance. All of them need more than one type of data in their analysis. The problem is that the diversity of data types, access protocols and formats makes it impossible to store all of this in a one-size-fits-all database, so someone needs to be the integration layer for that data: someone who has a good understanding of both the physical world and the digital world, and who can provide secure data hubs to those enterprises, with features like data access, discovery of open and commercial data, publishing, etc.

Once the data integration and data hub layers exist, it becomes relatively easy to derive meaningful data intelligence from the aggregated data. That’s the gap that we are trying to fill: to become the data infrastructure for our customers, along with access to external content they are not normally exposed to. In short, Planet OS is a cloud-first data infrastructure designed for geospatial sensor networks, Earth Observation and Environmental Intelligence applications.

IoT is everywhere and is being used in several different applications. Why did you guys start with sensors from the ocean? Why environmental data?

Back in 2009, my co-founder Kalle Kägi and I were involved in a project to design and build a data buoy, profiling phytoplankton concentration in the Gulf of Finland. Since I had a background in EOD robots, electric car charging and enterprise software, I wasn’t satisfied with how long it took our customers to process and visualize the data (3 months!). Just about every data scientist, system integrator or software developer we spoke to in Europe in spring of 2011 was having problems with finding, accessing and processing data.

So in February 2012, we decided to found Marinexplore, and started to build the very first big data platform designed for oceanic industries. To accelerate the learning process, we started a free service called Marinexplore.org, which allowed us to test everything we built along with data from over 40,000 devices coming from 35 institutions worldwide.

Around December 2013 it became clear that, in working for the ocean community, (a) we were handling the widest variety of data, covering all the data types that land-based use cases rely on as well; and (b) we could make a significantly bigger impact by narrowing our offering but applying it to all environmental intelligence. Weather and climate were becoming more unpredictable, and enterprises with real-world applications such as wind farms, jet engines, power plants and coast guards were increasingly exposed to this external risk without being able to quantify it and take action against it. This is why the combination of geospatial sensor data and environmental intelligence is so important.

Isn’t the sensor data problem mainly a scientific issue at the moment? What kind of commercial companies are you working with currently?

Your readers probably know that these days, heavyweight applied science is done within the walls of private enterprise. Companies building new sensors, instrumentation and data processing methods invest heavily in streamlining the data feeds. Naturally, it all started in partnership with governments and then moved into areas where the assets at risk were the most valuable or influential to bigger communities. This is why we at Planet OS focus on energy and the government. Although the use cases and value propositions for them are different, they work with the same data from the same devices.

Planet OS is working or has worked with power companies such as RWE (running a dashboard for the second largest offshore wind farm in the world), a large power and automation corporation, industrial vessel companies such as Bravante (with end users like BP, Total, Chevron, etc.), weather forecasting companies, but also government agencies such as NOAA and NASA/BAERI.

How is spatial big data processing different from other types of big data processing, besides the location tag? What exactly is big data in the first place? Hasn’t spatial data always been difficult to process with traditional data processing systems?

The explosion of location-based sensing is changing the way we think about spatial data acquisition, processing, and analysis. First of all, like in all fields, we have had a huge growth in the amount of data available that pushes us out of traditional databases and data warehouses. Second, we’re handling data in a variety of very different formats – point data from stationary or mobile sensors, gridded data from satellites and forecasts, radial data from radars, etc. Third, when handling data from a variety of sources, metadata, or data about data, can be a challenge since it’s used differently between fields and unevenly by practitioners within fields.

For example, a particular challenge in spatial data is normalization between data sets covering the same geographic regions but with different coordinate systems or polygonal breakdowns.
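To make the coordinate-system part of that concrete, here is a minimal sketch of bringing one dataset's coordinates into another's system. It assumes one source reports WGS84 longitude/latitude in degrees and the other uses Web Mercator metres; that pairing and the function names are chosen purely for illustration and are not a description of how Planet OS does it. In practice a projection library such as pyproj would handle the general case.

```python
import math

# WGS84 semi-major axis in metres, the sphere radius used by Web Mercator.
EARTH_RADIUS_M = 6378137.0


def lonlat_to_web_mercator(lon_deg, lat_deg):
    """Project WGS84 longitude/latitude (degrees) to Web Mercator (metres)."""
    x = EARTH_RADIUS_M * math.radians(lon_deg)
    y = EARTH_RADIUS_M * math.log(
        math.tan(math.pi / 4 + math.radians(lat_deg) / 2)
    )
    return x, y


def web_mercator_to_lonlat(x, y):
    """Inverse projection: Web Mercator (metres) back to degrees."""
    lon_deg = math.degrees(x / EARTH_RADIUS_M)
    lat_deg = math.degrees(2 * math.atan(math.exp(y / EARTH_RADIUS_M)) - math.pi / 2)
    return lon_deg, lat_deg


if __name__ == "__main__":
    # A point in the Gulf of Finland region, expressed in both systems.
    x, y = lonlat_to_web_mercator(24.75, 59.44)
    print(x, y)
    print(web_mercator_to_lonlat(x, y))
```

Once both datasets are expressed in the same system, their points or grid cells can be compared directly.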

In the case of Planet OS, we are building a data infrastructure for providing a service. As a result, we work with data in a variety of different forms and keep the data in the most appropriate system. Often this means we maintain specially formatted files and keep only metadata in the databases, because this allows us to quickly find the data that users request and assemble exactly the data needed for processing.
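The "files plus metadata index" pattern described above can be sketched roughly as follows. This is an illustrative assumption, not Planet OS's actual design: the table layout, file paths and variable names are all hypothetical, and an in-memory SQLite database stands in for whatever metadata store is really used.

```python
import sqlite3

# Hypothetical catalog rows: (path, variable, lon_min, lon_max,
# lat_min, lat_max, t_start, t_end). Only metadata lives in the database;
# the measurements themselves stay in the files.
SAMPLE_ROWS = [
    ("gfs/2015-06-01.nc", "wind_speed", -180.0, 180.0, -90.0, 90.0,
     "2015-06-01T00:00:00Z", "2015-06-01T23:59:59Z"),
    ("gfs/2015-06-02.nc", "wind_speed", -180.0, 180.0, -90.0, 90.0,
     "2015-06-02T00:00:00Z", "2015-06-02T23:59:59Z"),
    ("buoy/gulf_of_finland.nc", "chlorophyll", 22.0, 30.0, 59.0, 61.0,
     "2015-06-01T00:00:00Z", "2015-06-30T23:59:59Z"),
]


def build_catalog(rows):
    """Create an in-memory metadata index over the data files."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        """CREATE TABLE files (
               path TEXT, variable TEXT,
               lon_min REAL, lon_max REAL, lat_min REAL, lat_max REAL,
               t_start TEXT, t_end TEXT)"""
    )
    conn.executemany("INSERT INTO files VALUES (?, ?, ?, ?, ?, ?, ?, ?)", rows)
    return conn


def find_files(conn, variable, lon, lat, when):
    """Return paths of files whose extent covers the point and timestamp.

    Timestamps are ISO 8601 strings in UTC, so text comparison orders them
    chronologically.
    """
    cur = conn.execute(
        """SELECT path FROM files
           WHERE variable = ?
             AND ? BETWEEN lon_min AND lon_max
             AND ? BETWEEN lat_min AND lat_max
             AND ? BETWEEN t_start AND t_end
           ORDER BY path""",
        (variable, lon, lat, when),
    )
    return [row[0] for row in cur]


if __name__ == "__main__":
    catalog = build_catalog(SAMPLE_ROWS)
    print(find_files(catalog, "wind_speed", 24.75, 59.44, "2015-06-02T12:00:00Z"))
```

The lookup touches only the small metadata table; the matching files would then be opened to assemble the values the user asked for.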

Yesterday you launched the Planet OS API. Could you tell us more about it, i.e. what datasets one can find, the pricing, you know, the entire summary?

There is a tremendous wealth of geospatial data that’s produced by the global community, but the variety and complexity of the available interfaces can make working with this data difficult. This is particularly true when working with multiple data types or data sourced from multiple organizations.

Our goal with the Planet OS API is to provide a common, programmatic interface to disparate Earth observation datasets. Yesterday we released our RESTful interface for point queries, which allows users to request data at a specific latitude and longitude and returns a JSON-formatted response containing a time series of values. This is only the start, however, and we have some exciting plans to expand the API’s features and functionality in the future.
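As a rough picture of what a point query of this kind looks like from the client side, here is a small sketch. Everything specific in it is an assumption made for illustration: the base URL, the path layout, the parameter names (`lon`, `lat`, `apikey`) and the shape of the JSON response are hypothetical placeholders, not the documented Planet OS API. No network request is made; a sample response string is parsed instead.

```python
import json
from urllib.parse import urlencode

# Placeholder base URL; the real endpoint is not given in this article.
BASE_URL = "https://api.example.com/v1"

# Hypothetical response body: a list of timestamped entries with values.
SAMPLE_RESPONSE = """
{
  "entries": [
    {"time": "2015-06-01T00:00:00Z", "values": {"wave_height": 1.2}},
    {"time": "2015-06-01T03:00:00Z", "values": {"wave_height": 1.5}},
    {"time": "2015-06-01T06:00:00Z", "values": {"wind_speed": 7.0}}
  ]
}
"""


def build_point_query_url(dataset_id, lon, lat, api_key):
    """Build the URL for a point query at one longitude/latitude."""
    query = urlencode({"lon": lon, "lat": lat, "apikey": api_key})
    return f"{BASE_URL}/datasets/{dataset_id}/point?{query}"


def parse_time_series(body, variable):
    """Extract (time, value) pairs for one variable from a JSON response."""
    payload = json.loads(body)
    return [
        (entry["time"], entry["values"][variable])
        for entry in payload["entries"]
        if variable in entry["values"]
    ]


if __name__ == "__main__":
    print(build_point_query_url("example_dataset", 24.75, 59.44, "demo"))
    for time, value in parse_time_series(SAMPLE_RESPONSE, "wave_height"):
        print(time, value)
```

The appeal of such an interface is that the same two steps, build a URL and read a time series, would apply regardless of which underlying dataset is being queried.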

In parallel, through a mixture of automated discovery and user recommendation, we’re building a curated catalog of open and commercial datasets that will be serviced by our API. Users can already freely access three global forecast models – NOAA WaveWatch III, NCEP Global Forecast System (GFS), and HYCOM Global Ocean Forecast – and we plan to frequently update the catalog with new datasets moving forward.

With a free account, users can perform up to 500 API calls per day and transfer 5 GB of data per month. We also provide an enterprise solution for customers whose needs exceed that limit.

In some sense, the business model of Planet OS also includes being a data marketplace. How difficult has it been to get other companies to sell their data on the platform?

First, a few modelers from two commercial data companies (that were users of Marinexplore) started to ask whether they could include their data on the site as well. We learned that their problems are in automating sales (a lot of manual work goes into creating a custom dataset for each order), pricing management and, above all, visibility. We created a complete checkout flow for that, along with Stripe integration, purchase authorization and pricing management.

At the same time, before we commit, we would also like to know whether the data that will be sold on Planet OS is popular or not. So the best win-win situation was to start signing up companies that would be interested in exposing their data products’ metadata, and to gauge the relative popularity of, and preferences for, their data products among the visitors and users of Planet OS. So far we have signed up 20 commercial data vendors with a focus on weather/climate forecasting, geological and environmental data. We are encouraging all data vendors to get in touch, and we’d love to talk about how there could be a fit and how we can help them grow.

This is totally geoawesome! We are big fans already! Can you share with our readers what are your plans for the next couple of months?

For the public data hub, Planet OS will continue to put out APIs for more open datasets. We’d love to see what people build with them. We believe that accessing data should be as simple as flipping a light switch and that it shouldn’t be a showstopper for creativity, especially among app developers who want to use the best data for their application. In addition to that, we’ll be introducing more and more commercial data vendors that we already have in the pipeline. Come and check them out at data.planetos.com!

On the enterprise side, we will continue working on delivering our data intelligence solutions for the energy industries and enterprise data hub solutions in the U.S., Europe and Brazil.

Here’s to Rainer and his entire team at PlanetOS! We are really excited to see what the future holds for PlanetOS and wish you guys the very best! #Geoawesomeness