New high-resolution mosaic of the Arctic and Antarctic

There is news from the sixth and ‘seventh’ continents: the Polar Geospatial Center (PGC) at the University of Minnesota has completed a high-resolution mosaic of the Arctic and Antarctic that shows the poles in unprecedented detail. The Landsat Image Mosaic of Antarctica (LIMA), launched six years ago, used the panchromatic mode at the sub-pixel level to achieve a resolution of 15 m. The latest product reaches a horizontal resolution of around half a meter, never seen before for these regions, by using imagery from commercial satellites such as WorldView-1, WorldView-2, QuickBird-2 and GeoEye-1.
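
To get a feeling for what the step from 15 m to about half a meter means, the two resolutions can be compared by the number of pixels needed to cover the same area. The following is only a rough sketch: it assumes square pixels and takes "around half a meter" as exactly 0.5 m, and the function name `pixels_per_km2` is made up for this illustration.

```python
def pixels_per_km2(resolution_m: float) -> float:
    """Number of square pixels needed to cover 1 km^2 at a given ground resolution."""
    pixels_per_side = 1000.0 / resolution_m  # pixels along one 1 km edge
    return pixels_per_side ** 2


lima_pixels = pixels_per_km2(15.0)  # LIMA: 15 m resolution
new_pixels = pixels_per_km2(0.5)    # new mosaic: assumed to be exactly 0.5 m

# Ratio of pixel counts for the same area (the square of the linear factor 15 / 0.5 = 30)
ratio = new_pixels / lima_pixels

print(f"LIMA:       {lima_pixels:,.0f} pixels per km^2")
print(f"New mosaic: {new_pixels:,.0f} pixels per km^2")
print(f"Factor:     about {ratio:.0f}x more pixels for the same area")
```

Under these assumptions the new mosaic is 30 times finer along each axis, which means roughly 900 times as many pixels for the same area on the ground.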

One of the biggest challenges was coping with the large number of images, more than 30,000, from different companies. To obtain one single mosaic, the orientation of the images had to be corrected and the ‘orthorectification’ had to be automated. The images are integrated into web-based mapping applications of the National Science Foundation for viewing high-resolution commercial satellite imagery mosaics of the polar regions. After the launch of the new bedrock topography of Antarctica, ‘bedmap2’, in spring this year, this is the second important remote sensing achievement within a short time.

According to PGC director Paul Morin, the images reveal every penguin colony and every glacier crevasse. The PGC Imagery Viewers are accessible to any researcher who has US federal funding. The imagery is licensed for federal government use and is not available to the general public. Other requests are subject to specific requirements and are tied to a particular purpose and date. In general, the benefit goes to scientific and governmental activities.

The new imagery collection will obviously benefit sciences such as climate research and glacier studies. Because the spatial resolution is fine enough to detect even penguin colonies, ecological research will also profit from the images: for instance, the number of animal groups can be counted better than ever before. At this point I want to stress that knowledge gained from research always brings new (political) responsibilities. I am referring to the soaring interest of neighboring states in Arctic oil reserves and the environmental problems connected to this topic. Therefore, the new level of information (PGC maps, bedmap2, …), which will be useful for different questions and parties, calls for a thorough discussion of its usability and of the potential consequences of practical applications.

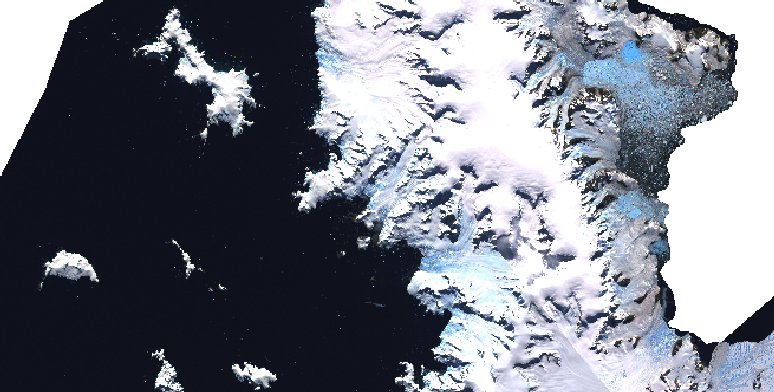

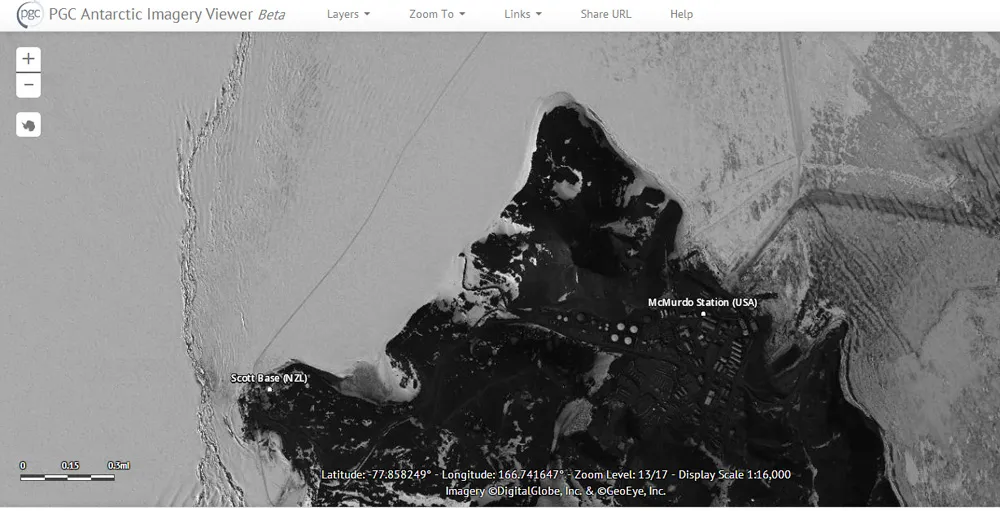

The Polar Geospatial Center Imagery Viewer allows scientists and others with federal funding or affiliations to create maps from a high-resolution satellite mosaic of Antarctica. Pictured here is Ross Island, home to McMurdo Station and Scott Base. Photo Credit: PGC Antarctic Imagery Viewer. Source: Directionsmag.

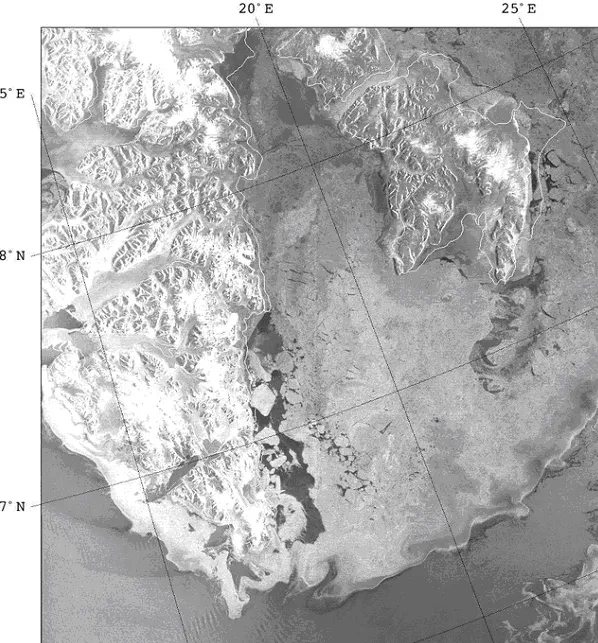

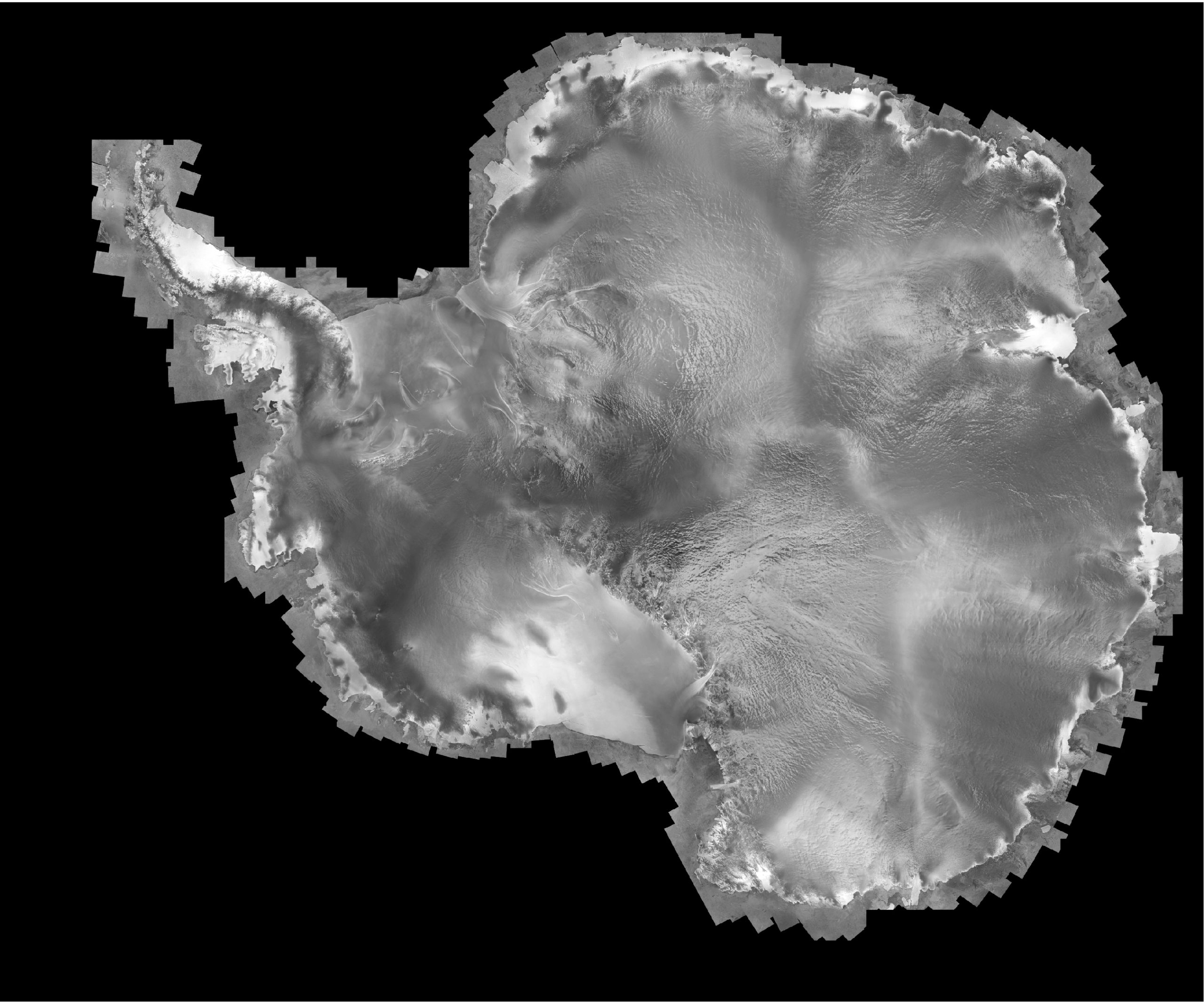

SAR image from the Antarctic Mapping Mission of RADARSAT-1. The mosaic was compiled by the Byrd Polar Research Center. The images were taken in 1997 and have a ground resolution of 125 m. Source: PGC.

Source: Directionsmag
