How to create a reliable forest inventory using Earth Observation data

Editor’s note: This article was written as part of EO Hub – a journalistic collaboration between UP42 and Geoawesome. Created for policymakers, decision-makers, geospatial experts and enthusiasts alike, EO Hub is a key resource for anyone trying to understand how Earth observation is transforming our world.

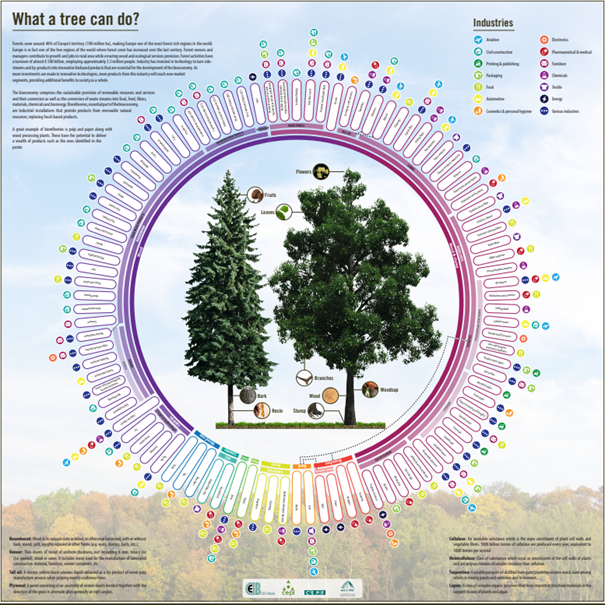

Forests are an essential part of our planet, without which life on Earth would not exist. They filter the water we drink, purify the air we breathe, prevent erosion and, importantly, slow climate change. They provide people with critical natural resources, from wood to food, and give shelter to animals around the world.

What a tree can do (Source: CEPI)

The forestry industry is an important contributor to the national economies of many countries. It provides a wide range of products, including food, fuel, construction materials, medicines and raw materials for further processing.

One of the main challenges in forest management is resource sustainability. In 1992, after the UN introduced the Forest Principles at the Conference on Environment and Development in Rio de Janeiro, many companies changed their forest management practices. The document called on member countries to promote forestry practices that “meet the social, economic, ecological, cultural and spiritual needs of present and future generations.” Forest companies face increasing risks, which can be reduced by protecting forest biodiversity, supporting healthy ecosystems, and preserving clean soil and water resources. The main threats caused by environmental change include:

– Wildfires

Climate change, land use and land management are affecting the occurrence and severity of wildland fires in many parts of the world.

– Insect Outbreaks

An increase in the frequency and severity of insect outbreaks is leading to the loss of large areas of forest around the world.

– Extreme events

Climate change is also making extreme events more frequent, including extreme winds. Combined with drought, these severely weaken some tree species, which are then attacked by harmful insects and fungi and eventually die.

Overview of core technologies

Traditional management and monitoring of large forests is time-consuming, labor-intensive and expensive. That is why new technologies for forest analysis, such as satellite imagery and LiDAR, have come into use in recent years. The two technologies acquire different kinds of data, but both give forest owners valuable information that was not previously available.

LiDAR uses laser light to survey the Earth’s surface. Its main advantage is that laser pulses pass through gaps in the tree canopy, so it can map individual trees as well as the ground beneath them. It can also provide highly detailed and accurate results. However, LiDAR has some key drawbacks. Data is typically collected from aircraft, which requires specialized equipment and is labor-intensive and very expensive. As a result, data updates are infrequent, sometimes several years apart, so the data may be outdated.
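To make the "trees and the ground beneath them" idea concrete, here is a minimal Python sketch. It assumes the LiDAR point cloud has already been gridded into two co-registered rasters: a digital surface model (DSM) from first returns and a digital terrain model (DTM) from ground returns. Their difference is a canopy height model (CHM), and a simple 3x3 local-maximum filter on the CHM is one common way to find candidate treetops. The function names and the 2 m minimum height are hypothetical choices for illustration, not Tesera's or any vendor's method.

```python
import numpy as np


def canopy_height_model(dsm, dtm):
    """Canopy height = surface elevation (first returns) - ground elevation (DTM)."""
    chm = np.asarray(dsm, dtype=float) - np.asarray(dtm, dtype=float)
    # Small negative heights are usually interpolation noise; clamp them to 0.
    return np.clip(chm, 0.0, None)


def detect_treetops(chm, min_height=2.0):
    """Return (row, col) cells strictly higher than all 8 neighbors.

    A basic fixed 3x3 local-maximum filter (hypothetical parameters): cells
    lower than `min_height` metres are ignored, and flat-topped crowns where
    neighboring cells tie are not reported.
    """
    chm = np.asarray(chm, dtype=float)
    rows, cols = chm.shape
    # Pad with -inf so border cells are compared only with real neighbors.
    padded = np.pad(chm, 1, mode="constant", constant_values=-np.inf)
    is_peak = chm >= min_height
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            if dr == 0 and dc == 0:
                continue
            # Shifted view: neighbor[r, c] == chm[r + dr, c + dc] (or -inf off-grid).
            neighbor = padded[1 + dr : 1 + dr + rows, 1 + dc : 1 + dc + cols]
            is_peak &= chm > neighbor
    return [(int(r), int(c)) for r, c in zip(*np.nonzero(is_peak))]


# A 3x3 toy tile on flat ground at 100 m with one 18 m tree in the middle.
dsm = np.array([[102.0, 103.0, 101.0],
                [104.0, 118.0, 105.0],
                [101.0, 103.0, 100.0]])
dtm = np.full((3, 3), 100.0)
chm = canopy_height_model(dsm, dtm)
print(detect_treetops(chm))  # [(1, 1)]
```

A fixed 3x3 window is the simplest possible choice; in practice the window is usually scaled to expected crown size, and the CHM is often smoothed first to suppress branch-level peaks.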

Satellites, on the other hand, provide optical as well as radar data on the characteristics of entire forests, and they revisit the same area far more often than airborne LiDAR surveys. Such frequent monitoring allows a much faster response to events and enables temporal analysis, that is, monitoring changes over time. However, the data is mainly limited to what is visible at the top of the forest canopy. Forestry companies are seeing the advantages of combining optical and radar data in a wide range of applications: it allows them to observe the effects of extreme weather events and fires, and to detect tree health problems early enough to address them.

To plan and manage forests effectively, managers need solutions that provide detailed information on the threats described above. “Access to highly accurate estimates of forest stand structure and composition is fundamental to ensure forest managers can plan and manage forest landscapes sustainably over time and space,” said Bruce MacArthur, President and CEO of Tesera, a remote sensing and forestry industry leader, in an interview with UP42.

Forestry use cases

Forest inventories enable forest owners and managers to operate and plan in a sustainable and profitable manner. Investment in silviculture requires reliable stand-level data to optimize growth and, over time, value. Emerging areas such as ecosystem services, mitigation banking and carbon sequestration require high-resolution information to inventory forests, support conservation efforts and contribute to sustainable development. “There is a need for higher resolution forest inventory data and easier to use forestry solutions which is why we created our suite of High-Resolution Inventory Solutions (HRIS) to help move the forestry industry forward,” says Bruce.

Satellites in geostationary orbit can image fires every 5 to 15 minutes. Their data capture the full impact of fires: detecting active fires quickly, tracking smoke transport, informing fire management and mapping the extent of ecosystem change. Changes can be mapped days, and even decades, after a fire. In addition, the locations of new fire outbreaks can be sent directly to land managers around the world.

Typically, foresters must obtain each piece of information from a separate source, which is why intermediary data platforms are valuable. One of these platforms is UP42, which has started a partnership with Tesera. The partnership will allow forestry customers to acquire specialized data quickly and comprehensively from a single platform. “We have found that satellite data and other remote sensing and geospatial data are really spread out across a number of providers and platforms, all with different degrees of difficulty to obtain and stream into analytics processes. What we really like about what UP42 has done is putting these big datasets into one place with common building blocks to interface with the data for our use cases,” says Bruce.

Another challenge for forestry is pests, particularly bark beetles, which cause extensive damage to trees in continental regions. Some of the most popular indicators for monitoring this pest are the Normalized Difference Vegetation Index (NDVI) and the Enhanced Wetness Difference Index (EWDI). With these, foresters can identify areas where diseased or dead trees are present. To make forest management quick and efficient, applications that automatically classify forest areas are increasingly being developed to support tasks such as tree inventory. The system analyzes the data automatically and then produces a report with information such as the number of trees in a selected area and their parameters.
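NDVI is computed per pixel from the near-infrared (NIR) and red bands as NDVI = (NIR - Red) / (NIR + Red). Healthy foliage reflects strongly in NIR and absorbs red light, so values fall as trees lose vigor. The sketch below assumes two co-registered reflectance images of the same stand acquired on different dates and flags pixels whose NDVI dropped by more than a threshold. The 0.15 threshold and the function names are hypothetical; real thresholds depend on sensor, season and species. The EWDI follows the same before/after pattern but differences Tasseled Cap wetness, which needs sensor-specific coefficients, so it is not shown here.

```python
import numpy as np


def ndvi(nir, red):
    """Normalized Difference Vegetation Index: (NIR - Red) / (NIR + Red).

    Pixels where NIR + Red == 0 (e.g. no-data) are returned as NaN.
    """
    nir = np.asarray(nir, dtype=float)
    red = np.asarray(red, dtype=float)
    total = nir + red
    out = np.full(total.shape, np.nan)
    # Divide only where the denominator is non-zero; other cells stay NaN.
    np.divide(nir - red, total, out=out, where=total != 0)
    return out


def flag_ndvi_decline(ndvi_before, ndvi_after, threshold=0.15):
    """Boolean mask of pixels whose NDVI dropped by more than `threshold`.

    The 0.15 default is a hypothetical illustration value, not a calibrated one.
    NaN pixels compare as False and are therefore never flagged.
    """
    drop = np.asarray(ndvi_before) - np.asarray(ndvi_after)
    return drop > threshold


# Two pixels of one stand: the first loses NIR reflectance (e.g. crown fading),
# the second stays roughly stable.
before = ndvi(nir=[0.40, 0.40], red=[0.05, 0.05])
after = ndvi(nir=[0.25, 0.39], red=[0.10, 0.05])
print(flag_ndvi_decline(before, after))  # [ True False]
```

Working on the difference between two dates, rather than a single NDVI threshold, reduces the influence of species and site differences that make some healthy stands naturally "greener" than others.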

However, Earth observation data is not just about satellites and aircraft. Drones are increasingly being used to acquire highly accurate data. Combined with artificial intelligence, drone data helps protect forests by supporting inventories, detecting pests, assessing the condition of trees and calculating the areas affected by natural disasters. Drones also make it possible to collect data from the hardest-to-reach places.

Earth observation data are also invaluable for assessing damage caused by strong winds. After the windstorm in the Piska Forest in 2006, Piotr Wężyk from the University of Agriculture in Krakow carried out an analysis of the forest damage. Damaged stands were mapped by processing color-infrared (CIR) aerial photographs and interpreting the damage. The resulting thematic maps were then used in a GIS spatial analysis to determine the extent of the damage. Thanks to continuous technological development, these steps no longer have to be carried out separately. The UP42 platform features the Land Lines application, whose algorithm scans remote sensing imagery for natural forest loss. With platforms like this providing comprehensive data, forest management using new technologies is available to anyone interested.
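Once damaged stands have been classified, determining the extent of the damage largely comes down to counting pixels: each pixel covers pixel_size² of ground, and 1 ha = 10,000 m². The minimal sketch below assumes a boolean damage mask and a raster of stand IDs on the same grid, in a projected coordinate system with square pixels measured in metres. The function name and inputs are hypothetical, and this is not the procedure used in the Piska Forest study or by Land Lines.

```python
import numpy as np

M2_PER_HECTARE = 10_000.0


def damaged_area_by_stand(stand_ids, damage_mask, pixel_size_m):
    """Damaged area in hectares for each stand ID.

    Assumes both rasters share one grid in a projected CRS with square pixels
    of side `pixel_size_m` metres (hypothetical inputs for illustration).
    """
    stand_ids = np.asarray(stand_ids)
    damage_mask = np.asarray(damage_mask, dtype=bool)
    if stand_ids.shape != damage_mask.shape:
        raise ValueError("stand and damage rasters must have the same shape")
    pixel_ha = pixel_size_m * pixel_size_m / M2_PER_HECTARE
    # Keep only the stand IDs of damaged pixels, then count pixels per stand.
    ids, counts = np.unique(stand_ids[damage_mask], return_counts=True)
    return {int(i): int(n) * pixel_ha for i, n in zip(ids, counts)}


# Toy example: two stands on a 10 m grid (Sentinel-2-like resolution).
stands = np.array([[1, 1, 2],
                   [1, 2, 2]])
damage = np.array([[True, False, True],
                   [False, True, True]])
areas = damaged_area_by_stand(stands, damage, pixel_size_m=10.0)
print({k: round(v, 2) for k, v in areas.items()})  # {1: 0.01, 2: 0.03}
```

Pixel counting is only as accurate as the underlying classification and the grid: in a geographic (latitude/longitude) coordinate system pixel areas vary with latitude, which is why the sketch assumes a projected one.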

Land Lines application (Source: Tesera)

Conclusions

Despite the numerous threats it faces, the forestry industry now has the opportunity to obtain the best possible data for forest inventories. Thanks to advances in technology, these opportunities are available to all interested customers. As Bruce MacArthur summarized on The Digital Forester podcast: “The quantum change that happened is the fact that people now can use these technologies in innovative ways that were previously limited to top researchers, but now with advanced computing, cloud processing, machine learning and remote sensing, it enables companies to be able to take advantage of them in unique ways that help our clients in ways that were not possible previously.”
