How the Danish government used AI on satellite data to identify slurry tanks

For the past few years, I have heard a lot about the potential of Deep Learning in the geospatial industry and the use of AI on satellite data to automate the analysis and interpretation of raster data. There is a lot of research and there are some promising startups in this space, but not many large-scale success stories of AI in production come to mind, so every such example catches my attention.

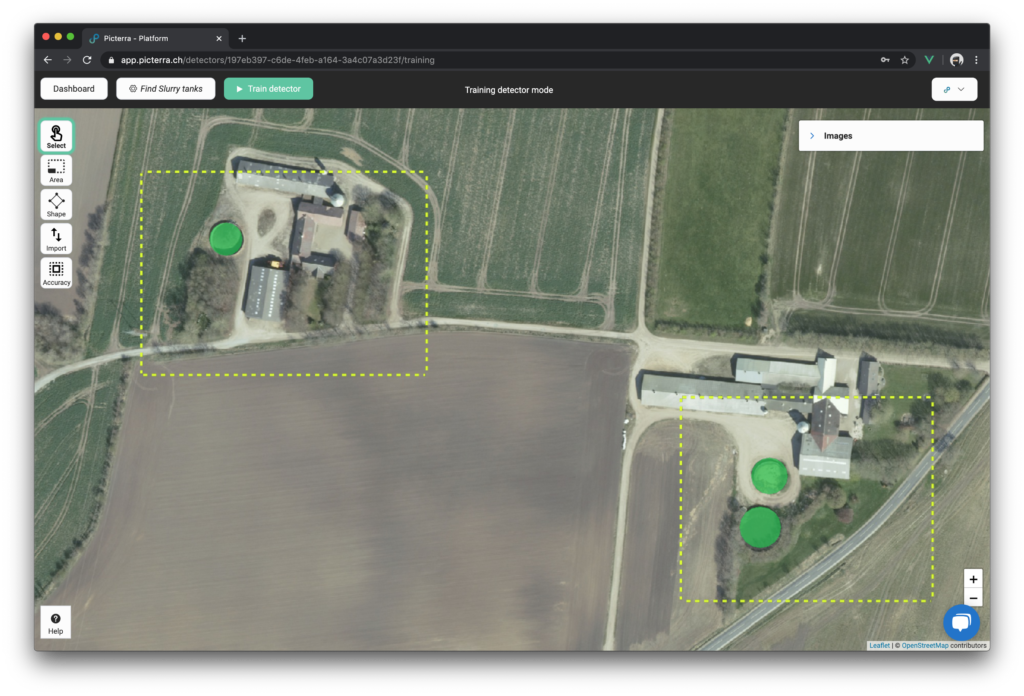

An interesting project was delivered at the end of last year using the Picterra platform. This Switzerland-based startup offers a cloud-based geospatial tool that lets users train Deep Learning models on satellite and aerial imagery without writing a single line of code. Picterra was used by the Danish agricultural advisory institute SEGES, which the government had tasked with measuring the country's total ammonia emissions.

Ammonia emissions come mostly from so-called slurry tanks, the large circular concrete structures where farmers collect their animals' waste. Emissions are also lower from tanks with a covered roof. No data on the presence of slurry tanks was available, and counting them manually across 34,000 farms would have taken months, so this looked like a perfect use case for object detection.

SEGES had access to two critical data sources perfect for Deep Learning applications:

- A WMS imagery server covering the whole of Denmark at 25 cm spatial resolution (1 TB of data)

- Centroids of the 34,000 farms to be investigated

The first provided imagery of consistent quality across the entire country. The second made it possible to crop the dataset to areas of interest rather than run the analytics over the whole of Denmark. The data was plugged into Picterra, and the training datasets were created.
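Cropping by centroid can be sketched as one small WMS request per farm instead of a pass over the full 1 TB mosaic. This is a minimal illustration, not the SEGES or Picterra pipeline. The endpoint `WMS_URL`, the layer name, the 200 m window around each centroid and the CRS `EPSG:25832` (ETRS89 / UTM zone 32N) are all assumptions. Only the GetMap parameter names come from the OGC WMS 1.3.0 standard. The image width and height are derived from the window size so that each pixel still covers 25 cm.

```python
from urllib.parse import urlencode

# Hypothetical endpoint and layer name: the real WMS details are not given in the article.
WMS_URL = "https://example.org/wms"
LAYER = "orthophoto"

RESOLUTION_M = 0.25  # 25 cm ground resolution, as stated in the article
HALF_SIZE_M = 100.0  # assumption: a 200 m x 200 m window around each farm centroid


def farm_bbox(x: float, y: float, half_size: float = HALF_SIZE_M) -> tuple[float, float, float, float]:
    """Square bounding box (minx, miny, maxx, maxy) centred on a projected centroid, in metres."""
    return (x - half_size, y - half_size, x + half_size, y + half_size)


def getmap_url(x: float, y: float, crs: str = "EPSG:25832") -> str:
    """Build a WMS 1.3.0 GetMap request for the window around one farm centroid."""
    minx, miny, maxx, maxy = farm_bbox(x, y)
    # Number of pixels needed so that each pixel still covers 25 cm on the ground.
    width = round((maxx - minx) / RESOLUTION_M)
    height = round((maxy - miny) / RESOLUTION_M)
    params = {
        "SERVICE": "WMS",
        "VERSION": "1.3.0",
        "REQUEST": "GetMap",
        "LAYERS": LAYER,
        "STYLES": "",
        "CRS": crs,  # assumed projected CRS with easting/northing axis order
        "BBOX": f"{minx},{miny},{maxx},{maxy}",
        "WIDTH": width,
        "HEIGHT": height,
        "FORMAT": "image/png",
    }
    return f"{WMS_URL}?{urlencode(params)}"
```

For example, `getmap_url(720000.0, 6170000.0)` requests an 800 x 800 pixel image, since 200 m / 0.25 m = 800. Looping over the 34,000 centroids then gives one request per farm.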

Interestingly, Picterra builds its platform around the concept of low-shot learning, which aims to deliver good results from a small number of labelled training examples. The platform offers a set of pre-trained models, training-data augmentation workflows and a well-structured GPU architecture. Together these let you apply transfer learning effectively and quickly build new object classes on top of existing models. Once you have labelled even a few objects of each class, you don't need to wait hours to test the model. You get results within minutes and can judge how much more training data is still required.
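Picterra's exact method isn't described here. One well-known way to get useful results from a handful of labels is to reuse a pre-trained network as a frozen feature extractor, then classify new samples by their distance to per-class "prototypes", where each prototype is the mean embedding of that class's few labelled examples. The sketch below shows only that last step, in plain Python. The embedding vectors, class names and the nearest-prototype rule are illustrative assumptions, not Picterra's implementation.

```python
import math

Vector = list[float]


def mean_vector(vectors: list[Vector]) -> Vector:
    """Element-wise mean of equal-length feature vectors."""
    n = len(vectors)
    return [sum(values) / n for values in zip(*vectors)]


def build_prototypes(support: dict[str, list[Vector]]) -> dict[str, Vector]:
    """One prototype per class: the mean embedding of its few labelled examples."""
    return {label: mean_vector(vecs) for label, vecs in support.items()}


def classify(embedding: Vector, prototypes: dict[str, Vector]) -> str:
    """Assign the class whose prototype is nearest in Euclidean distance."""
    return min(prototypes, key=lambda label: math.dist(embedding, prototypes[label]))
```

As an example, take two labelled examples per class: `support = {"covered": [[0.9, 0.1], [0.8, 0.2]], "uncovered": [[0.1, 0.9], [0.2, 0.7]]}`. Then `classify([0.7, 0.3], build_prototypes(support))` returns `"covered"`. Real embeddings would have hundreds of dimensions and would come from the pre-trained model. Adding a class only means averaging a few more vectors, with no retraining of the network itself.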

FYI: if you have ever played with Deep Learning without a fully automated data pipeline, you will know how painful and time-consuming it really is to label data, train models and test them.

For this job, SEGES labelled just 56 slurry tanks, using two object classes for covered and uncovered reservoirs. From this input, the engine detected about 26,000 slurry tanks with high accuracy (recall > 90% and precision > 85%). A heatmap of emissions was then created from these detections.
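The two quality figures follow the standard definitions. Precision is the share of detected tanks that really are slurry tanks. Recall is the share of real tanks that the model found. This is a minimal sketch, assuming detections have already been matched against a manually checked validation sample. The article doesn't say how SEGES validated the results, and the counts below are hypothetical.

```python
def precision_recall(tp: int, fp: int, fn: int) -> tuple[float, float]:
    """Return (precision, recall) from true-positive, false-positive and false-negative counts."""
    # Precision = TP / (TP + FP): the share of detections that are real tanks.
    precision = tp / (tp + fp) if tp + fp else 0.0
    # Recall = TP / (TP + FN): the share of real tanks that were detected.
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical validation counts, not SEGES's figures.
precision, recall = precision_recall(tp=91, fp=14, fn=9)
print(f"precision={precision:.3f}, recall={recall:.3f}")
```

These hypothetical counts would clear both reported thresholds: recall is 0.91 and precision is about 0.867. Read the same way, a precision above 85% on roughly 26,000 detections means that at most about 15% of them, around 3,900, would be false alarms, provided the validation sample is representative.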

The project is a great example of how Deep Learning is already changing the way geoscience is done. Until recently, the entry barrier to applying neural networks in our geoanalytics workflows was too high. It required an expensive GPU server setup, data scientists able to build data pipelines in a geospatial environment, and tons of training data. With platforms like Picterra, Deep Learning is becoming accessible to the geospatial community… and the guys from Picterra have just released a QGIS plugin to make it even easier for all of us.

Play around with the plugin and let me know your thoughts in the comments below.

Did you like this article about the application of AI to satellite data? Read more and subscribe to our monthly newsletter!

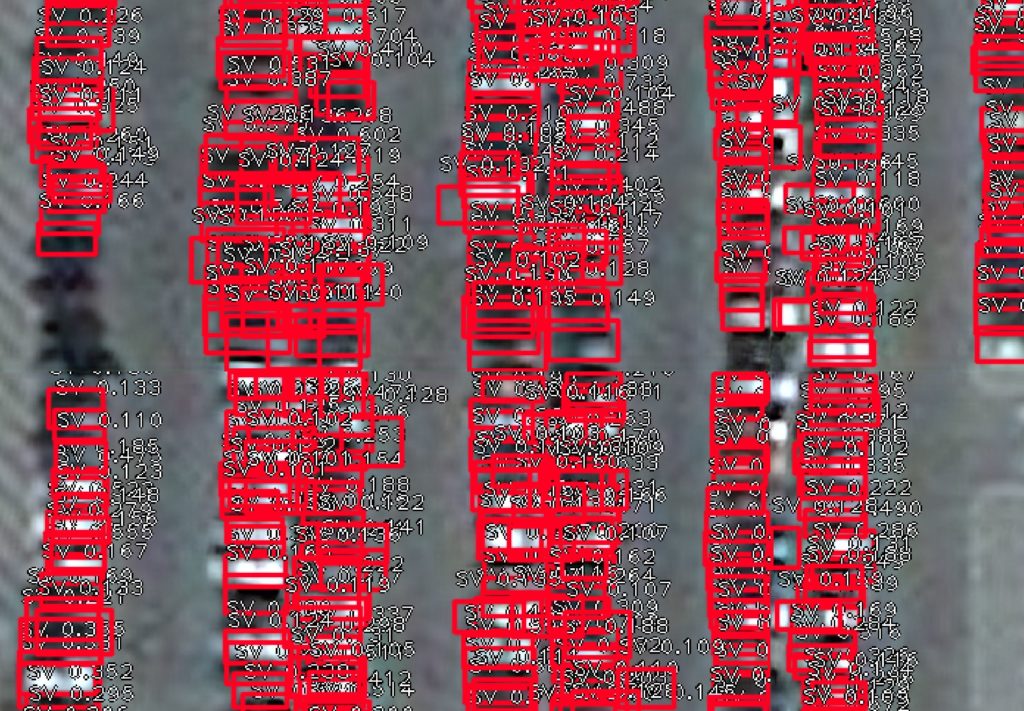

or some cars mixed with rooftop infrastructure:

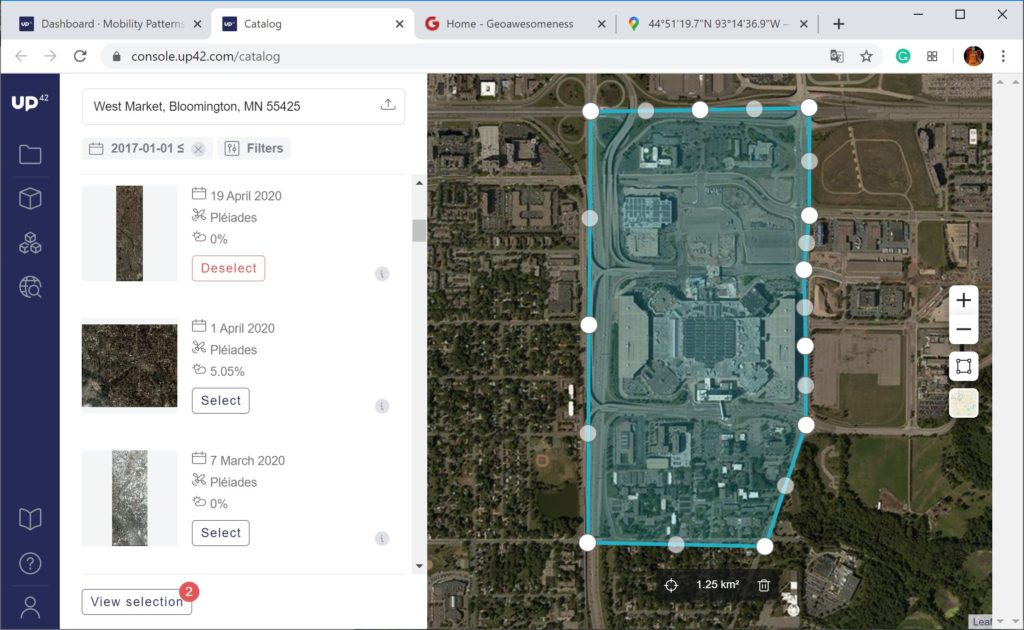

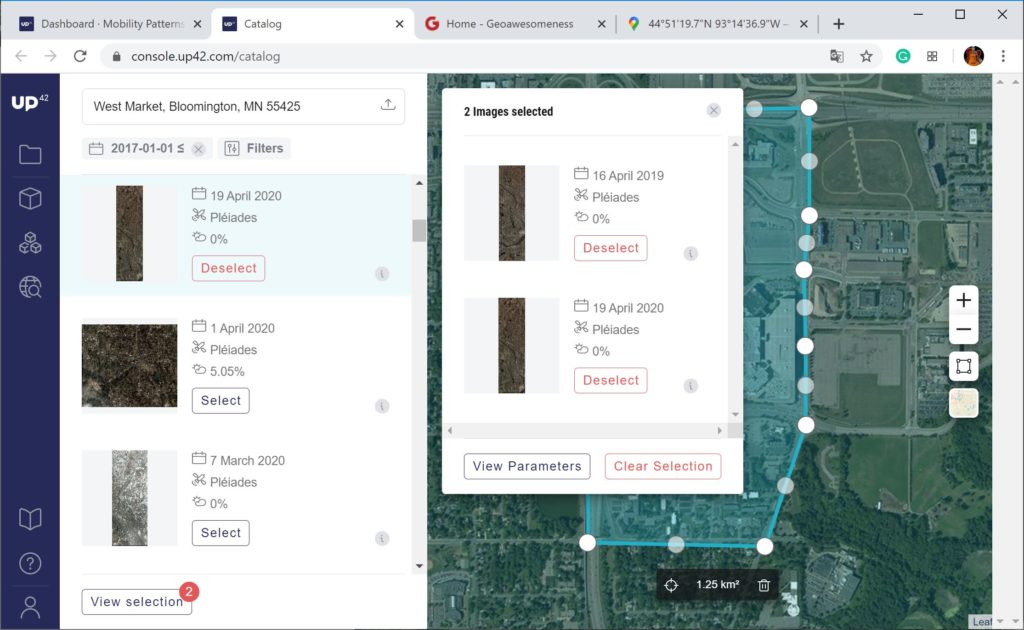

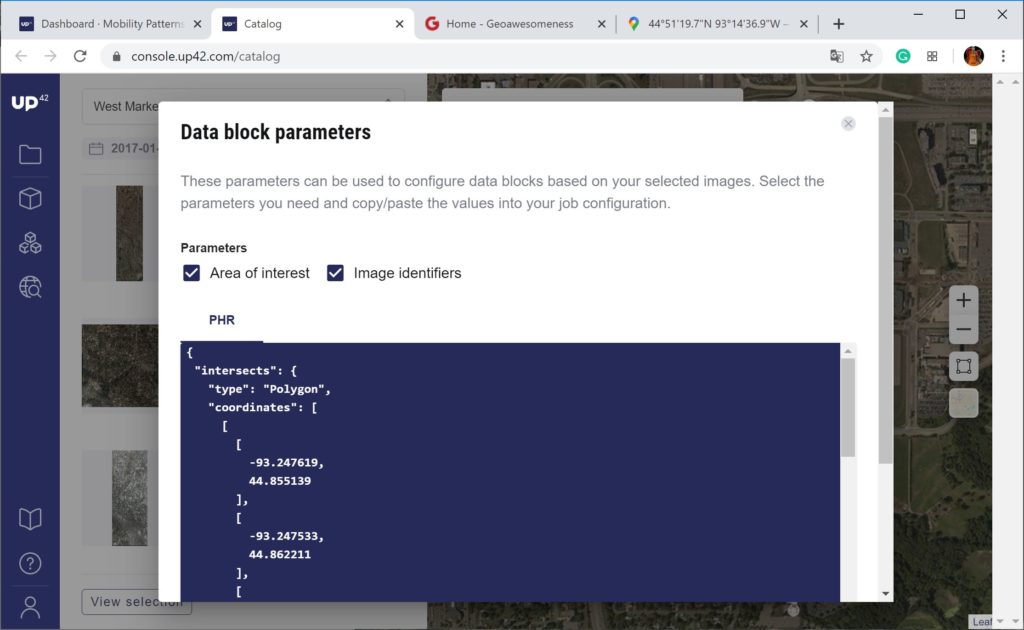

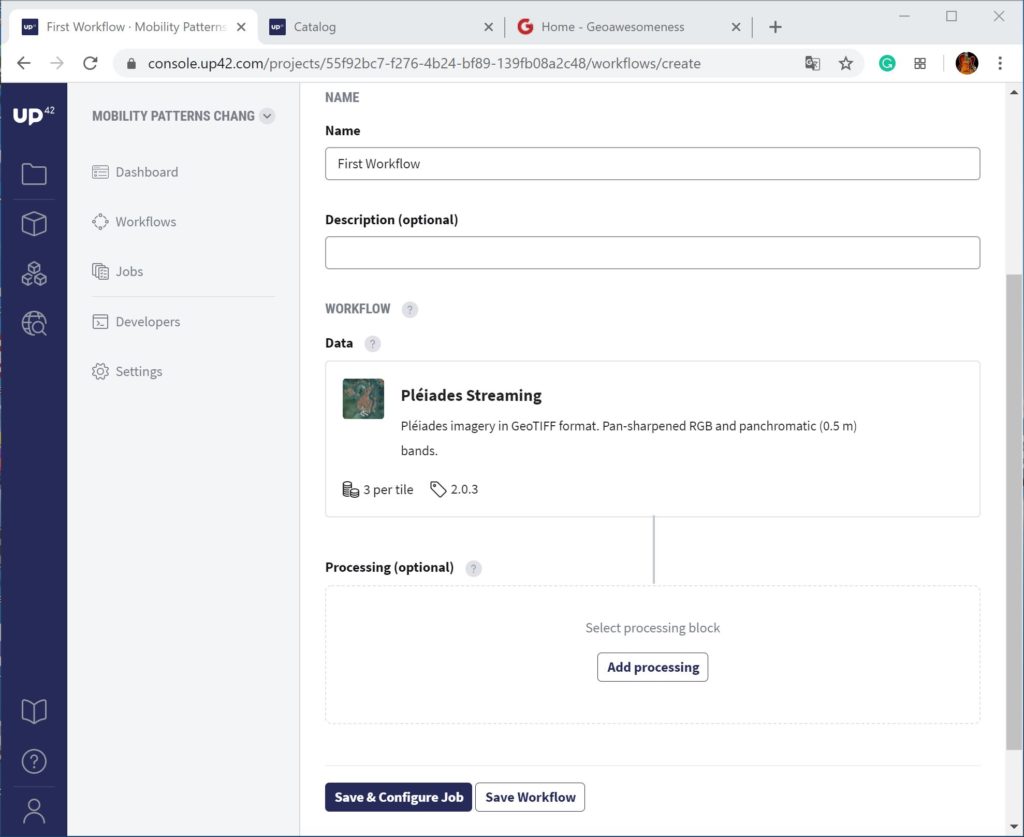

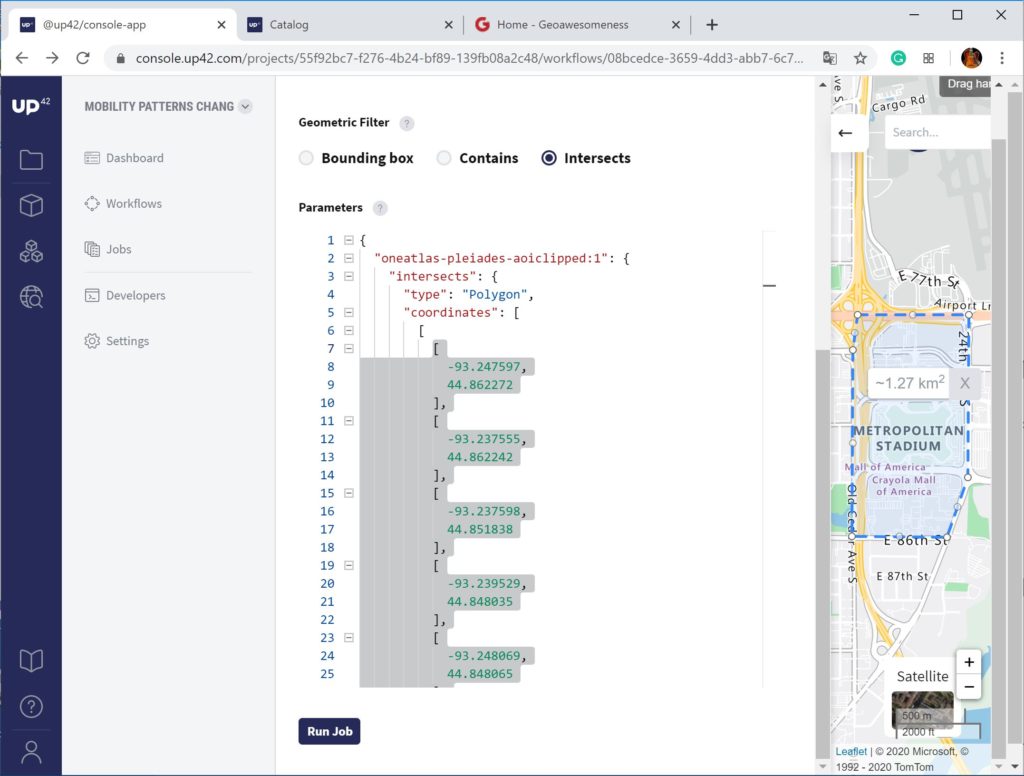

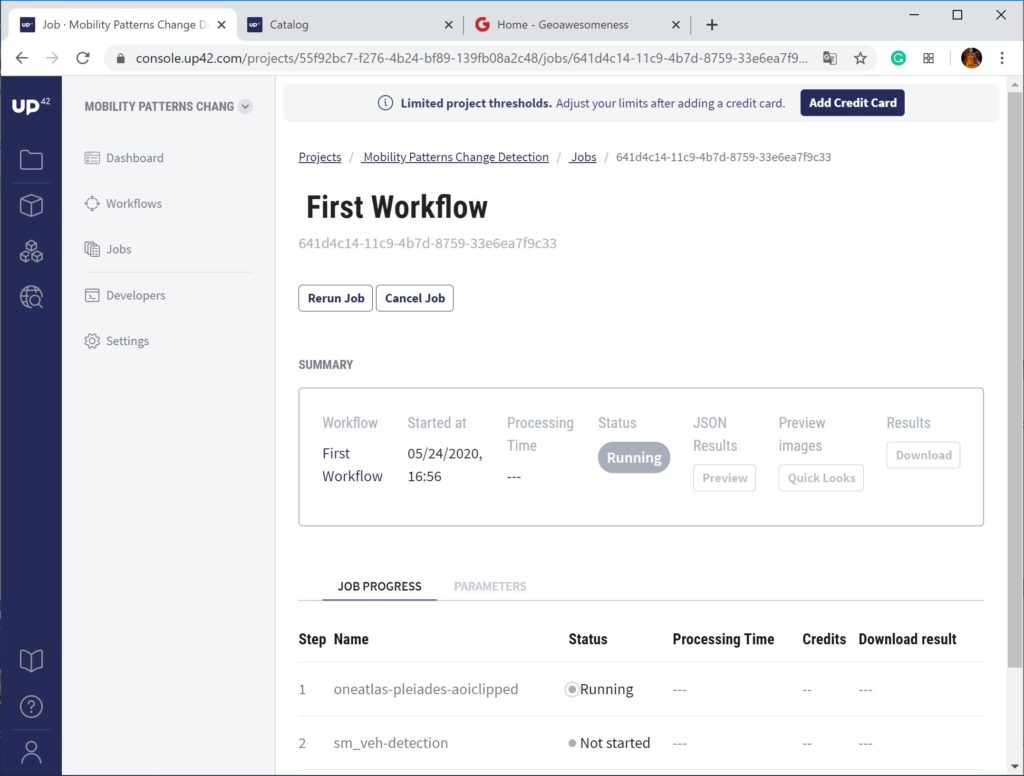

These cases, however, had quite a low confidence level, which fortunately is a parameter of every detected object. The average confidence level of all observations in my datasets was 0.25 across 3,001 objects, so I decided to filter out objects with a confidence level below 0.5. I was left with 327 vehicles detected on April 19, 2019 and 109 on April 16, 2020, which is a 3x drop!
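The filtering step above amounts to a threshold on each detection's confidence score, followed by a count per acquisition date. This is a minimal sketch, assuming the detections were exported as (date, confidence) records. The `Detection` class, its field names and the `drop_factor` helper are hypothetical, since the detector's output format isn't given here.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical record for one detected object."""
    date: str          # acquisition date of the image, e.g. "2019-04-19"
    confidence: float  # detector score in [0, 1]


def count_confident(detections: list[Detection], threshold: float = 0.5) -> dict[str, int]:
    """Count detections per date, dropping those with confidence below the threshold."""
    counts: dict[str, int] = {}
    for det in detections:
        if det.confidence >= threshold:  # "below 0.5" is filtered out, so 0.5 itself is kept
            counts[det.date] = counts.get(det.date, 0) + 1
    return counts


def drop_factor(counts: dict[str, int], before: str, after: str) -> float:
    """How many times fewer confident detections there are on `after` than on `before`."""
    return counts[before] / counts[after]
```

Plugging in the filtered counts from the text, `drop_factor({"2019-04-19": 327, "2020-04-16": 109}, "2019-04-19", "2020-04-16")` gives 327 / 109 = 3.0, the reported 3x drop.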