Processing “tons of data / big data” while you are sleeping: GDAL and Shell Scripting

Handling and processing big data is awesome, but it always raises questions about physical system capacity, processing software, memory availability, algorithm efficiency, processing time and so on. If any of these goes wrong, oh dear, you are in trouble with big data.

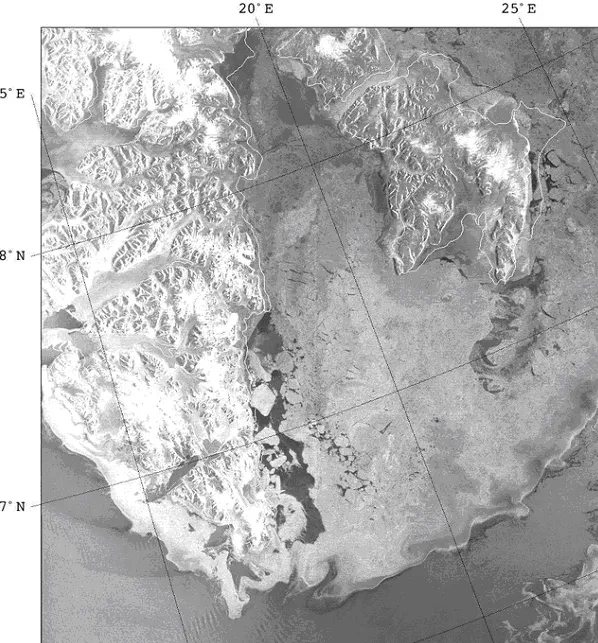

Among geo-people, dealing with satellite, raster or other gridded data is very common. Nowadays most freely available large datasets come in grid format, and if it is a spatio-temporal dataset, oh boy, it can be terabytes of data with billions of records, which is a real nightmare. One example is GRIB (GRIdded Binary, or General Regularly-distributed Information in Binary form) data: basically a weather dataset, very useful for any sort of research involving temperature, humidity, precipitation and so on. You can get the daily data for free at 0.5×0.5 degree spatial resolution for the whole world. So now you know where to get a huge amount of free data, but the question is how to process this mass of data.

To make a long story short, let's pick a question to answer: “How can we create a weekly long-term average (LTA) from 20 years of daily data, and what do we need to make the processing efficient?” The simplest way is to use a shell script together with the GDAL library. Following are the steps and directions:

First, download the daily dataset with the GRIB API (maybe with a little bit of shell scripting to name and organize the files more conveniently) for the time period and area we are interested in. Then create a grid with the gdal_grid utility and write it as GeoTIFF or any other format you are interested in (we can also do the calculations point-wise and grid the result at the end).
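
GDAL also ships a GRIB driver, so files that are already gridded can often be converted straight to GeoTIFF with gdal_translate; gdal_grid is mainly for interpolating scattered points, which matches the point-wise route mentioned above. A minimal sketch of the conversion loop, assuming the downloads are named `YYYYMMDD.grb` and the variable of interest sits in band 1 (both hypothetical; check your files with gdalinfo first):

```bash
#!/usr/bin/env bash
# Sketch: convert daily GRIB files to GeoTIFF with gdal_translate.
# Assumptions (hypothetical): inputs are <grib_dir>/YYYYMMDD.grb and the
# variable of interest is band 1 (inspect with `gdalinfo file.grb`).
set -euo pipefail

grib_to_tif() {
  local grib_dir=$1 out_dir=$2 f name
  mkdir -p "$out_dir"
  for f in "$grib_dir"/*.grb; do
    [[ -e $f ]] || continue            # the glob matched nothing
    name=$(basename "$f" .grb)
    # -of GTiff: write GeoTIFF; -b 1: copy only band 1; -q: no progress bar
    gdal_translate -q -of GTiff -b 1 "$f" "$out_dir/$name.tif"
  done
}
```

For example, `grib_to_tif ./grib ./tif` writes `./tif/20010101.tif` for `./grib/20010101.grb`. A GRIB file can hold many messages (bands), so picking the right band number is the part to verify for your own downloads.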

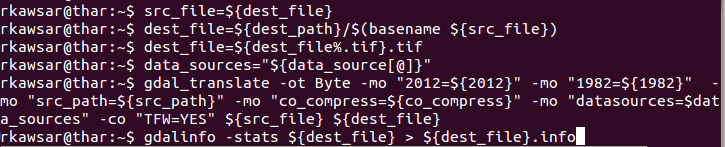

For convenience we can define three directories: src_path (where the GeoTIFFs are), stage_dir (the staging area where intermediate processing files are kept) and dest_path (where we want to find the output). Besides the bash script, I have used some GDAL utilities such as gdal_calc.py, gdalinfo and gdal_translate. Details are discussed below:

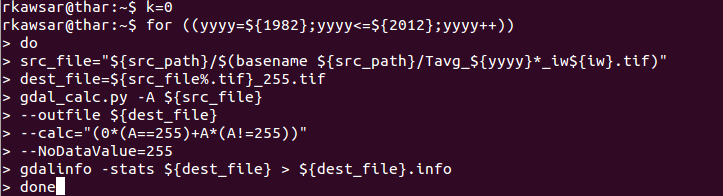
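
A possible way to set up the three directories, plus a small helper that lists one week's input files across all years. The root `BASE_DIR` and the naming scheme `Tavg_<year>_w<iw>.tif` are hypothetical choices for illustration, not the author's layout:

```bash
#!/usr/bin/env bash
# Sketch of the three working directories. BASE_DIR and the file naming
# scheme Tavg_<year>_w<iw>.tif are hypothetical; adapt to your layout.
set -euo pipefail

base_dir=${BASE_DIR:-$PWD/lta_work}
src_path=$base_dir/src        # input GeoTIFFs (one weekly Tavg per year)
stage_dir=$base_dir/stage     # intermediate files
dest_path=$base_dir/dest      # final LTA rasters
mkdir -p "$src_path" "$stage_dir" "$dest_path"

# Print the input files for week $1, one per line, sorted by year
# (bash sorts glob results, and the year is the first varying field).
week_files() {
  local iw f
  iw=$(printf '%02d' "$((10#$1))")   # 7 and 07 both become 07
  for f in "$src_path"/Tavg_*_w"$iw".tif; do
    if [[ -e $f ]]; then printf '%s\n' "$f"; fi
  done
}
```

The `10#` prefix forces base 10, so a zero-padded week such as `08` is not misread as an invalid octal number.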

# When processing raster datasets, how to deal with no-data pixels is always a question. Here is one way to assign 255 as the NoDataValue to the different unwanted pixels:

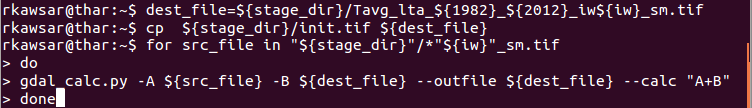

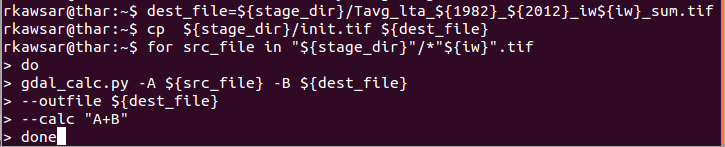
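
A sketch of this step with gdal_calc.py, assuming the rasters are 8-bit (which a 255 NoData value suggests) and that "unwanted" means outside a valid range `VALID_MIN` to `VALID_MAX`. The range and its defaults are hypothetical; swap in whatever rule defines unwanted pixels in your data:

```bash
#!/usr/bin/env bash
# Sketch: flag unwanted pixels as 255 and declare 255 as NoData.
# Assumption (hypothetical): "unwanted" means outside [VALID_MIN, VALID_MAX].
set -euo pipefail

valid_min=${VALID_MIN:-0}
valid_max=${VALID_MAX:-254}

# gdal_calc.py expression: keep A where it is in range, else write 255.
# Comparisons give boolean arrays that act as 0/1 masks ('*' = AND, '+' = OR).
nodata_expr() {
  local lo=$1 hi=$2
  printf 'A*((A>=%s)*(A<=%s)) + 255*((A<%s)+(A>%s))\n' "$lo" "$hi" "$lo" "$hi"
}

set_nodata() {
  local in=$1 out=$2
  gdal_calc.py -A "$in" --outfile="$out" \
    --calc="$(nodata_expr "$valid_min" "$valid_max")" \
    --NoDataValue=255 --type=Byte --overwrite
}
```

Usage would look like `set_nodata in.tif out_nd.tif` (file names hypothetical). Using plain arithmetic on masks keeps the expression free of NumPy function calls.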

# To create a long-term temperature average, we first need to summarize (add up) the weekly Tavg images over all years. We do that here:

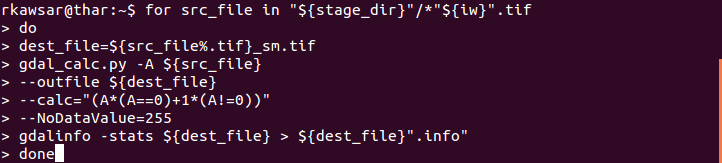
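
One way to add up a week's Tavg images with gdal_calc.py, sketched under two assumptions: unwanted pixels carry 255, and this helper maps its inputs to the letters A to Z (20 years fit in A to T). The function names are hypothetical. Two details matter here: NoData pixels should add 0 rather than blank the whole pixel, and 8-bit inputs must be promoted before summing so they cannot overflow:

```bash
#!/usr/bin/env bash
# Sketch: add up one week's Tavg images over all years with gdal_calc.py.
# Assumptions (hypothetical): 255 marks unwanted pixels; this helper maps
# inputs to the letters A..Z, so it accepts at most 26 files.
set -euo pipefail

letters=(A B C D E F G H I J K L M N O P Q R S T U V W X Y Z)

# Builds "1.0*(A!=255)*A + 1.0*(B!=255)*B + ...": NoData pixels add 0,
# and the 1.0 factor promotes to float so 8-bit inputs cannot overflow.
sum_valid_expr() {
  local n=$1 i expr=""
  for (( i = 0; i < n; i++ )); do
    expr+="${expr:+ + }1.0*(${letters[i]}!=255)*${letters[i]}"
  done
  printf '%s\n' "$expr"
}

sum_tavg() {
  local out=$1; shift
  local args=() i=0 f
  (( $# >= 1 && $# <= ${#letters[@]} )) || { echo "sum_tavg: need 1-26 inputs" >&2; return 1; }
  for f in "$@"; do
    args+=("-${letters[i]}" "$f")    # -A file1 -B file2 ...
    i=$((i + 1))
  done
  # --hideNoData: ignore input NoData so one bad year does not blank a pixel
  gdal_calc.py "${args[@]}" --outfile="$out" --calc="$(sum_valid_expr "$#")" \
    --type=Float32 --hideNoData --overwrite
}
```

For example, `sum_tavg Tavg_sum_w07.tif $(week_files 7)` with a file-listing helper like the one sketched earlier (all names hypothetical).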

# After creating the summarized image, we need a status bitmap image (SM) with 0 for unusable and 1 for usable pixel values; with this SM image we can exclude the unwanted pixels from the LTA estimation:
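
Written as a formula, one reading of how SM excludes pixels: for a fixed week iw, assume each year k = 1 to 20 contributes one weekly Tavg image T_k in which unwanted pixels are already set to 255. Then

$$
SM_k(x,y) = \begin{cases} 1 & \text{if } T_k(x,y) \neq 255 \\ 0 & \text{otherwise} \end{cases}
\qquad
\mathrm{LTA}_{iw}(x,y) = \frac{\sum_{k=1}^{20} SM_k(x,y)\,T_k(x,y)}{\sum_{k=1}^{20} SM_k(x,y)}
$$

A pixel whose denominator is 0 (unusable in every year) has no LTA and stays NoData.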
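
A sketch of the SM step with gdal_calc.py, assuming unusable pixels were set to 255 earlier; the function names are hypothetical. The `--hideNoData` flag is the important part: without it, gdal_calc.py writes its output NoData value (not 0) wherever an input pixel is NoData:

```bash
#!/usr/bin/env bash
# Sketch: status bitmap SM (1 = usable, 0 = unusable) per input raster.
# Assumption (hypothetical): unusable pixels were set to 255 earlier.
set -euo pipefail

sm_expr='1*(A!=255)'   # boolean mask turned into 0/1 integers

make_sm() {
  local in=$1 out=$2
  # --hideNoData: treat 255 as an ordinary value so it becomes 0, not NoData
  gdal_calc.py -A "$in" --outfile="$out" --calc="$sm_expr" \
    --type=Byte --hideNoData --overwrite
}

# Build SM_<name> in out_dir for every input file given after it.
make_sm_all() {
  local out_dir=$1 f; shift
  for f in "$@"; do
    make_sm "$f" "$out_dir/SM_$(basename "$f")"
  done
}
```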

# Now we have to summarize the status bitmap (SM) images:

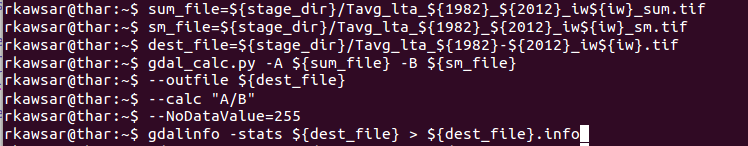
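
Adding up the SM bitmaps gives, per pixel, the number of usable years. A sketch, assuming the same A to Z letter mapping as for the Tavg sum (helper names hypothetical); since each SM is 0 or 1 and there are at most 26 inputs, an 8-bit output cannot overflow:

```bash
#!/usr/bin/env bash
# Sketch: count usable years per pixel by adding the SM bitmaps.
# This helper maps inputs to gdal_calc.py letters A..Z (max 26 inputs),
# so the count is at most 26 and fits in a Byte raster.
set -euo pipefail

letters=(A B C D E F G H I J K L M N O P Q R S T U V W X Y Z)

# Builds "A + B + C + ..." for n inputs.
plain_sum_expr() {
  local n=$1 i expr=""
  for (( i = 0; i < n; i++ )); do
    expr+="${expr:+ + }${letters[i]}"
  done
  printf '%s\n' "$expr"
}

sum_sm() {
  local out=$1; shift
  local args=() i=0 f
  (( $# >= 1 && $# <= ${#letters[@]} )) || { echo "sum_sm: need 1-26 inputs" >&2; return 1; }
  for f in "$@"; do
    args+=("-${letters[i]}" "$f")    # -A SM_1 -B SM_2 ...
    i=$((i + 1))
  done
  gdal_calc.py "${args[@]}" --outfile="$out" --calc="$(plain_sum_expr "$#")" \
    --type=Byte --overwrite
}
```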

# Finally, we use the summarized Tavg image and the summarized SM image to create the long-term average (LTA) image and save it in the destination folder:
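
The final division can be sketched as one gdal_calc.py call, with A as the summed Tavg and B as the summed SM. The output NoData value -9999 and the function name are hypothetical choices. `B+(B==0)` keeps the denominator at least 1, and pixels with no usable year are then overwritten with NoData:

```bash
#!/usr/bin/env bash
# Sketch: LTA = (sum of valid Tavg) / (number of usable years), per pixel.
# Pixels with zero usable years get the hypothetical NoData value -9999.
set -euo pipefail

lta_nodata=-9999
# A = summed Tavg, B = summed SM. (B+(B==0)) avoids dividing by zero;
# the second term writes the NoData value where B is 0.
lta_expr="(B>0)*A/(B+(B==0)) + (B==0)*(${lta_nodata})"

make_lta() {
  local sum_tavg=$1 sum_sm=$2 out=$3
  gdal_calc.py -A "$sum_tavg" -B "$sum_sm" --outfile="$out" \
    --calc="$lta_expr" --NoDataValue="$lta_nodata" --type=Float32 --overwrite
}
```

For example, `make_lta Tavg_sum_w07.tif SM_sum_w07.tif LTA_w07.tif` (file names hypothetical).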

Here the scripting ends. Now you can wrap these scripts in a loop over a global variable such as the International Week (iw). Run the script, then go to a party and sleep tension-free 🙂. Hopefully, over the next days you will get tons of data processed and stored in the destination folder.
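
A minimal driver for that loop, assuming iw is the ISO week number (1 to 53). `process_week` is a hypothetical stand-in for the per-week steps (NoData, sums, SM, LTA) and only prints a line here:

```bash
#!/usr/bin/env bash
# Sketch of the overnight driver. process_week is a hypothetical stand-in
# for the per-week pipeline; here it only reports progress.
set -euo pipefail

process_week() {
  local iw=$1
  echo "week $iw: done"          # replace with the real per-week steps
}

run_all_weeks() {
  local iw
  for iw in $(seq -w 1 53); do   # zero-padded 01..53; ISO years have 52 or 53 weeks
    process_week "$iw"
  done
}

# Run the loop only when the file is executed, not when it is sourced.
if [[ ${BASH_SOURCE[0]} == "$0" ]]; then
  run_all_weeks
fi
```

To let it run while you sleep, start it detached from the terminal, e.g. `nohup ./lta.sh > lta.log 2>&1 &`, and check `lta.log` in the morning.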