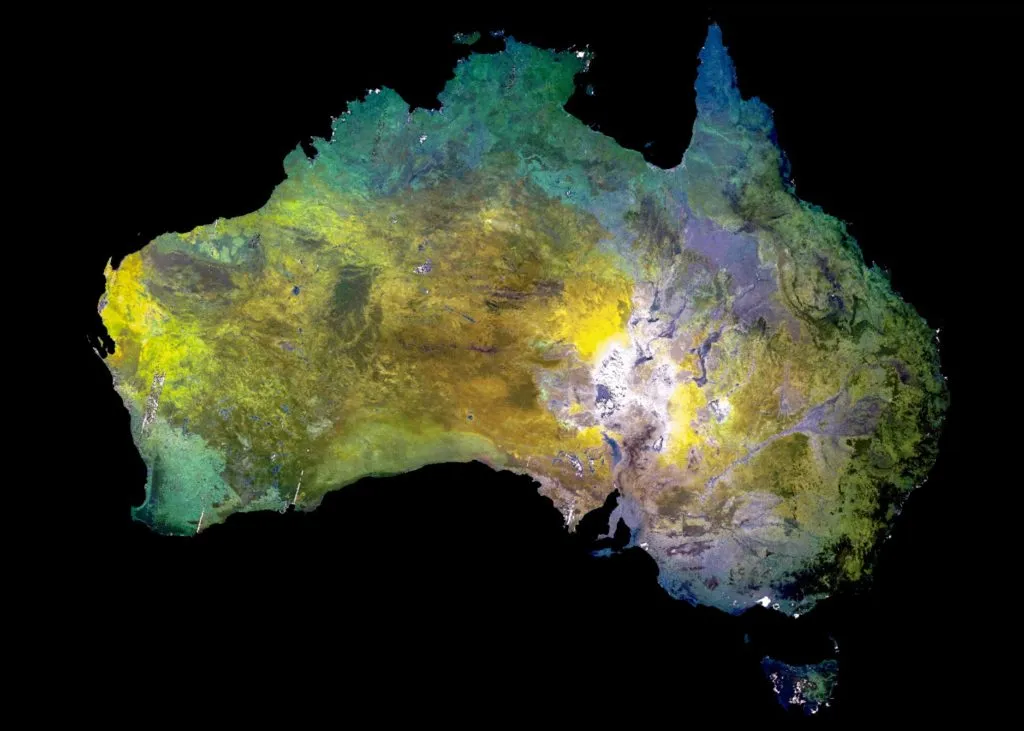

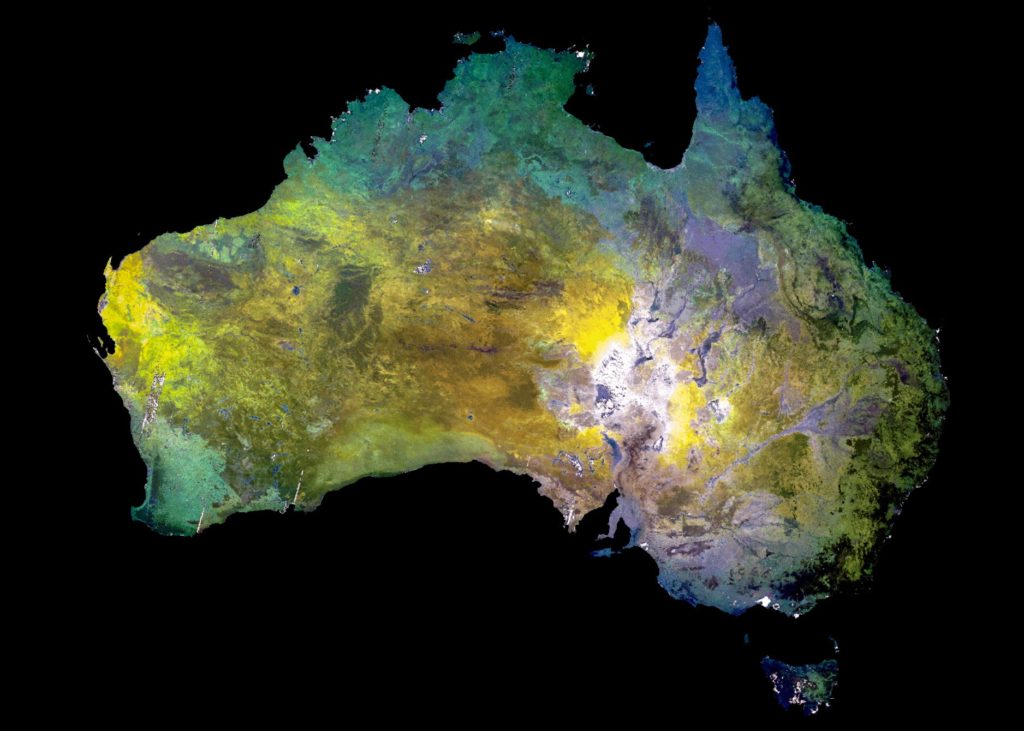

Descartes Labs created a "barest Earth" composite layer from imagery captured by the ASTER sensor to accelerate continental-scale mineral exploration. It provides 14 bands of the electromagnetic spectrum, ranging from visible to thermal infrared light. This spectral detail provides input data for automated classification of surface mineralogy.

Descartes Labs, a spatial analytics firm that uses machine learning and artificial intelligence to detect objects and patterns hidden inside petabytes of remotely sensed data, has been creating custom geospatial AI solutions for global enterprises for quite some time. Now the Santa Fe, New Mexico-based company is making its platform and tools publicly available, so that even non-traditional users can adopt geospatial AI as a core business competency.

The Descartes Labs Platform has been designed to handle nearly all geospatial modeling functions through a single cloud-based solution. It is made up of three components:

- Data Refinery: That hosts petabytes of analysis-ready geospatial data with the ability to rapidly ingest, clean, calibrate and benefit from any internal or third-party data source

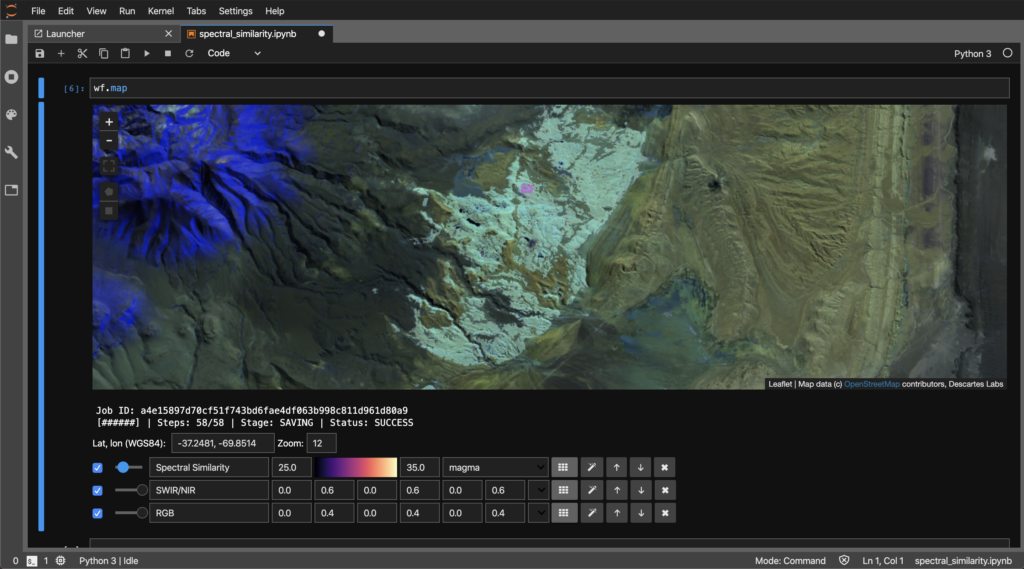

- Workbench: A cloud-based data science environment that combines the Descartes Labs Platform APIs, visualization tools, and a model repository with a hosted JupyterLab interface

- Applications: To give users the ability to rapidly deploy models and applications

The Descartes Labs Workbench can prioritize areas for mineral exploration

The company believes its solution can prove especially valuable for commodity-focused companies facing sustainability and efficiency challenges. It would allow organizations to quickly evaluate model output, speed up development and proof-of-concept creation, and transform their decision-making, ultimately saving them considerable time and millions of dollars.

To understand how the platform will unlock the power of global-scale geospatial data for organizations that have never been able to access it before, we talked to Sam Skillman, head of engineering at Descartes Labs.

What are some of the challenges that you strived to solve while building custom geospatial AI solutions for some of your early customers? How have those lessons been incorporated into the Descartes Labs Platform?

Due to the complex nature of geospatial data, many businesses have hit roadblocks when trying to apply geospatial AI at enterprise scale on their own. While this data adds invaluable insights not available from other sources, it can also be challenging to acquire and work with.

It has traditionally been very hard to find, access, and use remote sensing data from satellites or aerial sensors, especially at a large scale and across multiple data sources. The Data Refinery component of the Descartes Labs Platform makes aggregating, normalizing, and filtering that data easy for customers.

It can also be difficult for data scientists to build robust modeling environments that interact quickly and easily with storage and compute resources. We've made a lot of progress here by building a hosted modeling environment using JupyterLab that sits next to the Descartes Labs Platform APIs. With the data readily available in the Data Refinery, quick access to the modeling environment in Workbench, and everything running on top-tier cloud compute and storage resources, Descartes Labs is a trusted vendor that can solve business problems for companies of all sizes.

We developed the Descartes Labs Platform to give data scientists and their line-of-business colleagues the best geospatial data and modeling tools in one complete package, something no other company has successfully done before. It is the first comprehensive cloud-based geospatial analytics platform to provide the data, scalable compute, and modeling tools in a single solution that helps turn AI into a core competency. As a result, data scientists can build models rapidly using data that is already cleaned, processed, and ready for use, while retaining the flexibility to add their own data and ultimately transform their decision-making.

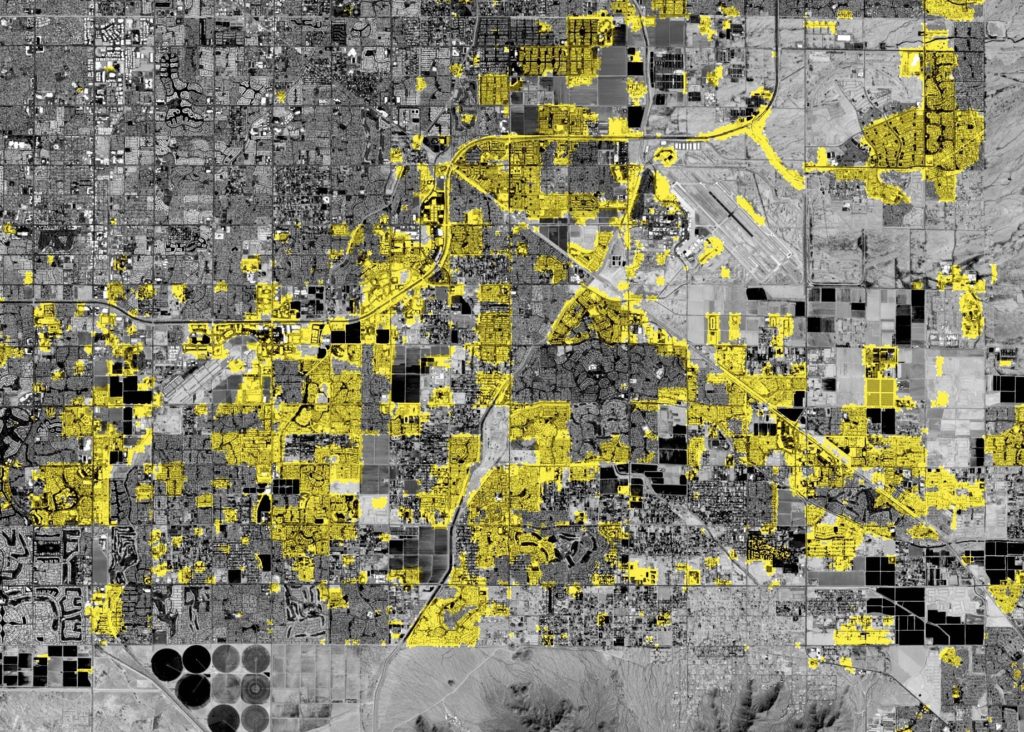

The Descartes Labs Workbench imagery above shows urban expansion in Phoenix over the last decade, detected using Landsat satellite images. Visualizing these changes can provide insights for urban planning.

Our aim when building the platform was to develop custom geospatial AI solutions for major businesses, proving out multiple use cases for the platform technology. By enabling the rapid and collaborative development of global-scale commodity analytics, forecasts, monitoring, and exploration capabilities, we are able to help enterprises understand the physical world at scale in near real-time.

Give us a real-world example of how an industry could benefit from your platform.

The drive for continuous improvement in physical forecasting and prediction has become a primary goal for producers and consumers across the full spectrum of global trade. This is especially true in 2020, as companies face more rigorous sustainability and efficiency challenges from input materials, manufacturing, or downstream product use. In many cases, sustainability and efficiency are two sides of the same coin. Companies often find it difficult to generate revenue and improve margins without making progress on both fronts.

Through geospatial data and predictive analytics, the platform provides transparency into supply chains and the broader market, supporting key business decisions. The modeling tool enables forecasting across industries, including agriculture, energy, sustainability, mining, shipping, financial services, and insurance, supporting everything from agricultural monitoring to mineral exploration. This is especially critical for commodity-focused companies facing sustainability and efficiency challenges, because it can save them time and millions of dollars by helping them transform their businesses quickly and cost-effectively.

We have energy customers using the platform today to solve all sorts of business problems. These range from something as simple as having one system that rapidly aggregates and serves geospatial datasets to multiple users and applications, to specific workflows such as pipeline encroachment monitoring, well pad detection, and methane monitoring. Source data across many resolutions and timescales, easy-to-use modeling tools, and powerful storage and compute together mean that energy users can start building production models quickly, without needing as much domain-specific expertise or cloud computing experience.

This image shows well pad locations in the Permian Basin, detected on the Descartes Labs Platform in high-resolution imagery. Knowing where well pads are can help detect methane leaks.

Let’s talk about the trends driving the geospatial AI revolution…

Natural resources and supply chain management have become more global, more competitive, and increasingly subject to sustainable investing standards. The rise of climate-related financial risk investing is changing incentives for public companies. At the same time, supply and demand shocks are being priced in more rapidly and volatility is lower in today's highly connected global environment. Adverse weather and changing environmental conditions make the picture even more opaque. They pose risks to physical assets and increase the need for more accurate assessments of insured value. Given how many gaps there are in the global picture, these uncertainties are magnified when it comes to the impact on your market, the value of your products, your costs, and the risks to your bottom line. Most critically, the window to decide and act is dramatically shorter today than it was even a few years ago. The market is learning faster and faster.

Add to this the incredible growth in the number and variety of remote sensing instruments being launched or deployed, and there is a tremendous opportunity to bring together very disparate datasets to answer some of today's most important questions.

Geospatial AI can help address these challenges, and it has therefore become more of a requirement for commodity-focused companies. With many of them facing ongoing sustainability and efficiency challenges, it is becoming harder and harder to improve margins without this capability. In this context, we equate geospatial AI with the prediction of phenomena in the physical world.
